Hi,

I'm testing a new system and have tried different NVMe disks to see what the performance difference is.

I'm not sure whether I'm hitting a protocol limit, so I'd like some guidance from the community (beyond the resources/stickies I've already read).

The system specs:

- Supermicro 1114-WN10RT
- AMD EPYC 7402P (Rome)
- 512 GB 3200 MHz RAM
- 10 Gbit NIC (iSCSI only)

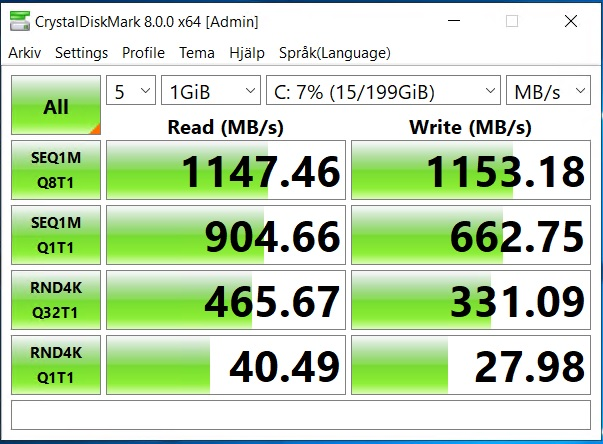

With four PM983 disks (1.9 TB NVMe U.2) in a striped mirror (two mirror vdevs), exported over iSCSI as a 1 TB zvol (block size 64 KiB, sync=always), I get the following performance from a VM (the only one on the storage):

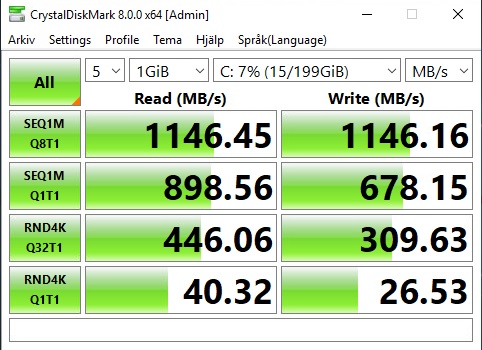

With ten PM1733 disks (1.9 TB NVMe U.2) in a striped mirror (five mirror vdevs), exported over iSCSI as a 5 TB zvol (block size 64 KiB, sync=always), I get roughly the same numbers.

Is this expected, or am I hitting some kind of limit?

I wouldn't expect more from the first test, since the link is only 10 Gbit at the moment.

These are just basic tests; I'm still waiting on a dual 40 Gbit NIC so I can test multipathing and more. Still, I was a little puzzled that there wasn't more difference between the two tests, given how different the disks are performance-wise.
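As a rough check on why both pools land on the same numbers, here is a minimal Python sketch comparing idealized pool write bandwidth with the link ceiling. It assumes large sequential writes and ignores ZFS, TCP and iSCSI overhead. The per-disk figure is taken from the 8192 kbytes line of the diskinfo output below; diskinfo's "Mbytes/s" works out to MiB/s (8192 KiB / 3696.7 µs ≈ 2164 MiB/s). The function names are made up for illustration.

```python
MIB = 1024 * 1024


def link_mib_s(gbit_per_s: float, links: int = 1) -> float:
    """Raw line rate in MiB/s, before Ethernet/TCP/iSCSI overhead."""
    return gbit_per_s * 1e9 * links / 8 / MIB


def pool_write_mib_s(mirror_vdevs: int, per_disk_mib_s: float) -> float:
    """Idealized striped-mirror write bandwidth: every block is written to all
    members of its mirror, so bandwidth scales with the number of vdevs."""
    return mirror_vdevs * per_disk_mib_s


# Per-disk figures: 8192 KiB line of `diskinfo -wS` from this post (MiB/s).
PM983_MIB_S = 2164.1
PM1733_MIB_S = 2455.3

pools = {
    "4x PM983, 2 mirror vdevs": pool_write_mib_s(2, PM983_MIB_S),
    "10x PM1733, 5 mirror vdevs": pool_write_mib_s(5, PM1733_MIB_S),
}
links = {
    "1x 10 Gbit": link_mib_s(10),
    "2x 40 Gbit (multipath)": link_mib_s(40, links=2),
}

# Whichever side is slower sets the ceiling the VM can see.
for pool_name, pool_bw in pools.items():
    for link_name, link_bw in links.items():
        bottleneck = "network" if link_bw < pool_bw else "pool"
        print(f"{pool_name} over {link_name}: "
              f"pool ~{pool_bw:.0f} MiB/s, link ~{link_bw:.0f} MiB/s "
              f"-> limited by {bottleneck} (~{min(pool_bw, link_bw):.0f} MiB/s)")
```

Under these assumptions both pools can write several times faster than a single 10 Gbit link (~1192 MiB/s raw) can carry, so near-identical results from the VM are what you'd expect. With 2x 40 Gbit, the smaller PM983 pool would likely become the limit first.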

I also ran diskinfo on the disks:


PM983:

```
# diskinfo -wS /dev/nvd3
/dev/nvd3
    512                           # sectorsize
    1920383410176                 # mediasize in bytes (1.7T)
    3750748848                    # mediasize in sectors
    0                             # stripesize
    0                             # stripeoffset
    SAMSUNG MZQLB1T9HAJR-00007    # Disk descr.
    S439NXXX901635                # Disk ident.
    Yes                           # TRIM/UNMAP support
    0                             # Rotation rate in RPM

Synchronous random writes:
       0.5 kbytes:     13.1 usec/IO =     37.3 Mbytes/s
         1 kbytes:     13.3 usec/IO =     73.5 Mbytes/s
         2 kbytes:     13.7 usec/IO =    142.9 Mbytes/s
         4 kbytes:     14.3 usec/IO =    274.1 Mbytes/s
         8 kbytes:     15.6 usec/IO =    499.9 Mbytes/s
        16 kbytes:     18.5 usec/IO =    843.2 Mbytes/s
        32 kbytes:     24.0 usec/IO =   1304.4 Mbytes/s
        64 kbytes:     34.2 usec/IO =   1826.7 Mbytes/s
       128 kbytes:     60.0 usec/IO =   2084.3 Mbytes/s
       256 kbytes:    117.3 usec/IO =   2132.0 Mbytes/s
       512 kbytes:    234.5 usec/IO =   2132.4 Mbytes/s
      1024 kbytes:    465.9 usec/IO =   2146.2 Mbytes/s
      2048 kbytes:    923.8 usec/IO =   2164.9 Mbytes/s
      4096 kbytes:   1840.1 usec/IO =   2173.8 Mbytes/s
      8192 kbytes:   3696.7 usec/IO =   2164.1 Mbytes/s
```

PM1733:

```
# diskinfo -wS /dev/nvd0
/dev/nvd0
    512                           # sectorsize
    1920383410176                 # mediasize in bytes (1.7T)
    3750748848                    # mediasize in sectors
    0                             # stripesize
    0                             # stripeoffset
    SAMSUNG MZWLJ1T9HBJR-00007    # Disk descr.
    XXXXXXXXXXXXXX                # Disk ident.
    Yes                           # TRIM/UNMAP support
    0                             # Rotation rate in RPM

Synchronous random writes:
       0.5 kbytes:     20.6 usec/IO =     23.7 Mbytes/s
         1 kbytes:     20.3 usec/IO =     48.2 Mbytes/s
         2 kbytes:     19.1 usec/IO =    102.2 Mbytes/s
         4 kbytes:     13.8 usec/IO =    283.3 Mbytes/s
         8 kbytes:     15.3 usec/IO =    510.1 Mbytes/s
        16 kbytes:     17.2 usec/IO =    907.4 Mbytes/s
        32 kbytes:     20.7 usec/IO =   1508.6 Mbytes/s
        64 kbytes:     27.3 usec/IO =   2292.8 Mbytes/s
       128 kbytes:     50.4 usec/IO =   2481.3 Mbytes/s
       256 kbytes:    101.2 usec/IO =   2470.3 Mbytes/s
       512 kbytes:    202.2 usec/IO =   2473.0 Mbytes/s
      1024 kbytes:    403.0 usec/IO =   2481.3 Mbytes/s
      2048 kbytes:    813.3 usec/IO =   2459.2 Mbytes/s
      4096 kbytes:   1620.7 usec/IO =   2468.1 Mbytes/s
      8192 kbytes:   3258.2 usec/IO =   2455.3 Mbytes/s
```

Is there anything I can tweak to get better performance, or is this as good as it gets?

I did try a SLOG (RMS-300 8GB), but that gave slightly worse numbers than without one.
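With sync=always, every write has to be committed through the ZIL before it is acknowledged, so small-write performance tends to be bound by per-I/O latency rather than bandwidth. The diskinfo -wS numbers above follow directly from that: with one I/O in flight, IOPS = 1 / latency and throughput = size / latency. Here is a minimal Python sketch of that arithmetic, using a few latencies copied from the output (the helper names are hypothetical):

```python
KIB = 1024
MIB = 1024 * 1024


def qd1_iops(latency_us: float) -> float:
    """I/Os per second with one outstanding I/O: 1 / latency."""
    return 1e6 / latency_us


def qd1_mib_s(size_kib: float, latency_us: float) -> float:
    """Throughput in MiB/s for a given I/O size with one I/O in flight."""
    return size_kib * KIB * qd1_iops(latency_us) / MIB


# (I/O size in KiB, latency in usec) copied from the diskinfo -wS output above.
samples = {
    "PM983 4 KiB": (4, 14.3),
    "PM983 64 KiB": (64, 34.2),
    "PM1733 4 KiB": (4, 13.8),
    "PM1733 64 KiB": (64, 27.3),
}

for name, (size_kib, lat_us) in samples.items():
    print(f"{name}: ~{qd1_iops(lat_us):,.0f} IOPS, "
          f"~{qd1_mib_s(size_kib, lat_us):.0f} MiB/s")
```

The results land within rounding of diskinfo's own Mbytes/s column. A SLOG can generally only shorten sync writes if it commits them faster than the pool devices already do (about 14 µs per 4 KiB write here), so running the same diskinfo -wS test against the RMS-300 would show whether it has any headroom. Note that -w enables destructive write tests, so only run it against a device that holds no data.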

Kind Regards