I've always wanted to max out the 10GbE cards in both my NAS and my main machine. (YES, they are connected to each other directly, without a switch :) )

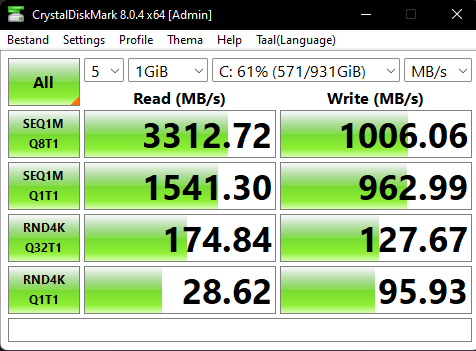

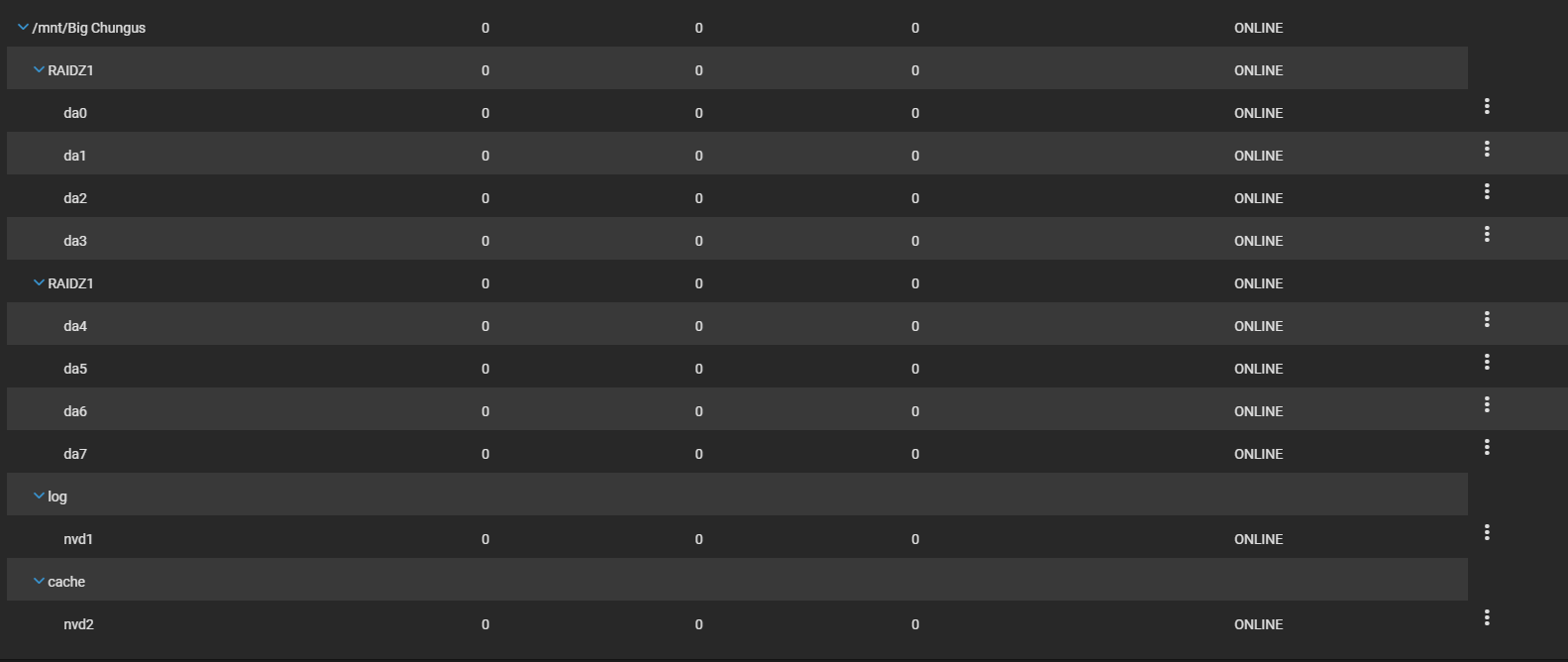

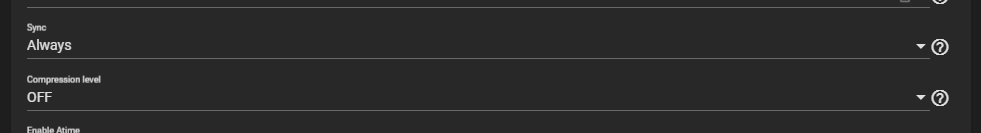

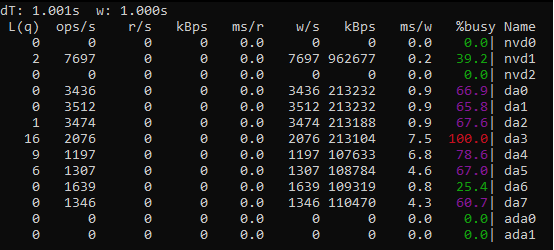

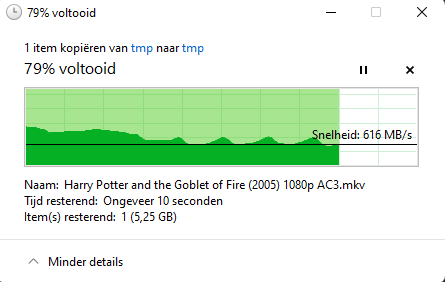

To achieve this, I got a nice little Intel Optane 900P 480 GB.

But why??? Why not?

I mostly work with big files: think movie rips (1080p, 4K) and lots of RAW pictures.

The NAS hardware:

```
Motherboard: Gigabyte Z370 AORUS GAMING 7-OP
CPU:         Intel i7-8086K
RAM:         64 GB DDR4 Corsair LPX
Drives:      4 x Seagate IronWolf 8 TB
             4 x WD Red Plus 4 TB
             connected to an LSI 9240-8i (IT mode)
Boot drive:  Intel Optane 32 GB
Optane:      Intel Optane 900P 480 GB
NIC:         HP/Intel X540-T2
```

The workstation:

```
CPU: Threadripper, 24 cores
RAM: 64 GB DDR4
SSD: Corsair Force MP600 1 TB
NIC: HP/Intel X540-T2
```

ALRIGHT,

First, some basic tests...

iperf: I ran 3 tests, a couple of minutes apart:

```
RUN 1:
WS:
------------------------------------------------------------
Client connecting to 10.10.1.2, TCP port 5001
TCP window size: 9.77 KByte (WARNING: requested 4.88 KByte)
------------------------------------------------------------
[  3] local 172.22.62.138 port 57874 connected with 10.10.1.2 port 5001
[ ID] Interval       Transfer     Bandwidth
[  3]  0.0-10.0 sec  1.16 GBytes   994 Mbits/sec
NAS:
[  1] local 10.10.1.2 port 5001 connected with 10.10.1.3 port 52066
[ ID] Interval       Transfer     Bandwidth
[  1] 0.00-10.00 sec  1.16 GBytes   994 Mbits/sec

RUN 2:
WS:
------------------------------------------------------------
Client connecting to 10.10.1.2, TCP port 5001
TCP window size: 9.77 KByte (WARNING: requested 4.88 KByte)
------------------------------------------------------------
[  3] local 172.22.62.138 port 57876 connected with 10.10.1.2 port 5001
[ ID] Interval       Transfer     Bandwidth
[  3]  0.0-10.0 sec  1.21 GBytes  1.04 Gbits/sec
NAS:
[  2] local 10.10.1.2 port 5001 connected with 10.10.1.3 port 52068
[ ID] Interval       Transfer     Bandwidth
[  2] 0.00-10.02 sec  1.21 GBytes  1.04 Gbits/sec

RUN 3:
WS:
------------------------------------------------------------
Client connecting to 10.10.1.2, TCP port 5001
TCP window size: 9.77 KByte (WARNING: requested 4.88 KByte)
------------------------------------------------------------
[  3] local 172.22.62.138 port 57878 connected with 10.10.1.2 port 5001
[ ID] Interval       Transfer     Bandwidth
[  3]  0.0-10.0 sec  1.11 GBytes   950 Mbits/sec
NAS:
[  3] local 10.10.1.2 port 5001 connected with 10.10.1.3 port 52070
[ ID] Interval       Transfer     Bandwidth
[  3] 0.00-10.00 sec  1.11 GBytes   949 Mbits/sec
```
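As a sanity check on the iperf numbers, here is a minimal Python sketch that compares each run with the nominal 10GbE line rate and applies the single-stream TCP bound (throughput ≤ window / RTT) to the 9.77 KByte window iperf reports. The run figures are copied from the output above; the 10,000 Mbit/s line rate and reading iperf's KByte as 1024 bytes are assumptions.

```python
# Nominal 10GbE line rate in Mbit/s (raw rate; usable TCP payload is somewhat lower)
LINE_RATE_MBIT = 10_000

# Client-side bandwidth reported by the three iperf runs, in Mbit/s
RUNS_MBIT = {"run 1": 994.0, "run 2": 1040.0, "run 3": 950.0}

# Window iperf reports ("TCP window size: 9.77 KByte"); assumes KByte = 1024 bytes
WINDOW_BYTES = 9.77 * 1024


def utilisation(mbit: float, line_rate_mbit: float = LINE_RATE_MBIT) -> float:
    """Fraction of the nominal line rate that a measured throughput represents."""
    return mbit / line_rate_mbit


def max_rtt_us(window_bytes: float, target_mbit: float) -> float:
    """Largest round-trip time (microseconds) at which one TCP stream with at most
    `window_bytes` in flight could still reach `target_mbit` (throughput <= window / RTT)."""
    window_bits = window_bytes * 8
    seconds = window_bits / (target_mbit * 1e6)
    return seconds * 1e6


if __name__ == "__main__":
    for name, mbit in RUNS_MBIT.items():
        print(f"{name}: {mbit:.0f} Mbit/s = {utilisation(mbit):.1%} of 10 Gbit/s")
    print(f"RTT needed for 10 Gbit/s with this window: "
          f"<= {max_rtt_us(WINDOW_BYTES, LINE_RATE_MBIT):.1f} us")
```

Each run works out to roughly 10% of the nominal rate, and reaching 10 Gbit/s with that window would need a round trip under about 8 µs. If that reasoning holds, rerunning iperf with a larger window (`-w`) or several parallel streams (`-P`) would be one way to see whether the link itself can go faster.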

So networking doesn't seem to be the issue. Next stop, log SSD.

A basic smartctl check gives the following:

```
smartctl 7.2 2021-09-14 r5236 [FreeBSD 13.1-RELEASE-p2 amd64] (local build)
Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       INTEL SSDPED1D480GA
Serial Number:                      PHMB751000N0480DGN
Firmware Version:                   E2010435
PCI Vendor/Subsystem ID:            0x8086
IEEE OUI Identifier:                0x5cd2e4
Controller ID:                      0
NVMe Version:                       <1.2
Number of Namespaces:               1
Namespace 1 Size/Capacity:          480,103,981,056 [480 GB]
Namespace 1 Formatted LBA Size:     512
Local Time is:                      Thu Dec 1 00:01:45 2022 CET
Firmware Updates (0x02):            1 Slot
Optional Admin Commands (0x0007):   Security Format Frmw_DL
Optional NVM Commands (0x0006):     Wr_Unc DS_Mngmt
Log Page Attributes (0x02):         Cmd_Eff_Lg
Maximum Data Transfer Size:         32 Pages

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +    18.00W       -        -    0  0  0  0        0       0

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         2

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        41 Celsius
Available Spare:                    100%
Available Spare Threshold:          0%
Percentage Used:                    0%
Data Units Read:                    5,947,696 [3.04 TB]
Data Units Written:                 33,512,722 [17.1 TB]
Host Read Commands:                 223,213,231
Host Write Commands:                500,078,378
Controller Busy Time:               178
Power Cycles:                       448
Power On Hours:                     2,471
Unsafe Shutdowns:                   210
Media and Data Integrity Errors:    0
Error Information Log Entries:      0

Error Information (NVMe Log 0x01, 16 of 64 entries)
No Errors Logged
```
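The eyeball check on this output can be written as a small Python sketch. It assumes smartctl's human-readable layout (one `Key: value` per line) and uses a short excerpt of the fields above as sample input. The conversion uses the NVMe definition of a "data unit" (1000 × 512 bytes), which is consistent with the bracketed TB figures smartctl prints.

```python
# NVMe reports "Data Units" in thousands of 512-byte units
NVME_DATA_UNIT_BYTES = 1000 * 512


def data_units_to_tb(units: int) -> float:
    """Convert an NVMe 'Data Units Read/Written' counter to decimal terabytes."""
    return units * NVME_DATA_UNIT_BYTES / 1e12


def parse_fields(smartctl_text: str) -> dict:
    """Split 'Key: value' lines into a dict, splitting on the first colon only."""
    fields = {}
    for line in smartctl_text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def looks_healthy(fields: dict) -> bool:
    """Rough check: overall PASSED, no critical warning bits, no media errors."""
    return (
        fields.get("SMART overall-health self-assessment test result") == "PASSED"
        and int(fields.get("Critical Warning", "0xff"), 16) == 0
        and fields.get("Media and Data Integrity Errors") == "0"
    )


# Excerpt of the output above, used as sample input
SAMPLE = """\
SMART overall-health self-assessment test result: PASSED
Critical Warning:                   0x00
Temperature:                        41 Celsius
Media and Data Integrity Errors:    0
"""

if __name__ == "__main__":
    fields = parse_fields(SAMPLE)
    print("healthy:", looks_healthy(fields), "| temperature:", fields["Temperature"])
    print(f"written: {data_units_to_tb(33_512_722):.2f} TB")
```

For anything beyond a quick check, smartctl 7.x can also emit JSON (`smartctl -j`), which is sturdier to consume than scraped text.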

Not hot, not cold and no errors, great. Next, some speed tests.

I ran 3 tests to make sure the results weren't a fluke:

Test 1:

```
/dev/nvd1
        512             # sectorsize
        480103981056    # mediasize in bytes (447G)
        937703088       # mediasize in sectors
        0               # stripesize
        0               # stripeoffset
        INTEL SSDPED1D480GA     # Disk descr.
        PHMB751000N0480DGN      # Disk ident.
        nvme1           # Attachment
        Yes             # TRIM/UNMAP support
        0               # Rotation rate in RPM

Synchronous random writes:
         0.5 kbytes:     28.1 usec/IO =     17.4 Mbytes/s
           1 kbytes:     29.5 usec/IO =     33.2 Mbytes/s
           2 kbytes:     28.6 usec/IO =     68.4 Mbytes/s
           4 kbytes:     27.2 usec/IO =    143.7 Mbytes/s
           8 kbytes:     27.5 usec/IO =    283.8 Mbytes/s
          16 kbytes:     17.0 usec/IO =    917.6 Mbytes/s
          32 kbytes:     41.6 usec/IO =    751.4 Mbytes/s
          64 kbytes:     56.1 usec/IO =   1114.9 Mbytes/s
         128 kbytes:     91.0 usec/IO =   1373.0 Mbytes/s
         256 kbytes:    167.1 usec/IO =   1495.9 Mbytes/s
         512 kbytes:    270.4 usec/IO =   1849.2 Mbytes/s
        1024 kbytes:    474.1 usec/IO =   2109.2 Mbytes/s
        2048 kbytes:    899.6 usec/IO =   2223.1 Mbytes/s
        4096 kbytes:   1743.7 usec/IO =   2294.0 Mbytes/s
        8192 kbytes:   3419.7 usec/IO =   2339.4 Mbytes/s
```

Test 2:

```
/dev/nvd1
        512             # sectorsize
        480103981056    # mediasize in bytes (447G)
        937703088       # mediasize in sectors
        0               # stripesize
        0               # stripeoffset
        INTEL SSDPED1D480GA     # Disk descr.
        PHMB751000N0480DGN      # Disk ident.
        nvme1           # Attachment
        Yes             # TRIM/UNMAP support
        0               # Rotation rate in RPM

Synchronous random writes:
         0.5 kbytes:     28.1 usec/IO =     17.4 Mbytes/s
           1 kbytes:     28.1 usec/IO =     34.7 Mbytes/s
           2 kbytes:     28.4 usec/IO =     68.7 Mbytes/s
           4 kbytes:     25.8 usec/IO =    151.6 Mbytes/s
           8 kbytes:     27.7 usec/IO =    281.7 Mbytes/s
          16 kbytes:     17.0 usec/IO =    916.7 Mbytes/s
          32 kbytes:     39.2 usec/IO =    797.9 Mbytes/s
          64 kbytes:     55.3 usec/IO =   1130.9 Mbytes/s
         128 kbytes:    110.2 usec/IO =   1134.0 Mbytes/s
         256 kbytes:    171.7 usec/IO =   1456.0 Mbytes/s
         512 kbytes:    272.3 usec/IO =   1836.5 Mbytes/s
        1024 kbytes:    476.1 usec/IO =   2100.5 Mbytes/s
        2048 kbytes:    893.5 usec/IO =   2238.4 Mbytes/s
        4096 kbytes:   1738.1 usec/IO =   2301.3 Mbytes/s
        8192 kbytes:   3417.4 usec/IO =   2341.0 Mbytes/s
```

Test 3:

```
/dev/nvd1
        512             # sectorsize
        480103981056    # mediasize in bytes (447G)
        937703088       # mediasize in sectors
        0               # stripesize
        0               # stripeoffset
        INTEL SSDPED1D480GA     # Disk descr.
        PHMB751000N0480DGN      # Disk ident.
        nvme1           # Attachment
        Yes             # TRIM/UNMAP support
        0               # Rotation rate in RPM

Synchronous random writes:
         0.5 kbytes:     27.0 usec/IO =     18.1 Mbytes/s
           1 kbytes:     28.0 usec/IO =     34.9 Mbytes/s
           2 kbytes:     28.4 usec/IO =     68.8 Mbytes/s
           4 kbytes:     25.7 usec/IO =    152.2 Mbytes/s
           8 kbytes:     27.4 usec/IO =    284.7 Mbytes/s
          16 kbytes:     32.6 usec/IO =    479.2 Mbytes/s
          32 kbytes:     23.8 usec/IO =   1314.3 Mbytes/s
          64 kbytes:     55.4 usec/IO =   1127.6 Mbytes/s
         128 kbytes:    111.3 usec/IO =   1123.5 Mbytes/s
         256 kbytes:    167.6 usec/IO =   1491.9 Mbytes/s
         512 kbytes:    268.8 usec/IO =   1860.4 Mbytes/s
        1024 kbytes:    476.0 usec/IO =   2101.0 Mbytes/s
        2048 kbytes:    896.6 usec/IO =   2230.6 Mbytes/s
        4096 kbytes:   1720.2 usec/IO =   2325.3 Mbytes/s
        8192 kbytes:   3419.5 usec/IO =   2339.5 Mbytes/s
```

Great results.
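The output format matches FreeBSD's `diskinfo -wS` synchronous write test, which is presumably what was run. Each throughput figure is simply block size divided by per-write latency. The Python sketch below recomputes that for a few rows of Test 1 and compares the result with 10 Gbit/s expressed in MiB/s. Treating diskinfo's kbytes/Mbytes as KiB/MiB is an assumption (it reproduces the printed numbers), and the 10GbE figure ignores Ethernet/TCP/SMB overhead, so it is an upper bound.

```python
# Selected rows from Test 1: (block size in KiB, latency in microseconds, reported MiB/s)
TEST_1 = [
    (16, 17.0, 917.6),
    (32, 41.6, 751.4),
    (64, 56.1, 1114.9),
    (128, 91.0, 1373.0),
    (8192, 3419.7, 2339.4),
]

# 10 Gbit/s expressed in MiB/s, ignoring all protocol overhead (an upper bound)
TEN_GBE_MIB_S = 10e9 / 8 / 2**20


def mib_per_s(block_kib: float, usec_per_io: float) -> float:
    """Throughput of one synchronous write of `block_kib` KiB every `usec_per_io` us."""
    return (block_kib / 1024) / (usec_per_io * 1e-6)


def smallest_block_reaching(rows, target_mib_s: float):
    """First block size whose recomputed throughput meets the target, else None."""
    for block_kib, usec, _reported in rows:
        if mib_per_s(block_kib, usec) >= target_mib_s:
            return block_kib
    return None


if __name__ == "__main__":
    for block_kib, usec, reported in TEST_1:
        print(f"{block_kib:>5} KiB: computed {mib_per_s(block_kib, usec):7.1f} MiB/s, "
              f"reported {reported}")
    print(f"10GbE ceiling: {TEN_GBE_MIB_S:.1f} MiB/s")
    print("first block size at or above it:",
          smallest_block_reaching(TEST_1, TEN_GBE_MIB_S), "KiB")
```

Recomputed values differ from the reported ones by a few MiB/s because the latencies are rounded to 0.1 µs. The block size at which the Optane crosses the ~1192 MiB/s ceiling varies between runs (128 KiB in Test 1, 256 KiB in Test 2, 32 KiB in Test 3), while writes of 8 KiB and below stay under about 285 MiB/s in all three, so the size of the individual sync writes presumably matters a lot.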

Just to be sure, let's test the local drive in the workstation. It might be as simple as that.

It looks fine.

Adding the drive as a log device to the pool:

I edit the dataset to set `sync` to `always` and `compression level` to `OFF`.
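The two steps above can also be expressed on the command line. Below is a minimal Python sketch that prints the equivalent `zpool`/`zfs` commands and only executes them when `dry_run=False`. The pool name `tank` and dataset `tank/media` are hypothetical placeholders; `nvd1` is the device name from the diskinfo output. On TrueNAS the web UI is generally the recommended way to add vdevs, since it handles disk partitioning itself.

```python
import shlex
import subprocess

# Hypothetical names: substitute the real pool and dataset
POOL = "tank"
DATASET = "tank/media"
LOG_DEVICE = "nvd1"  # the Optane as it appears in the diskinfo output


def slog_setup_commands(pool: str, dataset: str, log_device: str) -> list:
    """CLI equivalent of the steps above: add a log vdev, force sync writes,
    disable compression, then read the settings back."""
    return [
        ["zpool", "add", pool, "log", log_device],
        ["zfs", "set", "sync=always", dataset],
        ["zfs", "set", "compression=off", dataset],
        ["zfs", "get", "sync,compression", dataset],
        ["zpool", "status", pool],
    ]


def run(commands: list, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(slog_setup_commands(POOL, DATASET, LOG_DEVICE))  # dry run by default
```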

Now let's do a file transfer. I used ChoEazyCopy to sync a folder containing a single 26 GB MKV file.

While the transfer was running, I looked at `gstat -p`. It shows that the Optane disk IS being used, but only up to 41% busy (the highest I've seen). I took a screenshot to give an idea of the process.

Robocopy results:

```
               Total    Copied   Skipped  Mismatch    FAILED    Extras
    Dirs :         1         1         1         0         0         0
   Files :         1         1         0         0         0         0
   Bytes :  26.115 g  26.115 g         0         0         0         0
   Times :   0:01:10   0:00:17                       0:00:00   0:00:17

   Speed :           1.601.062.714 Bytes/sec.
   Speed :           91613,547 MegaBytes/min.
   Ended : donderdag 1 december 2022 10:39:16
```
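The robocopy summary is printed in a Dutch locale (`donderdag` is Thursday), so `1.601.062.714` means 1,601,062,714 bytes/s and `91613,547` means 91,613.547. A quick Python sketch converting these figures: the reported speed works out to about 12.8 Gbit/s, which is more than a 10GbE link can carry, so that number probably does not measure time on the wire alone. Averaged over the full 0:01:10 run time it is about 3.2 Gbit/s. Reading `g` as GiB is an assumption.

```python
def parse_nl_number(text: str) -> float:
    """Parse a Dutch-locale number: '.' groups thousands, ',' is the decimal mark."""
    return float(text.replace(".", "").replace(",", "."))


# Figures copied from the robocopy summary above
BYTES_PER_SEC = parse_nl_number("1.601.062.714")
MB_PER_MIN = parse_nl_number("91613,547")
COPIED_GIB = 26.115   # "26.115 g": assumed GiB, printed with a '.' decimal mark
TOTAL_SECONDS = 70    # "Times : 0:01:10" (Total column)

# Reported speed expressed as a line rate
reported_gbit_s = BYTES_PER_SEC * 8 / 1e9
# robocopy's MegaBytes/min appears to use 2**20-byte megabytes
mb_per_min_check = BYTES_PER_SEC * 60 / 2**20
# Average over the whole run, including time not spent copying data
total_gbit_s = COPIED_GIB * 2**30 * 8 / TOTAL_SECONDS / 1e9

if __name__ == "__main__":
    print(f"reported speed:          {reported_gbit_s:.2f} Gbit/s")
    print(f"MB/min cross-check:      {mb_per_min_check:.1f} vs {MB_PER_MIN}")
    print(f"average over total time: {total_gbit_s:.2f} Gbit/s")
```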

Copying the file to the NAS in File Explorer gives the following speed:

It starts off well at about 1 GB/s, but then drops to as low as 550 MB/s.

My understanding is that with `sync` set to `always`, the transfer is forced through the log device. Since the log device is fast enough to handle a large file transfer, sustaining a 10 Gbit transfer should be 'easy'.
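One way to sanity-check that expectation is a simple pipeline model. In ZFS a log device holds the ZIL copy of synchronous writes, while the data itself is still buffered in RAM and written to the pool's main vdevs in transaction groups. A sustained sync write stream therefore passes through the network, the log device and, eventually, the HDD vdevs. The Python sketch below is such a toy model: every number in it is a hypothetical placeholder rather than a measurement of this system, and it ignores the gradual write throttle ZFS applies as dirty data accumulates.

```python
from dataclasses import dataclass


@dataclass
class SyncWritePath:
    """Toy model of a sustained sync write stream into a ZFS pool with a log device.
    Rates in MB/s, buffer in MB. All values are placeholders, not measurements."""
    network_mb_s: float     # what the link and SMB stack deliver
    slog_mb_s: float        # sync write rate the log device sustains for this I/O size
    pool_mb_s: float        # rate at which the main (HDD) vdevs absorb txg flushes
    dirty_buffer_mb: float  # RAM ZFS may hold as not-yet-flushed dirty data

    def burst_rate(self) -> float:
        # Until the dirty-data buffer fills, only the network and ZIL writes gate the stream
        return min(self.network_mb_s, self.slog_mb_s)

    def sustained_rate(self) -> float:
        # Every byte still has to reach the main vdevs eventually
        return min(self.burst_rate(), self.pool_mb_s)

    def burst_seconds(self) -> float:
        # Time until the buffer is full when data arrives faster than the pool drains it
        surplus = self.burst_rate() - self.pool_mb_s
        return float("inf") if surplus <= 0 else self.dirty_buffer_mb / surplus


if __name__ == "__main__":
    path = SyncWritePath(network_mb_s=1100, slog_mb_s=2300, pool_mb_s=500, dirty_buffer_mb=4096)
    print(f"burst {path.burst_rate():.0f} MB/s for ~{path.burst_seconds():.1f} s, "
          f"then {path.sustained_rate():.0f} MB/s")
```

In this model a faster log device never lifts the sustained rate above what the pool's data vdevs can absorb; it only affects how quickly each sync write is acknowledged. Measuring the pool's own sequential write speed would give a real value to plug in for `pool_mb_s`.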