So even if dedup is off for the pool, if there are datasets on it with dedup on, will they share common blocks? And if the pool has dedup on, are all its datasets actually deduped?

Yes and yes.
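To make the "yes and yes" concrete, here is a toy Python model, not ZFS code: one dedup table per pool, consulted only for writes coming from datasets whose `dedup` property is on. The `Pool` class and its method are hypothetical, and real ZFS tracks much more per table entry. A dataset that doesn't set `dedup` inherits it from its parent, which is why enabling it on the pool's root dataset covers every child.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Pool:
    """Toy model (hypothetical): one dedup table (DDT) shared by all datasets."""
    ddt: dict = field(default_factory=dict)  # checksum -> reference count
    allocated: int = 0                        # physical blocks on disk

    def write_block(self, data: bytes, dedup: bool) -> None:
        if not dedup:
            # dedup=off: always allocate a new block; the DDT is never consulted.
            self.allocated += 1
            return
        key = hashlib.sha256(data).hexdigest()
        if key in self.ddt:
            self.ddt[key] += 1   # duplicate from any dedup=on dataset: bump refcount
        else:
            self.ddt[key] = 1    # first copy: allocate it and record it in the DDT
            self.allocated += 1


pool = Pool()
block = b"same record contents"
# Two dedup=on datasets and one dedup=off dataset write the same block.
for dataset_dedup in (True, True, False):
    pool.write_block(block, dedup=dataset_dedup)

print(pool.allocated)  # 2
```

The two dedup=on writes share one physical block; the dedup=off write gets its own.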

So replicating a dataset (not the entire pool) to another pool may lead to more or less allocated space, depending on which blocks actually exist on both pools? This leads to a conclusion: we can't make an exact estimate of how much space we would need on the target pool. If the target pool already has most of the blocks, the space actually allocated for the replica will be smaller. If the source dataset was deduplicated against blocks belonging to other datasets, and those datasets are not replicated, its replica will be bigger on the target device than the pool statistics showed for the source dataset. Am I correct here?

Yup.
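A rough way to see why the replica size depends on the target: only source blocks whose checksums the target pool doesn't already hold need new space. This toy sketch assumes a dedup=on receiving dataset and treats blocks as plain checksum strings; `replica_growth` is a hypothetical helper, not a ZFS tool.

```python
def replica_growth(source_blocks: list[str], target_ddt: set[str]) -> int:
    """New physical blocks a replicated dataset needs on a dedup=on target.

    source_blocks: checksums of every block the dataset references
                   (duplicates included).
    target_ddt:    checksums already present in the target pool's dedup table.
    """
    needed = set(source_blocks) - target_ddt  # unique blocks the target lacks
    return len(needed)


source = ["a", "a", "b", "c"]
print(replica_growth(source, set()))       # 3  (empty target)
print(replica_growth(source, {"a", "b"}))  # 1  (target already holds most blocks)
```

The same dataset costs 3 blocks on one target and 1 on another, which is why no single number from the source pool predicts the target's usage.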

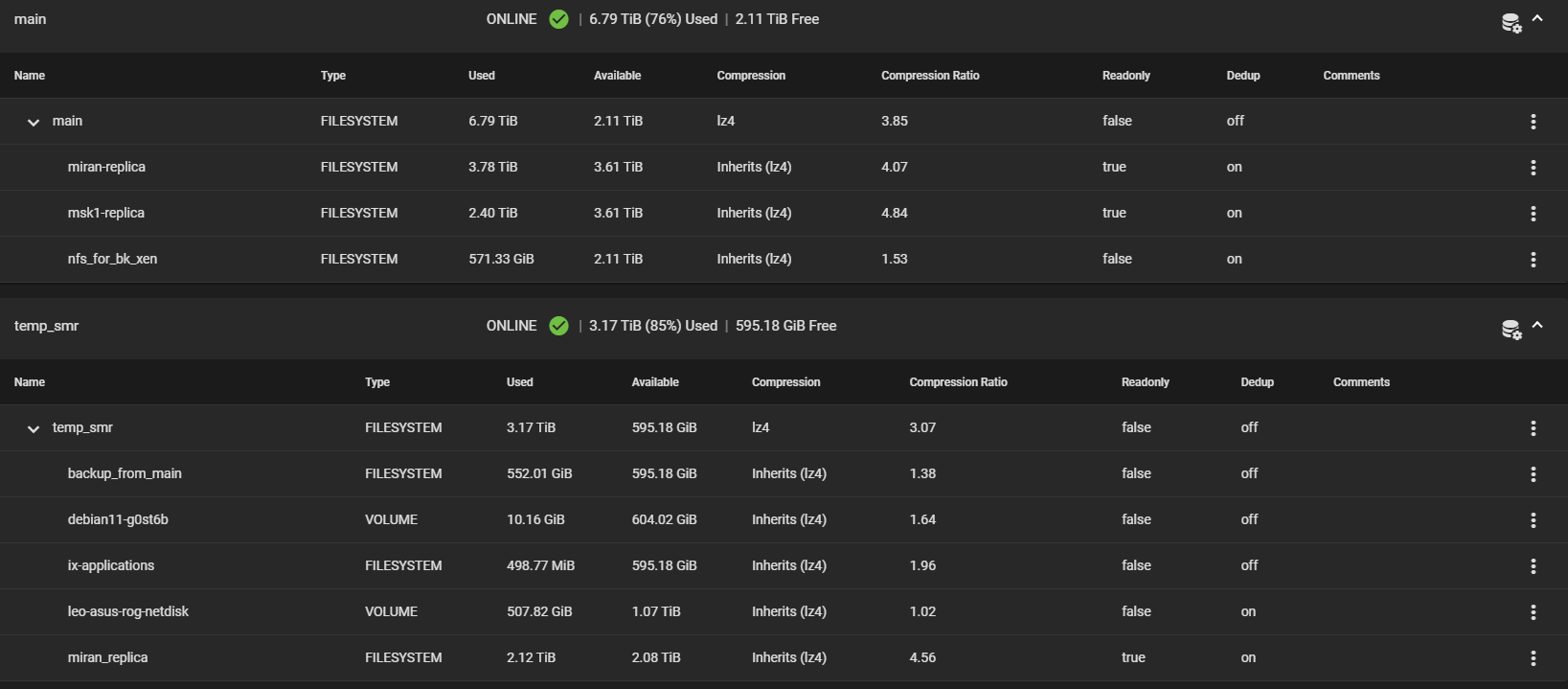

Apart from theoretical interest, I have a practical task. We have 3 TrueNAS servers in separate datacenters that we use as storage for our backups (let's say they are 3 Tb each). We are setting up an off-site server which should hold replicas of all 3 servers. How large should its storage be? Now I see that if we replicate all pools, the target server should keep all 3 replicas on one pool for dedup to work across all three replicas. But the resulting storage should not be less than 9 Tb, in case dedup between datasets turns out not to be effective.

There's that pesky Tb again (Tb is terabits; disk capacity is measured in TB, terabytes). Again, it's a pet peeve; don't take it personally.

It's not clear that your off-site server should only have one pool. It may be smarter, from a data segregation perspective, to maintain three separate pools on that server, even though dedup would then only work within each pool. That really depends on your use case, but it's what I would suggest in these kinds of applications. However, if you are limited to only one set of disks, that kind of makes the decision for you.

9TB would, for this example, be your safe lower limit. However, you may well be able to go lower if you can characterize your data well enough to be confident that your dedup ratio between servers will be high. For example, if you're doing full file-system backups of Windows machines, you can be pretty dang confident that the data is highly redundant between servers.

Unfortunately, I know of no easy way to calculate this, other than just testing it.
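Once a test run gives you a measured ratio, the sizing arithmetic itself is simple. This hypothetical helper divides the combined logical size by an assumed cross-server dedup ratio; a ratio of 1.0 (no savings) reproduces the 9TB worst case. It ignores free-space headroom and any other overhead.

```python
def required_capacity_tb(server_sizes_tb: list[float], dedup_ratio: float = 1.0) -> float:
    """Estimate one target pool's size for replicas of several servers.

    dedup_ratio: assumed logical/physical ratio across all replicas
                 (1.0 = dedup saves nothing, i.e. the safe lower limit).
    """
    if dedup_ratio < 1.0:
        raise ValueError("a dedup ratio below 1.0 is not meaningful")
    return sum(server_sizes_tb) / dedup_ratio


print(required_capacity_tb([3, 3, 3]))                   # 9.0
print(required_capacity_tb([3, 3, 3], dedup_ratio=3.0))  # 3.0
```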

And another question related to replication, this time involving snapshots: do snapshots also share common deduplicated blocks with their parent dataset or pool?

Yes. A good way to think of snapshots is as pointers to a point-in-time configuration of the dataset. When ZFS writes updated data, it always performs a copy-on-write: it reads the existing data, merges it with the new data, and writes the result to a new location on disk, leaving the original data untouched. Then it can mark the original data as unneeded (free to be overwritten). However, if there's a snapshot, that snapshot continues to point to the original data, so the original data is left alone and not marked as overwritable.
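The pointer behaviour can be sketched with a reference-counted block map. This is a simplified toy model (hypothetical `CowDataset` class, whole blocks only, no dedup): every write lands in a fresh block, and an old block is freed only once neither the live dataset nor any snapshot points to it.

```python
import itertools
from collections import Counter


class CowDataset:
    """Toy copy-on-write dataset; snapshots are frozen copies of the block map."""

    def __init__(self) -> None:
        self._ids = itertools.count()            # source of fresh block ids
        self.live: dict[int, int] = {}           # offset -> block id (live view)
        self.snapshots: list[dict[int, int]] = []
        self.refs: Counter = Counter()           # block id -> pointers to it

    def write(self, offset: int) -> None:
        new = next(self._ids)                    # COW: never overwrite in place
        self.refs[new] += 1
        old = self.live.get(offset)
        self.live[offset] = new
        if old is not None:
            self._release(old)

    def _release(self, block: int) -> None:
        self.refs[block] -= 1
        if self.refs[block] == 0:
            del self.refs[block]                 # no pointers left: space is free

    def snapshot(self) -> None:
        snap = dict(self.live)                   # copies pointers, not data
        self.snapshots.append(snap)
        for block in snap.values():
            self.refs[block] += 1

    def allocated_blocks(self) -> int:
        return len(self.refs)


ds = CowDataset()
ds.write(0)
ds.write(0)                   # old block freed: nothing else points to it
ds.snapshot()
ds.write(0)                   # old block kept: the snapshot still points to it
print(ds.allocated_blocks())  # 2
```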

A consequence of this behavior is that snapshots and deduplication can cause sudden and unexpected ballooning in your dataset. For example, imagine that I have a dozen Windows clients that back up to a server with deduplication. Great, now I'm basically using one client's worth of space to store a dozen clients. But if each client gets a snapshot at a different point in time (i.e., with different files), then the blocks that differ between those snapshots can't be deduplicated, and in the worst case I'm suddenly dealing with 12x that amount of data. Definitely not insurmountable, but something to be mindful of.
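A back-of-the-envelope model of that ballooning, under deliberately simplified assumptions: every client starts from the same image of `base_blocks` blocks, and each changed block has content unique to that client. The function name and the numbers are hypothetical.

```python
def physical_blocks(clients: int, base_blocks: int, changed_per_client: int) -> int:
    """Unique blocks a dedup=on pool stores for one snapshot per client."""
    unique = set()
    for c in range(clients):
        for b in range(base_blocks):
            if b < changed_per_client:
                unique.add(("client", c, b))  # diverged block: unique to client c
            else:
                unique.add(("base", b))       # identical across clients: deduped
    return len(unique)


for changed in (0, 10, 100):
    print(changed, physical_blocks(12, 100, changed))
```

With 12 clients of 100 blocks each, no divergence stores 100 blocks, 10 changed blocks per client stores 210, and full divergence stores 1200, the 12x case.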

A good solution to this problem is to use a fast server to store the most recent week or so of data (maximizing the space savings from small snapshots and deduplication, and therefore saving money on fast storage), and then use a large, slow server to store older backups (maximizing the cost efficiency of huge storage arrays).
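One way to express that split as a retention rule, as a hypothetical sketch: snapshots from the last week stay on the fast server, and anything older belongs on the slow one. The seven-day window and the function name are assumptions based on the "week or so" above.

```python
from datetime import datetime, timedelta


def split_by_tier(snapshot_times, now, fast_window=timedelta(days=7)):
    """Split snapshot timestamps into (fast tier, slow tier) lists by age."""
    fast = [t for t in snapshot_times if now - t <= fast_window]
    slow = [t for t in snapshot_times if now - t > fast_window]
    return fast, slow


now = datetime(2024, 1, 31)
daily = [now - timedelta(days=d) for d in range(30)]  # 30 daily snapshots
fast, slow = split_by_tier(daily, now)
print(len(fast), len(slow))  # 8 22
```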