Dear all,

I'm afraid I'm fairly new to FreeNAS management and configuration, so my questions may reflect that, but we have to start somewhere, right?

My configuration is FreeNAS 11.1-U5 installed on a Supermicro server with two volumes configured.

The volume with the issue is a raidz3-0 volume of 7 HDDs of 6 TB each (18.3 TiB used and 16.8 TB available).

This volume is used as an iSCSI target to back up a few VMs under a 10-year retention policy, and it is currently filled nearly to maximum capacity (2 TB free).

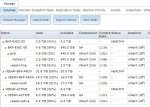

To extend the storage, we bought 4 HDDs of 4 TB each, and my colleague added them to the volume as stripes, which gives the layout shown in image 1.

After that, because he was out of ideas on how to go forward, I took over the case and am now trying to put the configuration right.

Could you please tell me first whether it's possible to safely extend an existing RAIDZ3 volume of 7 HDDs with 4 HDDs of 4 TB?

If so, is it possible to keep the current configuration or to correct it in some way? I'm not sure stripes are a safe way to add space to a volume, because they are the same as RAID 0, right? Is there a way to remove the striped hard drives without destroying the whole configuration and then configure them correctly, or should I accept that those 4 HDDs are lost to me for good and buy X other HDDs to extend the volume?

Any pointers on how to solve this mess would be greatly appreciated.

Thanks in advance for your help.

Best regards,

Laurent
