- Enterprise-Grade Storage Appliances

- Business and Mission-Critical Use

- Reliability, Data Protection, Performance

- Professional Enterprise Support

TrueNAS Enterprise Overview

Enterprise Appliances and Support

TrueNAS® Enterprise systems build on the data protection, performance, and efficiency of TrueNAS with enterprise-grade hardware, additional features, and around-the-clock support. With flexible choices including high-availability and all-flash configurations, TrueNAS Enterprise appliances integrate into any environment and take the guesswork and worry out of storage and data management.

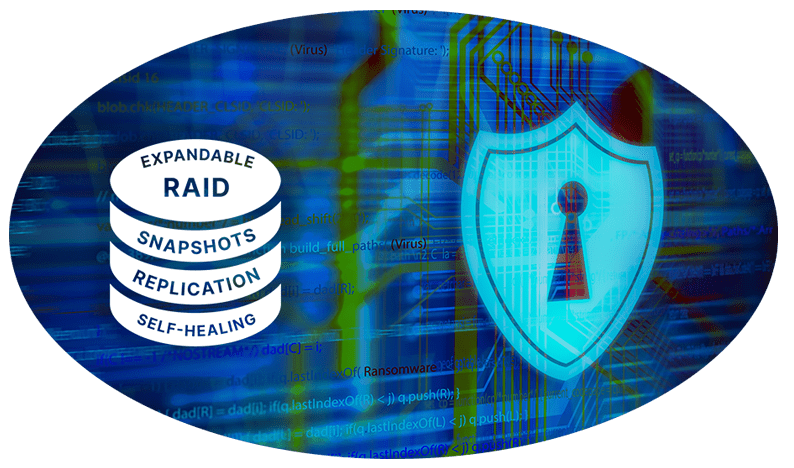

The Ultimate Data Protection & Storage Optimization

Built on the powerful OpenZFS file system, TrueNAS Enterprise comes with integrated data protection features including copy-on-write and data integrity checks to prevent corruption. Built-in RAID protection, unlimited snapshots, and resilient replication protect your data and facilitate recovery even in the face of hardware failure or malware. Storage optimization features like advanced compression, caching, and thin/thick provisioning maximize storage efficiency.

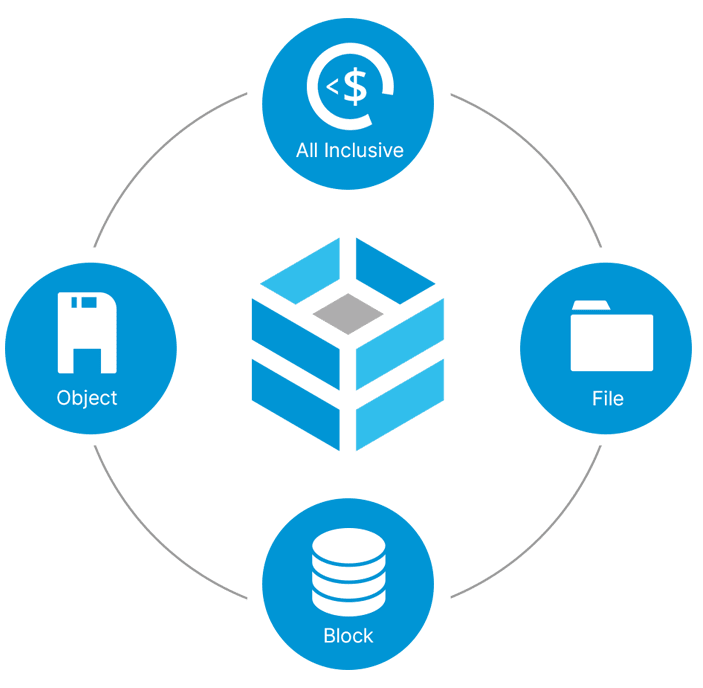

True Data Freedom

Free your data and your budget from proprietary systems and costly vendor lock-in. With our all-inclusive licensing model, you pay one price for all the features with no hidden fees to drive up costs. Available in all-flash or hybrid disk/flash configurations to meet any performance or capacity need, TrueNAS can be deployed as both a NAS and a SAN and supports block, file, and object protocols.

Flexible & Future-Proof

Whether you need a compact system for a remote office or a high-performance, rack-scale solution, there is a TrueNAS Enterprise system to fit your needs. Grow a system from a few terabytes to over 25 petabytes on a single head unit. Scalable performance can be tuned to fit the specific needs of your workload or application. Easily integrate TrueNAS into any environment with built-in support for all major hypervisors and cloud backup services. TrueNAS Enterprise is flexible storage that adapts to your ever-changing infrastructure requirements.

When Downtime is Not an Option

When your business can’t afford to go offline, a TrueNAS system with high availability has your back. Redundant controllers and full redundancy of all active components provide simple serviceability and maximize uptime for mission-critical environments. In the unlikely event of a failover, all services automatically resume on the second controller for uninterrupted access.

US-Based Support

Every TrueNAS Enterprise system is fully backed by our dedicated and top-rated professional support team. Enterprise support services with 24×365 coverage and onsite support options are backed by a global spares network to ensure international users receive the same great service.

Systems

TrueNAS F-Series

Ultra-Performance & High-Density NVMe Flash Storage

Designed for maximum performance and high-density applications, the F-Series is our fastest storage available. With built-in high availability and NVMe SSDs for the fastest possible throughput, the TrueNAS F-Series safeguards data for mission-critical operations while keeping data operations moving as fast as possible.

TrueNAS M-Series

When you need to balance performance, capacity, and reliability, look no further than the TrueNAS M-Series. Backed by NVDIMM and NVMe technology, the M-Series is capable of bandwidth up to 10 GB/s and can grow beyond 20 PB in a single rack, enough to support over 10,000 VMs.

TrueNAS H-Series

Offering entry-level storage with high availability for small and medium-sized businesses, the TrueNAS H-Series is a compact storage appliance that can deliver speeds over 2 GB/s and scale up to 2.5 PB of raw capacity in 6RU. With high-availability options and top-to-bottom data protection, the entry-level H-Series ensures maximum uptime while providing the lowest Total Cost of Ownership (TCO).

TrueNAS R-Series

Configurable Value-Oriented Storage Lineup

The TrueNAS R-Series offers a selection of flexible configurations designed for various applications and use cases. Available in both all-flash and hybrid models, these single-controller appliances provide excellent storage density and performance. The TrueNAS R-Series can be installed with TrueNAS CORE, Enterprise, or SCALE.

TrueNAS Enterprise: Features

| Multi-Systems | TrueCommand, RBAC, Auditing | Single Sign-on, Dataset Management | Alerting, Reporting, Analytics | TrueCommand Enclosure Views, TrueCommand 2.3 SCALE UI (22.12) |
|---|---|---|---|---|
| Administration | Web UI, SNMP, Syslog | REST API, WebSockets API | NetData (Plugin), Reports | vCenter Plugin, API ACLs and Rootless Admin (22.12) |
| Systems Utilities | Tasks, Cron Jobs, Scripts | In-Service Updates | Alerting, Email, Support | Proactive Support Monitoring |
| Clients and Applications | Windows, macOS, Linux, UNIX, iOS, Android Clients | Many applications via SMB, NFS, or iSCSI | Integrated applications via ZFS and Containers / VMs | GlusterFS client (22.12) |
| Application Services | FreeBSD Jails or VMs (13.0), Windows, Linux, or FreeBSD, and PCIe pass-through (22.12) | Plex, Asigra, Iconik, NextCloud, other Plugins (13.0) | Kubernetes, Docker Containers - App Catalogs (Helm charts), GPU sharing (22.12) | OverlayFS, Alder Lake GPU, GeForce 30xx GPUs, Bulk App Updates; VMs: USB pass-through, CPU Pinning (22.12) |
| Directory Services | Active Directory, 2-Factor | Local Users and Groups | NIS, LDAP, Kerberos | Built-in Administrators (22.12) |
| Storage Services | File: SMB v1/v2/v3, NFS v3/v4, AFP, FTP, WebDAV, rsync, GlusterFS (22.12) | Block: iSCSI, OpenStack Cinder, VAAI, Fibre Channel (13.0) | Object: S3-Compliant API, MinIO clustering, Multitenancy, Cloudsync | ALUA, CSI (iSCSI, Gluster, SMB, NFS) (22.12) |
| Data Management | Unlimited Snapshots, Pool checkpoints | Space-efficient Clones | Replication: Remote, Local, Auto-resume, to Linux ZFS | Improved Storage UI (22.12) |
| Data Protection | Accelerated Copy-on-Write, Multi-Copy Metadata | Built-in RAID: Single/Dual/Triple Parity, Mirrors, Fast Resilvering, Fast Boot | Self-healing Checksums, Background Scrubbing | iX-Storj Distributed Storage |
| Data Reduction | Thin/Thick Provisioning | In-line Adaptive Compression | Clones, Deduplication, Trim | |
| Data Acceleration | All Flash, Fusion Pools, Metadata on Flash | Read Cache (ARC/L2ARC): RAM/Flash | Write Cache (SLOG/ZIL): Flash | HA NVDIMM, Dual Port SAS/NVMe (22.12) |
| Networking | IPv4, v6: 1-100GbE, DHCP | LAGG, VLANs | Jumbo Frames, TCP options | Fibre Channel (8-32Gb) (13.0) |
| Data Security | Self-Encrypted Drives (TCG Opal), Dataset Encryption | Encrypted Replication, WireGuard, OpenVPN | ACLs, IP Filtering | KMIP, SEDs, FIPS 140-3 Validated Encryption (22.12) |
| Foundation | System logging, NTP | FreeBSD, Boot Management, SSH, Local Jails, Bhyve VMs (13.0) | Debian, Boot Management, SSH, Kubernetes, KVM (22.12) | Performance Autotune |
| High Availability | Fast ZFS Replication | Client-based Mirroring | Dual Controller High Availability | 10-100 GbE Clustering, VLANs or LAGG (22.12) |
| Hardware Management | IPMI Remote Management | SAS JBODs, Global Spares | SMART, SSD Wear Monitoring | Visual Enclosure Management (GUI) |
| Technical Support | Enterprise Support: Up to 24x7 | | | |