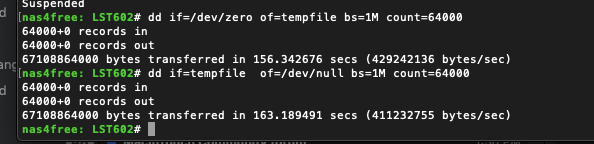

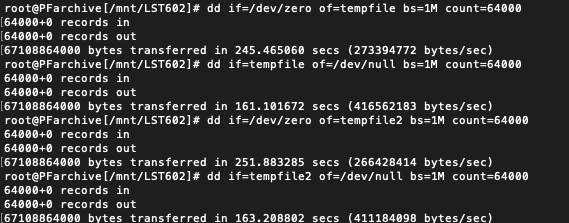

I recently switched from NAS4Free to FreeNAS and noticed that my pools' write speeds (a 4-drive RAIDZ in this specific instance) differ dramatically between the two operating systems: ~430 MB/s writes in NAS4Free vs. ~270 MB/s in FreeNAS. The hardware is identical in both setups; I simply created a new FreeNAS SSD boot drive and booted from it. I also switched a second system to FreeNAS and am seeing the same issue there. (I haven't tested that one as thoroughly, but its pool write speeds are definitely slower.)

NOTE: I want to stay with FreeNAS, and I realize this post may get heated. I'd ask that responses aim for a technical answer so I can keep using FreeNAS. I do need fast write speeds on these pools because I use these drives to capture video and images from a high-speed film scanner. When the pools slow down, the scanner automatically slows down as well, which is actually how I discovered the issue.

Hardware:

- Xeon E3-1230 v2, 3.3 GHz
- Supermicro X9SCM
- 32 GB ECC RAM
- 2x LSI 9201-16i, FW 20.00.01.00, IT mode: 30 drives directly attached to these cards
- 1x LSI 9207-8e, FW 20.00.01.00, IT mode, with two external SAS cables:
  - one to an Intel RESCV360JBD expander: 20 drives and 10 open slots
  - one to an external 4-drive bay enclosure

Software:

- NAS4Free: 11.0.0.4 Sayyadina (4283)
- FreeNAS: 11.2-U4.1

Pool in question:

- RAIDZ of 4x 2 TB drives, 6 TB usable

- No compression in either OS

- No encryption

- No separate log device (SLOG) or L2ARC on any pool

TESTS and NOTES:

- To isolate the drives and take the network out of the picture, I SSHed into the system and ran a dd test writing a roughly 64 GB file (I am dealing with large video files): `dd if=/dev/zero of=tempfile bs=1M count=64000`. The images above are from this test, and the results are consistently reproducible.

- I tried the various "sync" options on the pools in the FreeNAS GUI, but they didn't seem to affect the test results.
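One way to make the dd comparison above easier to repeat on both systems is to wrap it in a small script and rerun it under each ZFS `sync` setting. This is a minimal POSIX `sh` sketch, not the exact procedure behind the screenshots; the function names `write_test` and `sync_sweep`, the file name `writetest.sh`, and the dataset/path arguments are all hypothetical. It runs `sync` after `dd` so any tail end of the write still buffered in RAM is included in the timing, and it only uses zeroes as the payload because compression is off on this pool (with compression enabled, ZFS stores all-zero blocks so cheaply that `/dev/zero` would overstate throughput).

```shell
#!/bin/sh
# Hypothetical helpers for repeating the pool write test; not FreeNAS tools.

# write_test DIR [SIZE_MIB]
# Writes SIZE_MIB MiB of zeroes (default 64000) into DIR with dd, runs `sync`
# so data still buffered in RAM reaches the pool, then prints the average rate.
# Zeroes are only a fair payload because compression is off on the pool.
write_test() {
    dir=$1
    size_mib=${2:-64000}
    file="$dir/ddtest.$$"

    start=$(date +%s)
    dd if=/dev/zero of="$file" bs=1M count="$size_mib" 2>/dev/null || {
        rm -f "$file"
        return 1
    }
    sync
    end=$(date +%s)

    # date +%s has one-second resolution; avoid dividing by zero on tiny runs.
    elapsed=$((end - start))
    [ "$elapsed" -gt 0 ] || elapsed=1
    echo "wrote $size_mib MiB in $elapsed s: $((size_mib / elapsed)) MiB/s"
    rm -f "$file"
}

# sync_sweep DATASET DIR [SIZE_MIB]
# Runs write_test once per value of the ZFS `sync` property on DATASET
# (DIR must live on that dataset), then sets the original value back.
sync_sweep() {
    dataset=$1
    target=$2
    orig=$(zfs get -H -o value sync "$dataset") || return 1
    for mode in standard always disabled; do
        zfs set sync="$mode" "$dataset" || return 1
        printf 'sync=%s: ' "$mode"
        write_test "$target" "${3:-64000}"
    done
    zfs set sync="$orig" "$dataset"
}
```

For example, from `sh`, source the file with `. ./writetest.sh` and run `sync_sweep tank/scratch /mnt/tank/scratch` (a hypothetical dataset and mountpoint). Whole-second timing is coarse for short runs but adequate for a 64 GB write. Note that `sync_sweep` writes the original value back as a local property, even if it was previously inherited from a parent dataset.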
