Krautmaster (Explorer, joined Apr 10, 2017, 81 messages)

Dear all,

I have noticed a few threads mentioning odd behaviour with ZFS and deletion, but so far I can't get to the bottom of it.

What I have and what I did:

-> FreeNAS 11.2, latest version

-> backed up several TB from one RaidZ1 pool to a second one

-> the backup pool was reported as full, so I deleted several TB from it. The "rm" command took ages to delete the folder, so I destroyed the datasets instead:

2019-09-24.00:03:32 zpool set cachefile=/data/zfs/zpool.cache RaidZWDGreen [user 0 (root) on ]

2019-09-26.07:44:06 zfs destroy -r RaidZWDGreen/ncdata [user 0 (root) on freenas.local]

2019-09-26.07:45:58 zfs destroy -r RaidZWDGreen/cloud [user 0 (root) on freenas.local]

2019-09-26.07:47:36 zfs destroy -r RaidZWDGreen/Software [user 0 (root) on freenas.local]

2019-09-27.07:26:10 zfs destroy -r RaidZWDGreen/Backup [user 0 (root) on freenas.local]

2019-09-27.13:18:14 zpool import -c /data/zfs/zpool.cache.saved -o cachefile=none -R /mnt -f 4492606138290332196 [user 0 (root) on ]

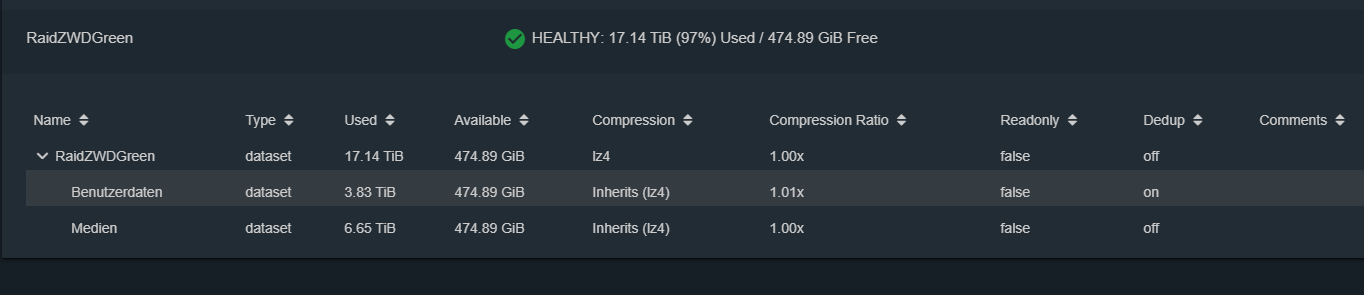

Anyhow, the free space is still far less than it should be, given the pool size and the usage.

RaidZWDGreen 17.1T 475G 166K /RaidZWDGreen

RaidZWDGreen/Benutzerdaten 3.83T 475G 3.83T /RaidZWDGreen/Benutzerdaten

RaidZWDGreen/Medien 6.65T 475G 6.65T /RaidZWDGreen/Medien

17 TB, only about 10.5 TB used by the two remaining datasets, and just 475 GB free? I understand it may take a while to free several TB of data, but surely not several days?
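To make the mismatch concrete, here is the arithmetic as a small sketch. It assumes the listing above is default `zfs list` output, i.e. the columns are NAME, USED, AVAIL, REFER, MOUNTPOINT; the variable names are only for illustration.

```python
# Figures copied from the listing above, in the units zfs list printed them (T).
# Assumption: the first numeric column is USED, as in default `zfs list` output.
pool_used = 17.1
children_used = {
    "RaidZWDGreen/Benutzerdaten": 3.83,
    "RaidZWDGreen/Medien": 6.65,
}

# Space that the two remaining datasets account for.
accounted = sum(children_used.values())

# Space charged to the pool that belongs to neither remaining dataset.
unaccounted = pool_used - accounted

print(f"accounted for by remaining datasets: {accounted:.2f}T")
print(f"used but not in any listed dataset:  {unaccounted:.2f}T")
```

So roughly 6.6T is reported as used without belonging to either of the remaining datasets.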

How can that be? And no, there are no snapshots that I know of. Thanks for your help.

Edit: By the way, it would be helpful if I could get one of the destroyed datasets restored, as I wanted to check something in there. Is that possible? The nfFx import did not help. But since the space still seems to be in use after destroying the datasets, might the data still be there?
