Mastakilla

Patron | Joined: Jul 18, 2019 | Messages: 203

Hi everyone,

As I was trying to figure out which datasets, zvols and shares I should create on my home FreeNAS server, I first wanted a better idea of how each option performs. My knowledge of these options is still fairly limited, so I'm sharing my results: others can tell me if I'm doing something wrong or sub-optimal, and perhaps my experiences will be useful to others too.

My FreeNAS server should not be CPU-bound (AMD Ryzen 3600X), and the network (Intel X550, 10GbE) should not be much of a bottleneck either. I have a single pool of 8x 10TB drives in RAIDZ2, 32GB of RAM, and no L2ARC or SLOG. For more details, see my signature.
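To put rough numbers on the pool and the network ceiling, here is a back-of-the-envelope calculation. It is only a sketch under stated assumptions: "10TB" means 10 × 10^12 bytes, RAIDZ2 costs exactly two disks' worth of space, and ZFS metadata, padding, slop space and TCP/SMB/NFS protocol overhead are all ignored, so real capacity and throughput will come out lower.

```python
# Idealized figures for an 8x 10TB RAIDZ2 pool behind a 10GbE link.
# Assumptions (not measurements): decimal TB drives, parity overhead of
# exactly two disks, no ZFS metadata/padding and no protocol overhead.

DISKS = 8
DISK_BYTES = 10 * 10**12      # one "10TB" drive in decimal bytes
PARITY_DISKS = 2              # RAIDZ2 survives two failed disks

LINK_BITS_PER_S = 10 * 10**9  # 10GbE nominal line rate


def raidz_usable_bytes(disks: int, disk_bytes: int, parity: int) -> int:
    """Idealized usable capacity: number of data disks times disk size."""
    return (disks - parity) * disk_bytes


def link_bytes_per_s(bits_per_s: int) -> float:
    """Nominal link rate in bytes per second (8 bits per byte)."""
    return bits_per_s / 8


usable = raidz_usable_bytes(DISKS, DISK_BYTES, PARITY_DISKS)
wire = link_bytes_per_s(LINK_BITS_PER_S)

print(f"usable ~ {usable / 10**12:.0f} TB = {usable / 2**40:.2f} TiB")
print(f"10GbE ceiling ~ {wire / 10**6:.0f} MB/s = {wire / 2**20:.0f} MiB/s")
```

This prints about 60 TB (54.57 TiB) of idealized space and a 1250 MB/s (1192 MiB/s) wire ceiling, which is the upper bound any SMB or NFS benchmark over this link can approach.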

I ran all benchmarks from my Windows 10 1909 desktop (Ryzen 3900X, 32GB RAM, Intel X540 NIC, NVMe SSD), which is connected directly to the FreeNAS server (no router in between).

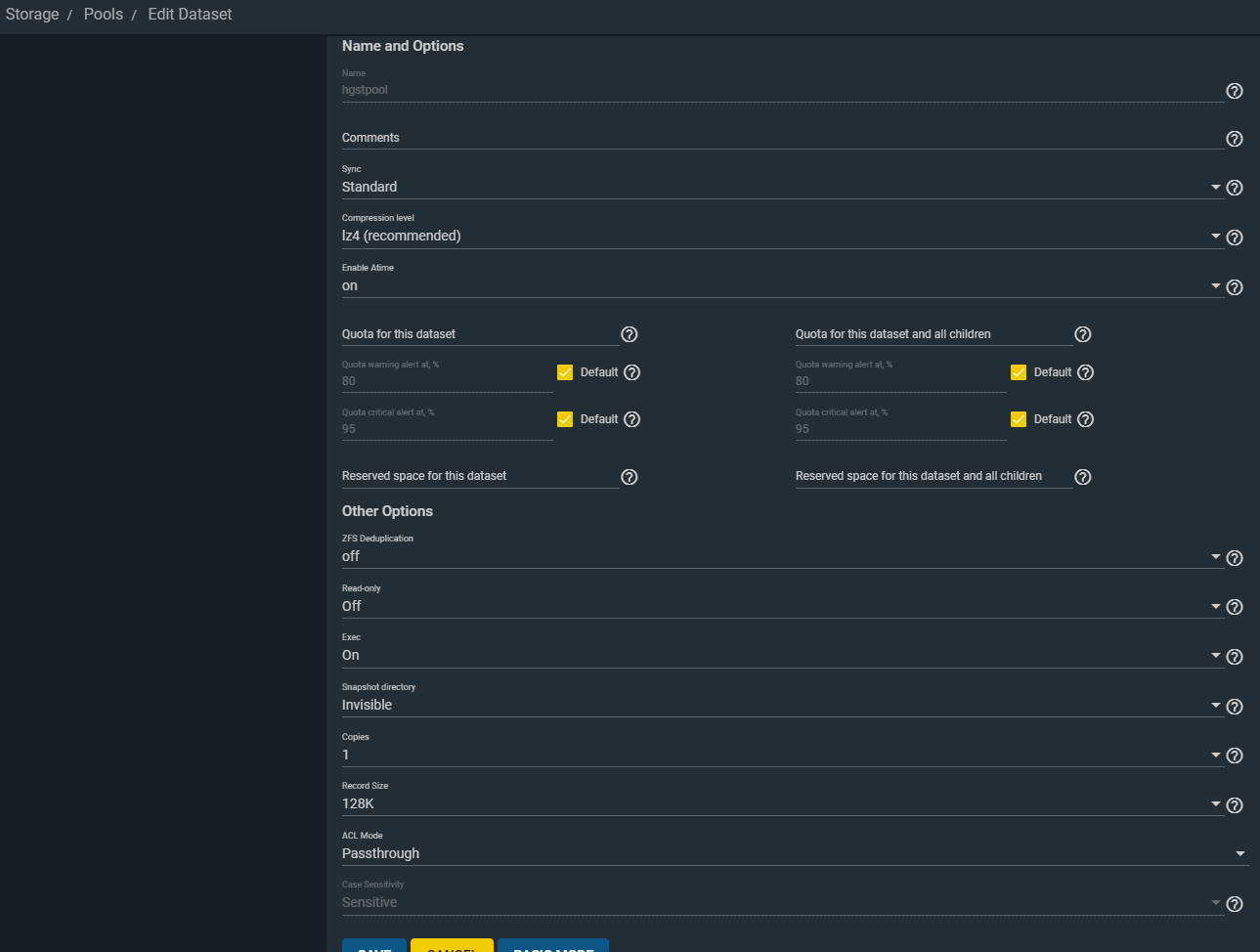

Config - Pool

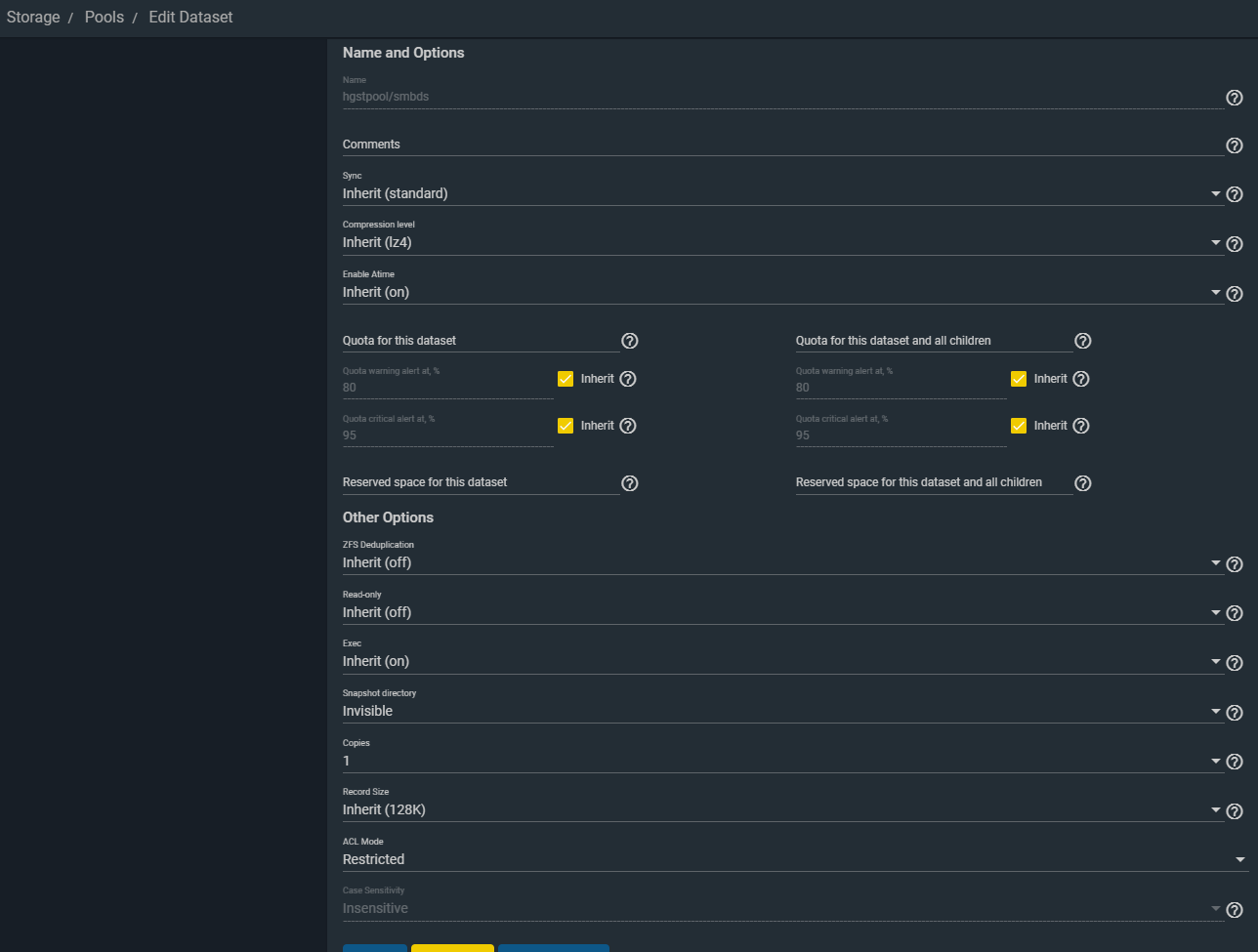

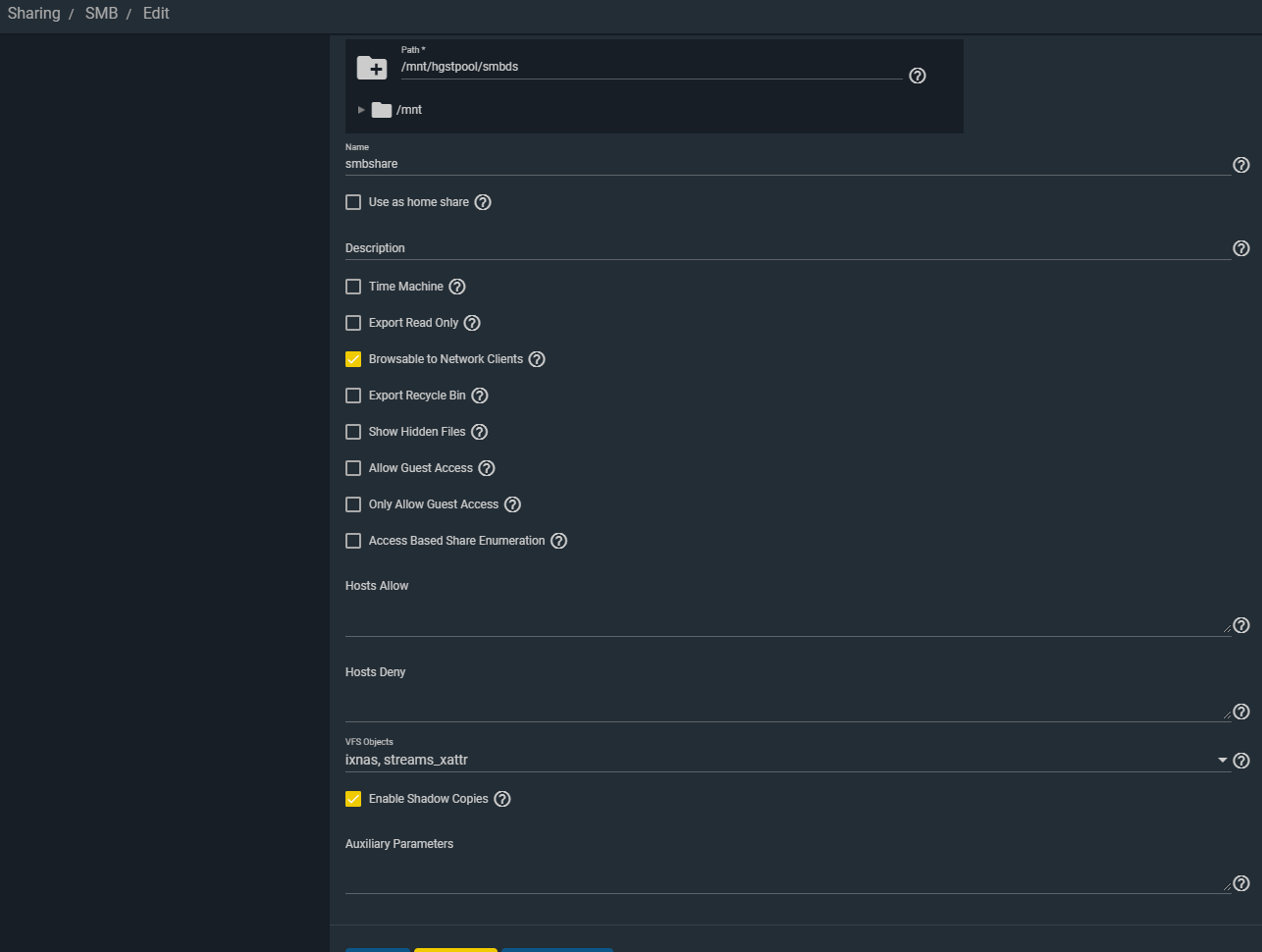

Config - SMB

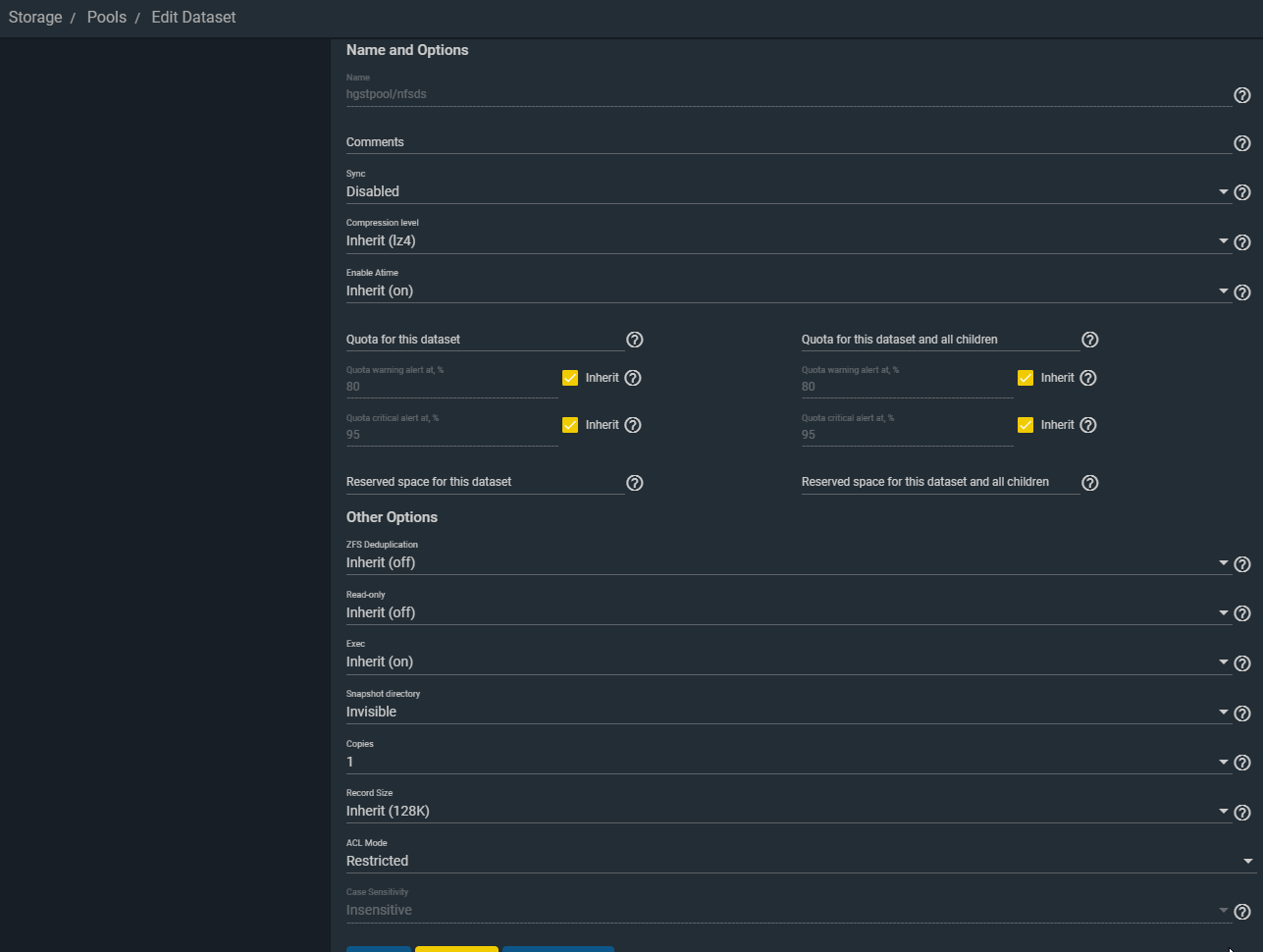

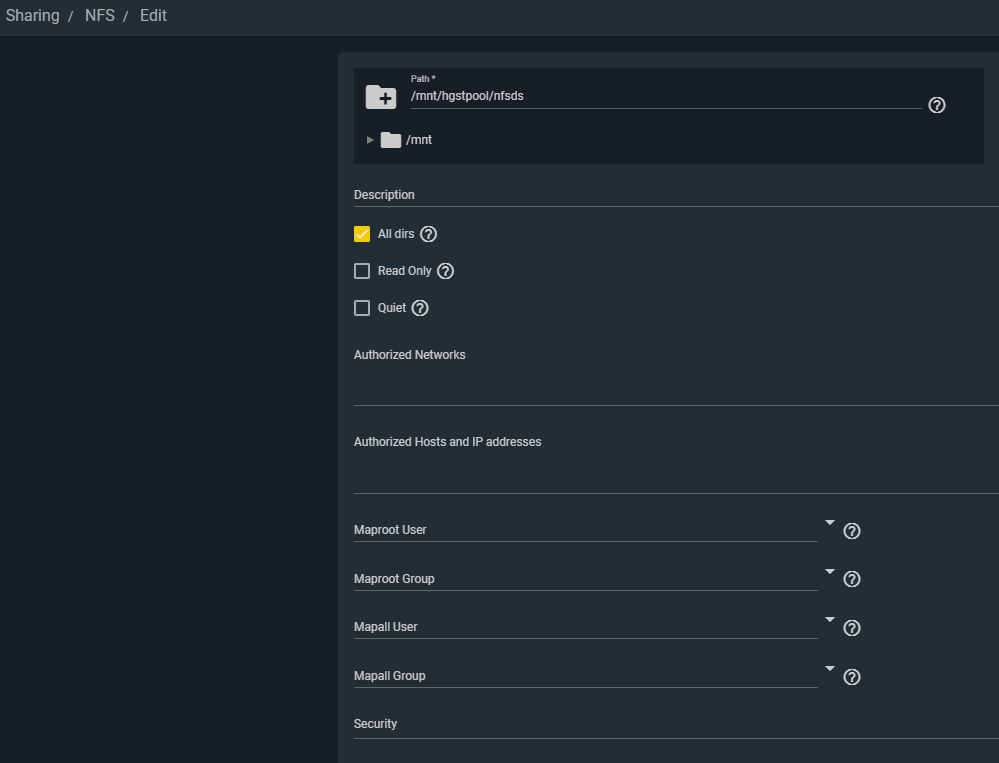

Config - NFS

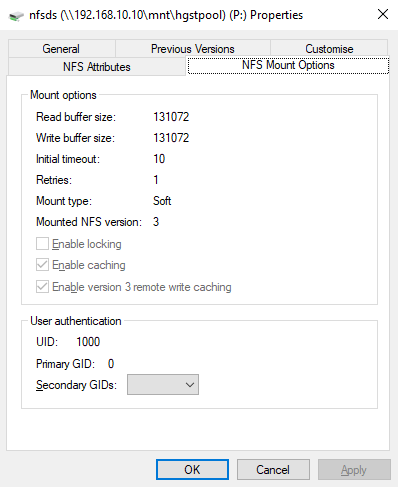

```
C:\Users\m4st4>mount

Local    Remote                                 Properties
-------------------------------------------------------------------------------
p:       \\192.168.10.10\mnt\hgstpool\nfsds     UID=1000, GID=0
                                                rsize=131072, wsize=131072
                                                mount=soft, timeout=10.0
                                                retry=1, locking=no
                                                fileaccess=755, lang=ANSI
                                                casesensitive=no
                                                sec=sys
```
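To compare the NFS mount properties between test runs, a small sketch can pull the key=value pairs out of the Windows `mount` output and convert rsize/wsize (the maximum bytes per NFS read/write request) to KiB. It assumes the output looks exactly like the listing above, with every property written as `key=value` separated by commas; `parse_mount_properties` is a hypothetical helper, not part of Windows or any library.

```python
import re

# Copy of the Properties listing from the Windows "mount" output above.
SAMPLE = r"""
Local    Remote                                 Properties
-------------------------------------------------------------------------------
p:       \\192.168.10.10\mnt\hgstpool\nfsds     UID=1000, GID=0
                                                rsize=131072, wsize=131072
                                                mount=soft, timeout=10.0
                                                retry=1, locking=no
                                                fileaccess=755, lang=ANSI
                                                casesensitive=no
                                                sec=sys
"""


def parse_mount_properties(text: str) -> dict[str, str]:
    """Hypothetical helper: collect every key=value token from the output.

    Values end at a comma or whitespace; the header, the dashed rule and
    the remote path contain no '=' and are therefore ignored.
    """
    return dict(re.findall(r"(\w+)=([^,\s]+)", text))


props = parse_mount_properties(SAMPLE)
rsize = int(props["rsize"])
wsize = int(props["wsize"])

print(f"rsize = {rsize} bytes = {rsize // 1024} KiB")
print(f"wsize = {wsize} bytes = {wsize // 1024} KiB")
print(f"mount type = {props['mount']}, locking = {props['locking']}")
```

Here both rsize and wsize work out to 128 KiB per request, on a soft mount with locking disabled.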
