Adam Tyler

Hello everyone! I am troubleshooting a FreeNAS performance issue and was hoping someone out there could provide insight.

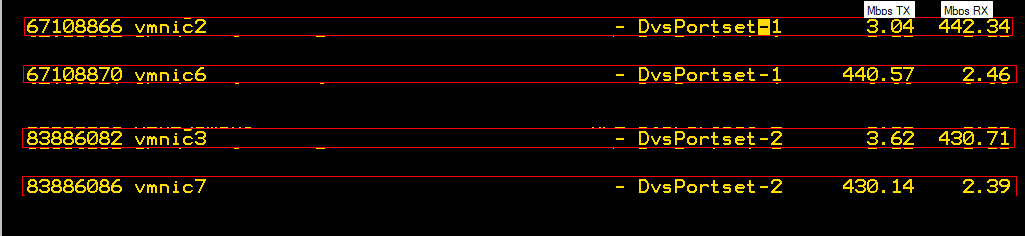

First, here is my single zPool setup:

Code:

      pool: zPool01
     state: ONLINE
      scan: none requested
    config:

        NAME                                            STATE     READ WRITE CKSUM
        zPool01                                         ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/9144804f-2d70-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
            gptid/926ecc51-2d70-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/3336bb1e-2d74-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
            gptid/34124ad5-2d74-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
          mirror-2                                      ONLINE       0     0     0
            gptid/6f4654c3-2d74-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
            gptid/701c8b6f-2d74-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
          mirror-3                                      ONLINE       0     0     0
            gptid/7adbb06f-2d74-11e8-8f45-003048b3d5b8  ONLINE       0     0     0
            gptid/7bbe348a-2d74-11e8-8f45-003048b3d5b8  ONLINE       0     0     0

    errors: No known data errors

Each drive is a 2 TB WD Red, model WD20EFRX. As rough math, I am expecting each mirror to provide a sustained transfer speed of about 60 MB/s. That depends on the workload, I realize, but it is a rough guide. Let me know if I am misinformed in this area.

So, at 60 MB/s per mirror across four mirrors, I should be able to push 240 MB/s total, or 1.92 Gbit/s.
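To spell out that rough math, here is a minimal sketch. The 60 MB/s per-mirror figure is my own assumption rather than a measurement, the mirror count comes from the pool layout above, and the helper name is only for illustration.

```python
# Back-of-the-envelope estimate only; 60 MB/s per mirror is an assumed figure.
MIRRORS = 4               # mirror-0 through mirror-3 in zPool01
MB_PER_S_PER_MIRROR = 60  # assumed sustained rate of one mirror vdev


def mb_per_s_to_gbit_per_s(mb_per_s: float) -> float:
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


total_mb_per_s = MIRRORS * MB_PER_S_PER_MIRROR
total_gbit_per_s = mb_per_s_to_gbit_per_s(total_mb_per_s)
print(f"{total_mb_per_s} MB/s total = {total_gbit_per_s:.2f} Gbit/s")
```

This treats the mirrors as striping perfectly, which real workloads may not achieve.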

So, when I do an internal speed test using the following commands I am seeing some interesting results:

Code:

    root@TYL-NAS01:/mnt/zPool01 # dd if=/dev/zero of=testfile bs=1024 count=3000000
    3000000+0 records in
    3000000+0 records out
    3072000000 bytes transferred in 16.906389 secs (181706453 bytes/sec)
    root@TYL-NAS01:/mnt/zPool01 # dd if=testfile of=/dev/zero bs=1024 count=300000
    300000+0 records in
    300000+0 records out
    307200000 bytes transferred in 6.937014 secs (44284184 bytes/sec)

    -rw-r--r--  1 root  wheel  2.9G Mar 26 11:04 testfile

It seems these commands wrote a 3 GB test file and read it back (the read pass used count=300000, so it only covered about 0.3 GB of the file), and came up with 1.45 Gbit/s write and 0.3542 Gbit/s read. Huh? Shouldn't read be much faster than write? Am I not interpreting these numbers correctly?
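For reference, this is how I am converting the dd figures, as a small sketch. The byte counts and rates are copied from the dd output above; the function and variable names are just illustrative.

```python
# Figures copied from the dd output; names here are illustrative only.
WRITE_BYTES = 3_072_000_000
READ_BYTES = 307_200_000
WRITE_BYTES_PER_S = 181_706_453
READ_BYTES_PER_S = 44_284_184


def bytes_per_s_to_gbit_per_s(rate: float) -> float:
    """Convert bytes per second to decimal gigabits per second."""
    return rate * 8 / 1e9


write_gbit = bytes_per_s_to_gbit_per_s(WRITE_BYTES_PER_S)
read_gbit = bytes_per_s_to_gbit_per_s(READ_BYTES_PER_S)
read_fraction = READ_BYTES / WRITE_BYTES  # share of the file the read pass covered

print(f"write: {WRITE_BYTES_PER_S / 1e6:.1f} MB/s = {write_gbit:.4f} Gbit/s")
print(f"read:  {READ_BYTES_PER_S / 1e6:.1f} MB/s = {read_gbit:.4f} Gbit/s")
print(f"read pass covered {read_fraction:.0%} of the bytes written")
```

Note that the two passes did not move the same amount of data, so the two rates may not be directly comparable.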

The FreeNAS server has 32 GB of RAM and runs on a 2nd-gen Intel Core i7 processor. It uses a new LSI SAS controller (9207-8i), if memory serves.

If I take this a step further and use a VM running on this zPool, connected to FreeNAS via iSCSI with round-robin (multipath I/O) load balancing across two 1 Gb NICs, I am getting about 103 MB/s write and up to 140 MB/s read. That doesn't seem consistent with the local FreeNAS results, and it is nowhere near the 1.92 Gbit/s the drives should be capable of. That isn't even considering their cache's ability to burst.
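To put the iSCSI numbers next to what the links could carry, here is a rough sketch. It assumes two 1 Gbit/s NICs with ideal round-robin and ignores all protocol overhead, so the percentages are only a ballpark; the names are made up for this example.

```python
# Ballpark comparison only: ignores TCP/iSCSI overhead and assumes ideal round-robin.
NIC_COUNT = 2
NIC_GBIT_PER_S = 1.0
OBSERVED_MB_PER_S = {"write": 103, "read": 140}  # figures measured inside the VM


def mb_per_s_to_gbit_per_s(mb_per_s: float) -> float:
    """Convert decimal megabytes per second to gigabits per second."""
    return mb_per_s * 8 / 1000


link_gbit = NIC_COUNT * NIC_GBIT_PER_S
utilization = {
    name: mb_per_s_to_gbit_per_s(rate) / link_gbit
    for name, rate in OBSERVED_MB_PER_S.items()
}

for name, share in utilization.items():
    gbit = mb_per_s_to_gbit_per_s(OBSERVED_MB_PER_S[name])
    print(f"{name}: {gbit:.3f} Gbit/s, {share:.0%} of {link_gbit:.0f} Gbit/s")
```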

When I perform a Storage vMotion between a different storage device and this FreeNAS box, take a look at the throughput metrics on the NIC links in the screenshot above.

That output was captured while reading from the other storage device and writing to FreeNAS.

As you can see from the screenshot, vmnic2 and vmnic3 are receiving (RX) just over 860 Mbps from the other storage device, and vmnic6 and vmnic7 are writing (TX) it to the FreeNAS storage device. That is two completely separate 2 Gb links, one for each storage device, perfectly load balanced. Only, each link is performing at about half of what it can...

-Adam