Selecting First FreeNAS Server Build

- Thread starter MalVeauX

- Joined

- Feb 18, 2014

- Messages

- 2,925

After I lost my FreeNAS Mini and Dell C2100 servers from water damage after a fire (HDD's saved) I built two such systems as you described above to replace them. They have been entirely satisfactory and I highly recommend their consideration.

I put them in Fractal Define R5 cases, added an additional front case fan and used Coolermaster Hyper 212 Evo CPU coolers, 32GiB ECC Ram, EVGA 850W PS's (overkill but have same PS in all workstations too so easy to carry spare(s) and swap in/out in case of trouble or testing).

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

Windows, no. Linux, yes, just be sure that zfsutils-linux is installed. There's a caveat here about feature flags, that'll largely be resolved assuming TrueNAS Core and Ubuntu 20.04.

There is a ZFS port for Windows but I'm not sure how stable it is. You're better off with a boot into Linux.

Thanks, that makes sense. I'm just trying to have a backup plan to deal with a total meltdown, assuming the drives are alive but the host machine is dead. I'll need to have an Ubuntu or similar distro on standby, or another FreeNAS system on standby, to do a quick read and test that I can migrate a drive as needed in such an event. I'll do this before moving any real data of course.

I am not sure if giving a Windows machine native access to a file share qualifies as streaming. My Windows HTPC with J-River also has access to all my media files through a Samba share but I never looked at it as streaming. J-River provides streaming though. Anyway, as long as another machine is doing the heavy lifting you are good.

I have never done it but I suspect that at least in theory that should be possible on any OS supporting ZFS. That being said, ZFS has so called feature flags. I am not an expert, to say the least, on this subject but I suspect that if the ZFS version you are running has feature flags that are not supported on the receiving system you are out of luck.
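To make the feature-flag caveat a bit more concrete, here is a minimal Python sketch. The feature names and both sets are illustrative assumptions, not output from a real pool; on a real system the lists would come from something like `zpool get all <pool>` on the source and `zpool upgrade -v` on the receiving host.

```python
# Hypothetical feature sets; real values come from the pool and the importing host.
pool_active_features = {"async_destroy", "lz4_compress", "large_blocks"}
host_supported_features = {"async_destroy", "lz4_compress"}


def unsupported_features(active, supported):
    """Return the active pool features the importing host does not know about."""
    return sorted(set(active) - set(supported))


missing = unsupported_features(pool_active_features, host_supported_features)
if missing:
    print("Import may fail or be limited; unsupported features:", missing)
else:
    print("No unsupported features; the pool should import.")
```

The point is only that the pool's active features need to be a subset of what the receiving system supports, which is worth checking before relying on a rescue distro.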

Thanks, fair enough, I'm not sure what else to call it other than streaming: slowly sending data from one machine to another, not at top speed, but at a sustained rate within a buffer. Maybe it's just slow sustained file transfer? Let me know what to call it if it's confusing, I don't know what else to call it. But yeah, the goal is just simple file sharing, and the client machines will sometimes play back an AVI or FLAC from the server through Kodi (client-side processing and decoding), which is not network intensive (I do this now over 5GHz Wi-Fi flawlessly with just basic Windows file sharing).

For x9 there's a suggestion over yonder that runs you about 380: https://www.ixsystems.com/community...anges-to-upgrade-as-high-as-512gb-of-ram.110/

Note that you can absolutely drop those costs further by going with 32GiB of RAM and choosing a 4-core CPU, which would be entirely adequate for your use case.

For example https://www.ebay.com/itm/Intel-Xeon...626484?hash=item23d7d09534:g:ss4AAOSwd59eyX65 and https://www.ebay.com/itm/SAMSUNG-32...304181&hash=item3da89715cb:g:bL0AAOSwCQJfJCY5

Further edit: Those x9srl-f are getting expensive. Some more digging to see what's affordable right now :). Could be an x9sri-f, e.g. https://www.ebay.com/itm/Supermicro...tel-C602-ECC-DDR3/264821242181?epid=127348132 for $150. Add CPU and memory and cooler and you are right around that 250 mark.

Further further edit: Maybe the 32GiB max idea?

$75 for board, e.g. https://www.ebay.com/p/127396627?iid=352558745024

$55 for Xeon quad, e.g. https://www.ebay.com/p/10011083316?iid=382486487188

$80 for 16 GiB of UDIMM RAM, e.g. https://www.ebay.com/itm/Crucial-8G...VER-Memory-RAM-8G/383582216503?epid=691116539

$40 for some form of CPU cooler for it, look around. Maybe this. https://www.ebay.com/itm/401123086618 . I assume you have thermal paste, otherwise that's an additional expense.

$250 total, add a boot drive (could be a $25 SSD like Intel 320 40GB), and you are set. An extra 80 gets you more memory, which gives you a bit of headroom. Nice but not necessary.
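As a quick sanity check on the arithmetic, the quoted prices can be summed in a few lines of Python. The prices are the ones listed above; the dictionary keys are just labels picked for this sketch.

```python
# Prices as quoted in the list above (USD, used parts).
parts = {"board": 75, "Xeon quad": 55, "16 GiB UDIMM": 80, "CPU cooler": 40}

base_total = sum(parts.values())      # board + CPU + RAM + cooler
with_boot_ssd = base_total + 25       # add the ~$25 boot SSD
with_extra_ram = with_boot_ssd + 80   # optional extra memory

print(base_total, with_boot_ssd, with_extra_ram)
```

So the $250 figure holds for the four core parts, with the boot drive and the optional memory on top of that.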

Thank you very much, this is great, I will go through and review it all. Thanks for digging up the links and sharing them. I do like the idea of getting as modern as possible to avoid issues and keep hardware relevant and easier to obtain when needed. I think 32GB is plenty, overkill really, for my purposes so that would be a big cost saver.

You know, in case of a real disaster, temporarily installing FreeNAS on another system is not very time consuming, especially if you have saved your configuration file in a safe place. It should not take more than 25 minutes.

Thanks, this is something I need to figure out and try a few times before moving into the final stages of filling up the NAS with data. Basically simulate a failure and how I would replace the drive(s) and get back to business. And simulate a machine failure (just remove the disc from the NAS) and see if I can read it on another system and retrieve data without too much fuss. Having a bootable Linux distro, something light, might handle this, or a FreeNAS option on another machine (any old machine should be fine with an available SATA port). I could also explore plugging it in via eSATA or USB with an enclosure (prefer SATA).

Very best,

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

@MalVeauX

If you are interested I have a spare x8sie-f I would be willing to send you for the cost of shipping.

Thank you, that is very kind, I will take a look at things. I have to learn about these boards and components and be in touch. Again, thank you. Very generous!

After I lost my FreeNAS Mini and Dell C2100 servers from water damage after a fire (HDD's saved) I built two such systems as you described above to replace them. They have been entirely satisfactory and I highly recommend their consideration.

I put them in Fractal Define R5 cases, added an additional front case fan and used Coolermaster Hyper 212 Evo CPU coolers, 32GiB ECC Ram, EVGA 850W PS's (overkill but have same PS in all workstations too so easy to carry spare(s) and swap in/out in case of trouble or testing).

That's very good to hear about those systems, sorry to hear about the loss! Sounds like things were recovered nicely? Do you mind sharing a little about how you recovered the drives?

Very best,

- Joined

- Feb 18, 2014

- Messages

- 2,925

The drives were "above the waterline" in the system cases (the mainboards were not). I pulled the drive caddies the night of the fire and put them in a very dry environment. Fired them up a few weeks later and all of them came back, same with my workstation drives, too. I've since lost a couple of the server drives out of a total of 18 recovered. Three Lenovo Thinkpad HDD's also survived - laptops were open, running, on desks, keyboards soaking wet and covered with debris, put drives in borrowed laptops the next day and they fired up just fine, too. Had no need to resort to backups on any system, though we had them...

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

The drives were "above the waterline" in the system cases (the mainboards were not). I pulled the drive caddies the night of the fire and put them in a very dry environment. Fired them up a few weeks later and all of them came back, same with my workstation drives, too. I've since lost a couple of the server drives out of a total of 18 recovered. Three Lenovo Thinkpad HDD's also survived - laptops were open, running, on desks, keyboards soaking wet and covered with debris, put drives in borrowed laptops the next day and they fired up just fine, too. Had no need to resort to backups on any system. though we had them...

Wow, yikes, that's crazy, sorry you had to go through that. Probably learned a lot though recovering from it.

Any hiccups getting your data back?

And what was your backup solution if that didn't work?

Very best,

- Joined

- Feb 18, 2014

- Messages

- 2,925

No hiccups, lots of tension.

All workstations and laptops backed up by Veeam nightly to the FreeNAS Mini, FreeNAS Mini backed up to the Dell C2100 (which on day of fire unfortunately was in the same building "temporarily" while finding it a new home). "Irreplaceable" data files Veeam backed up from the FreeNAS Mini to a portable USB drive daily. Working on cloud choice now.

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

No hiccups, lots of tension.

All workstations and laptops backed up by Veeam nightly to the FreeNAS Mini, FreeNAS Mini backed up to the Dell C2100 (which on day of fire unfortunately was in the same building "temporarily" while finding it a new home). "Irreplaceable" data files Veeam backed up from the FreeNAS Mini to a portable USB drive daily. Working on cloud choice now.

Thanks, sounds like quite a nightmare. I likely need to figure out a realistic backup plan. Though I don't think a cloud would work for me (limited internet speeds where I am). Maybe a physical drive in a fire box?

Very best,

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

Hello all,

I'm trying to wrap my head around the motherboard differences.

The SuperMicro X8 series vs X9 series; is the X9 series mainly something to consider for the more modern CPU options, memory options and controller options for SATA?

I'm looking real hard at this SuperMicro X9SRi-F because it has an LGA2011 CPU socket (allowing for a CPU like the 2650, but do I even need that over a 34xx series Xeon?), 8x RAM slots (may not need that?) and 10x SATA ports, which greatly simplifies some things, such as not needing a separate HBA starting out.

Specifically attractive to me is this on that above board:

Code:

6. 2 SATA3 (6Gb/s), 4 SATA2 (3Gb/s),

& 4 SATA (3Gb/s) ports via SCU

So it has 2x SATA III ports, 4x SATA II ports, and 4 more SATA ports via SCU. I had to look up SCU and found it to simply be an Intel thing to expand the controller to handle more SATA, rated for the same speed as SATA II. But overall only a handful of SATA III ports. So I'm curious if this is OK for the purpose of this server? The drives that will be used on these will be at best 7200 RPM hard disc drives such as WD Data Center drives in mirror pools (no RAIDZ for now I think). I would think these cannot really saturate a 3Gb/s link, right? So is having 10x SATA ports broken up into SATA3, SATA2 and SATA on SCU OK with FreeNAS and ZFS for the purpose of pools of mirrors? I'm not sure how it all works with respect to putting the pool drives on the SATA2 and SCU ports (8 total) and reserving the SATA3 ports for connecting the boot disc and having a spare for use (hot spare maybe). Or would I be better off getting a board with fewer SATA ports and just putting an HBA card in there (LSI) and having 8 more SATA ports that way?
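A rough back-of-the-envelope check on the saturation question, as a Python sketch. SATA uses 8b/10b encoding, so a 3Gb/s link carries about 300 MB/s of data. The 200 MB/s drive figure below is an assumption chosen for illustration, not a measured value for any particular WD drive.

```python
def sata_payload_mb_per_s(line_rate_gbit):
    """Usable MB/s on a SATA link: 8b/10b encoding puts 8 data bits in 10 wire bits."""
    return line_rate_gbit * 1000 * 8 / 10 / 8


# Assumption: rough sustained sequential rate of a 7200 RPM hard drive.
ASSUMED_HDD_MB_PER_S = 200

for name, rate in (("SATA II", 3.0), ("SATA III", 6.0)):
    limit = sata_payload_mb_per_s(rate)
    print(name, limit, "MB/s link limit, headroom:", limit - ASSUMED_HDD_MB_PER_S)
```

Under that assumption a single spinning drive stays below the SATA II limit, so the 3Gb/s ports should not be the bottleneck for hard-disk mirrors.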

I'm trying to see if it's worth going for a board like that, or if it would be better for my purposes to simply get the other board, the SuperMicro X8SIA-F with its 6x SATA ports (comes with RAM & CPU immediately), and just add an HBA as needed?

Basically, a slightly more modern board with 10x SATA (no CPU and RAM included) versus the slightly older board with Xeon & RAM included with 6x SATA, and simply add an HBA as needed. I'm not sure if the CPU differences even matter for my purpose. So mainly it's more about the architecture & chipset differences and if they matter significantly for my purpose, if anyone has thoughts on this?

Ultimately it just seems like putting more money into the board for the X9 series vs the X8 series, but without it really being a major benefit.

Very best,

The X9SRi-F is a great board; however, if you plan on doing a mostly flash NAS, the SATA ports are mostly 3Gb/s, which won't be able to keep up with a modern SSD. You could also consider the X9SRH-7F, which has the integrated SAS2308 providing 8 SAS ports at 6Gb/s. Just be sure to flash it with IT mode firmware. If you are going for spinning rust then either board is a good choice in my opinion.

You probably don't need the extra CPU power but it never hurts, plus you can get cheap RAM for the E5 CPUs.

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

The X9sri-f is a great board however if you plan on doing a mostly flash nas the sata ports are mostly 3Gb/s which won't be able to keep up with a modern ssd. You could also consider the x9-srh7f which has the integrated sas2308 which provides 8 SAS ports which are 6 Gb/s. Just be sure to flash it with IT mode firmware. If you are going for spinning rust then either board is a good choice in my opinion.

You probably don't need the extra cpu power but it never hurts, plus you can get cheap Ram for the e5 CPU's

Thanks; by Flash NAS do you mean SSD or similar? I likely will not be doing that, as I need more capacity than that will provide currently and I don't need supreme speed of transfer. For quite a while I will still be using 7200RPM hard discs. Good point about the RAM relative to the CPU. I will take a look more into that!

Addendum:

Ugh, looking at this X8SIL-F with Xeon X3440 and 16GB ECC RAM for a nice little price. I found an article showing this idling at 48 watts with drives on a Windows platform, so it is likely to be a bit less with FreeNAS, which has no desktop GUI using resources in the background? That's acceptable consumption I think; my electric rate in my area is around $0.11/kWh, so maybe $4 give or take per month? I may have calculated that incorrectly.
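For anyone wanting to check that estimate, here is the calculation as a small Python sketch. It assumes the box idles at a constant 48 watts around the clock and uses a 30-day month; real usage under load will be somewhat higher.

```python
def monthly_cost_usd(watts, usd_per_kwh, hours=24 * 30):
    """Cost of running a constant load for a month (30 days by default)."""
    kwh = watts / 1000 * hours
    return kwh * usd_per_kwh


cost = monthly_cost_usd(48, 0.11)
print(round(cost, 2))
```

That is 34.56 kWh per month, or roughly $3.80, so the "$4 give or take" estimate holds.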

I'm thinking a Seasonic Focus GX-550 Fully Modular 80+ Gold PSU.

Very best,

By Flash, I do mean all SSD's.

While the x8SIL-F is a bit on the older side it works great for Freenas. The Freenas system I just replaced was a X8SIL-F with Xeon X3430 and 16GB ECC Ram. The only real reason I moved up to the X9srh was I decided to host storage for my Proxmox environment with ISCSI and needed more ram than what the X8SIL-F would accept.

For your use case, I think x8SIL-F with XEON x3440 and 16Gb ECC RAM you mentioned would be a great fit and for just $90 including shipping, you don't have a ton invested should you choose to upgrade at a later date.

The Seasonic Focus GX-550 Fully Modular 80+ Gold PSU also looks like a good power supply with plenty of SATA connectors.

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

By Flash, I do mean all SSD's.

While the x8SIL-F is a bit on the older side it works great for Freenas. The Freenas system I just replaced was a X8SIL-F with Xeon X3430 and 16GB ECC Ram. The only real reason I moved up to the X9srh was I decided to host storage for my Proxmox environment with ISCSI and needed more ram than what the X8SIL-F would accept.

For your use case, I think x8SIL-F with XEON x3440 and 16Gb ECC RAM you mentioned would be a great fit and for just $90 including shipping, you don't have a ton invested should you choose to upgrade at a later date.

The Seasonic Focus GX-550 Fully Modular 80+ Gold PSU also looks like a good power supply with plenty of SATA connectors.

Thanks for the input; I love the idea of SSD, but not quite there for cost for high capacity, at least for my application and purpose. One day maybe they will become high capacity, but for now, I'll have to stick with old spinning discs with higher capacity. I have SSD in all my machines but the biggest one is only 1TB.

I may move forward with this inexpensive kit then. I have some old drives laying around to use for testing so I can learn the OS/GUI and learn how to deal with pools, users, Samba, etc. I'd like to simulate the faults/failures and replace drives using the test drives I have laying around that are not needed anymore to get an idea of things before installing the big drives and copying over the data that I want redundancy for.

I appreciate everyone's input. Please feel free to throw out any other tips or info!

Very best,

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

Update:

I have won an auction on an X8SIL-F Supermicro motherboard with 16GB of ECC RAM and a Xeon X3440 CPU for $50 USD. So I'm starting with this. I've picked up the power supply also (new though, will not gamble used there).

I will test this out with some old drives I have lying around to learn FreeNAS 11+ on. I'll start with an SSD boot drive (I have a few old ones lying around) and will use some normal, low-capacity hard drives to make a mirror pool and just test everything. I also have two Linux distros to test with, so that, assuming a total hardware meltdown, I can take a drive from my pool, plug it into a Linux machine with up-to-date ZFS utilities, and read and recover data from at least that one drive. If I can do all that a few times, and it's reasonable, I'll move forward with getting the larger new drives and get ready to transfer a lot of data in chunks to the new mirror pool.

I'm going to shop for an appropriate case to hold this for now, with the ability to mount maybe 6 physical drives for future expansion of pools. I think my goal will be to rotate two mirror pools. First pool will be an 8TB 1:1 mirror pool and a boot drive. Then, later, when drives become more affordable in the 12TB+ range, I will add a second pool and migrate the data from the 8TB pool over to that with the new data and retire the 8TB drives and wait for the next large capacity drives range to enter the scene and price range. I don't care to have a ton of drives all consuming power with parity and stuff. I will stick to mirrors strictly I think based on everything I've read so far for maximum simplicity and total redundancy.

Update: Picked out a case, should be here in a few days. Went with a Corsair Carbide 100R, inexpensive, but lots of room for cable management and can handle a bunch of hard drives and up to ATX size boards.

My goal is to have a living copy in the working system (physical copy #1), a redundant copy on the mirror system in the FreeNAS box (physical copy #2, with redundancy) and then the important stuff will have a 3rd copy on an external hard drive that will live in a fireproof safe that I will refresh every 3 years or so. The idea being a basic backup plan, 2 physical copies for the working environment with some redundancy, 3 physical copies for important stuff.

Will update with build once everything arrives.

Very best,

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

Update:

I have the hardware up and running. I started testing things just to make sure the hardware is in good working order, so I started stressing it on doing tasks to see how it handles everything and to see how the power consumption would be and overall temperature and noise levels. To test things, I installed Lubuntu via USB and installed it to a SSD I had laying around, just to play with the hardware and test the memory and CPU and everything before I wipe it and migrate to installing FreeNAS. Mainly I just wanted to see the hardware, do some network copying via SMB and generally operate with a GUI and browse websites, etc, just to see how the older hardware behaved and what consumption and temperatures were produced.

I ended up with:

Supermicro X8SIL-F Motherboard

Intel Xeon X3440 CPU

16GB ECC DDR3 RAM (4x4)

(currently have 1x SSD and 2x HDD's installed too)

I installed it into a Corsair Carbide Series 100R case.

I'm currently using the stock CPU cooler, but will be replacing this with a Cooler Master Hyper 212 EVO shortly just to get that last little bit of heat handling and silence. That said, the stock temperatures are fine so far, so I'm not too terribly concerned.

Power Consumption & Temps:

Current CPU temperature at idle is 26C with the stock Intel cooler (it's 21.6C ambient temp in my office).

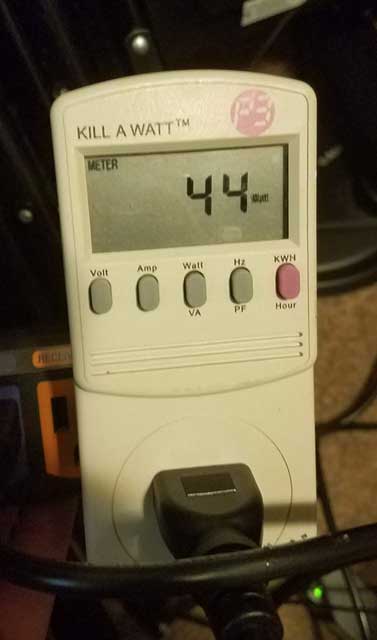

Power Consumption at idle with 3 drives running (1x SSD, 2x HDD): 43~44 watts

While actively copying 3GB files back and forth over the network (using the onboard Intel Gigabit NIC) to my router and to my other workstation, the power consumption spiked anywhere from 53 to 68 watts with the discs whirling and copying going on, while running the Lubuntu operating system with a few applications open.

CPU temperature during active file copying (testing on 200GB of files, drive to drive internally) while running Lubuntu is 38C in the same environment, with the same consumption as above (averaging 66 watts currently, all discs spinning and active file copying between them).

The two drives copying right now are some old WD Blue drives. Current internal transfer speed is averaging 3GB per 60 seconds sustained after a half hour of copying and monitoring. Not super fast, but fast enough for me for this purpose.
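To put that rate in more familiar units, here is a short Python sketch. It assumes decimal units (1 GB = 1000 MB) and, for the time estimate, that the same rate would hold when filling an 8 TB pool. That second assumption is only for illustration, since different drives and FreeNAS itself will behave differently.

```python
def mb_per_s(gigabytes, seconds):
    """Convert 'GB copied in N seconds' to MB/s, using decimal units."""
    return gigabytes * 1000 / seconds


def hours_to_copy(terabytes, rate_mb_per_s):
    """Hours needed to move a given number of TB at a steady MB/s rate."""
    return terabytes * 1_000_000 / rate_mb_per_s / 3600


rate = mb_per_s(3, 60)
print(rate, round(hours_to_copy(8, rate), 1))
```

So 3GB per minute is about 50 MB/s, and at that pace a full 8 TB would take somewhere around 44 hours, which supports the plan of moving data over in chunks.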

Network Transfer Speed:

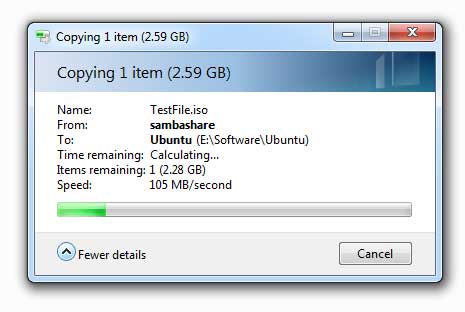

Sustained copy rate so far was around 90MB/s on the 3GB file across the network (wired Gigabit; Intel NICs in both machines and my ASUS router; the server is running Lubuntu and I installed Samba temporarily to test sharing and copying this data over the network from a Win7 machine over an SMB share). Burst was closer to 160MB/s. This is fairly slow for large data, but it's plenty fast for copying a few files and for sustained slow reads over the network (such as reading an AVI file over the network to play on another machine without any transcoding). I would certainly like this to be faster, but it would require upgrading all machines to 10G networking and a new router, etc. So for now, I'll just live with the speeds.
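For context on how close that is to the limit of the link, a small Python sketch follows. It assumes the reported figure of 90 is in megabytes per second and compares it against the raw gigabit line rate, ignoring protocol overhead.

```python
GIGABIT_MB_PER_S = 1000 / 8  # 125 MB/s raw line rate, before protocol overhead


def link_utilisation(observed_mb_per_s, line_rate_mb_per_s=GIGABIT_MB_PER_S):
    """Fraction of the raw line rate that the observed transfer is using."""
    return observed_mb_per_s / line_rate_mb_per_s


print(f"{link_utilisation(90):.0%}")
```

Under that assumption the sustained copy is already using roughly 72% of what gigabit can carry. A burst reading above 125 MB/s cannot be wire speed on this link, so it probably reflects caching at the start of the copy rather than actual network throughput.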

Will stress a few more days. Then install FreeNAS and go headless.

Very best,

Evertb1

Guru

- Joined

- May 31, 2016

- Messages

- 700

Looks like you are nicely on your way.

I have used several generations of Xeons on home servers and homelab servers and always just used the stock cooler. Unless you have problems with the sound (noise) and/or temperature I would not bother. Most of the time your CPU will not work all that hard. It's not a gaming rig. Though I must admit that the stock coolers are ugly.

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

Looks like you are nicely on your way.

I have used several generations of Xeons on home servers and homelab servers and always just used the stock cooler. Unless you have problems with the sound (noise) and/or temperature I would not bother. Most of the time your CPU will not work all that hard. It's not a gaming rig. Though I must admit that the stock coolers are ugly

Thanks,

That's true, these temps are nothing to be concerned over. I would definitely be doing something else if it were in the 50s to 60s C, as it would be a heater in my office. Florida is hot enough without adding yet another hot box to the room. A sustained mid-20s to 30s C is not bad. As you said, the CPU will hardly ever be at 100% load.

Hard to argue with it for a $50 package, plus some shipping. I never realized this stuff was this inexpensive and this common out there (granted, it's 8 years old and used).

The stock cooler is pretty quiet currently, since it doesn't need to work very hard. So noise so far is not an issue at all. This case is completely open currently too, so maybe temps will change a little when I close it up completely and then I won't hear anything, but if it has to work harder for temps, then maybe it will be noisier. Not too bad anyways. But you are right, stock coolers are ugly! I may still change it out with a $30 air cooler to get the temps lower and dead silent pretty much when the box is totally closed. Probably a waste of money thinking logically about the efficiency and results per the cost spent relative to the already working stock cooler. It will not be priority for sure. I just don't want to have to ever take the board out to install a plate behind it for a cooler in the future, so just thinking ahead to avoid unplugging stuff and having to replug, re-seat, etc. I would pay money just to not have to do that eventually if I did find out I needed a better cooler, hah!

Edit: Well, after reading through a few things, testing a bit more, I decided to go ahead with a 3rd party cooler. Went with an Arctic Freezer 33, was only $24 shipped, compares to Hyper 212 and others and saw some good real world examples on same CPU. Will go ahead and install it early on to avoid having to take out the board later to put the plate on and all that. Won't hurt it. Will just have better temps and no noise for when I close the case up for a long while.

Very best,

MalVeauX

Contributor

- Joined

- Aug 6, 2020

- Messages

- 110

Update:

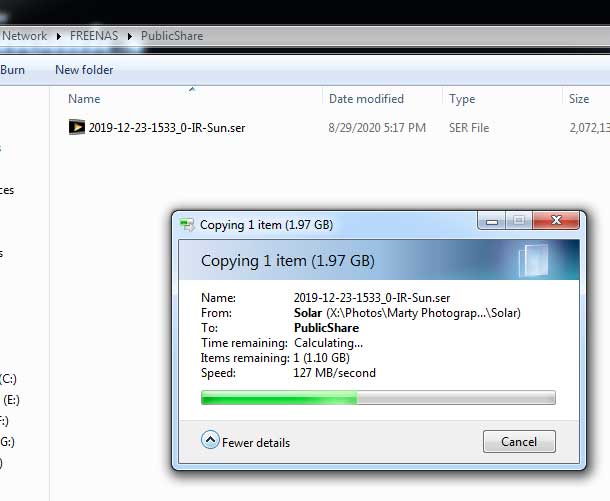

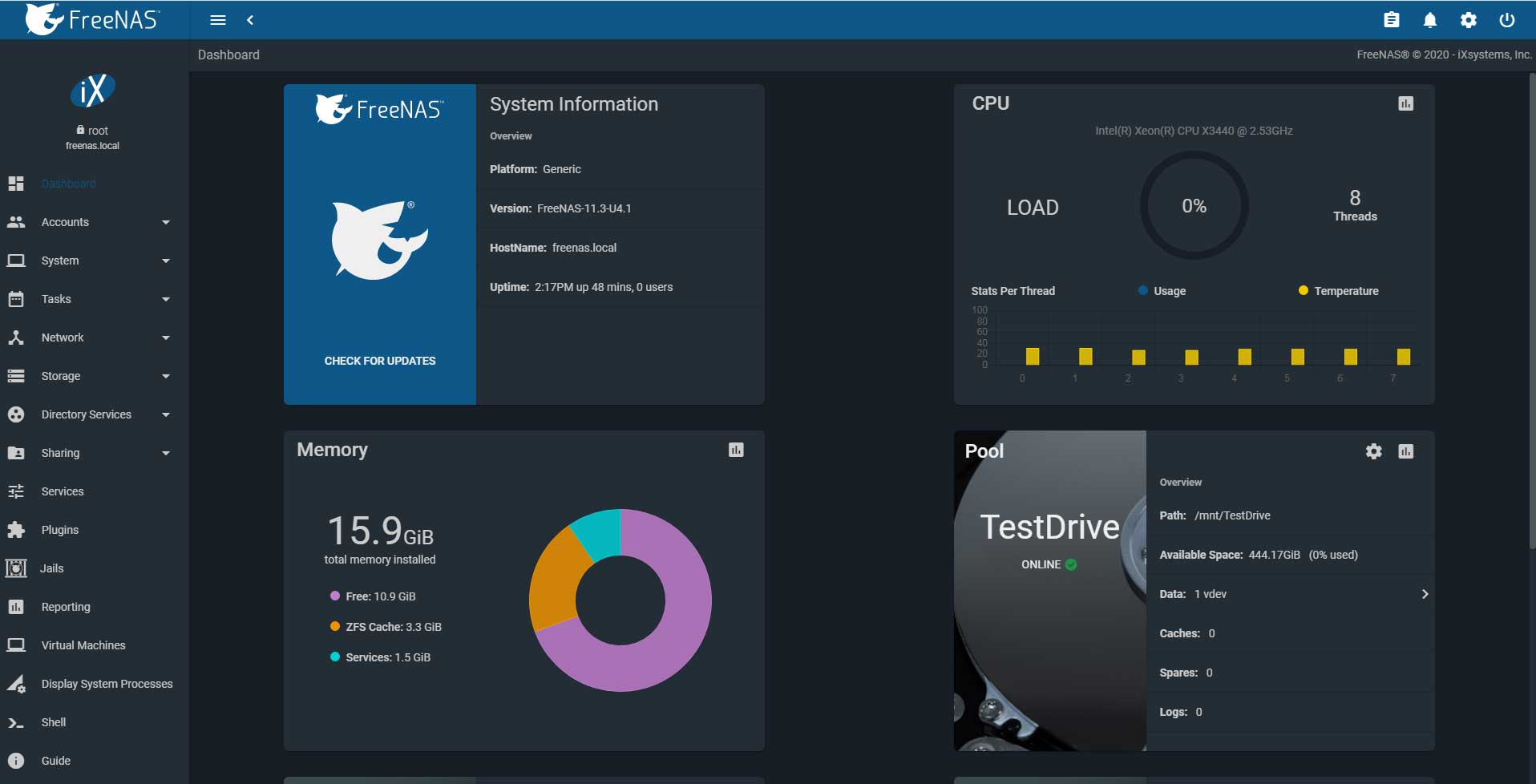

I have installed FreeNAS 11.3 to start learning to use it. I have it on just some random HDD with low capacity, nothing special, just a basic drive to play with pools, shares, users, etc. I went through some information and learned to make a pool, a publicly accessible dataset on that pool, a group, and a user with permissions in that group. Then I turned on the SMB service and created some shares with permissions for the user group. Got it running.

Tested it with a 2 GB file to see how it handled transfer speeds with FreeNAS in control, and oddly enough the speed was better than what I was getting with just Lubuntu. It sustained roughly 120 MB/s the whole time, so that was a bit of an improvement (over 20% compared to what I was getting in Lubuntu with basic Samba sharing).
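The "over 20%" figure can be checked with a one-line calculation. This is only a sketch: it assumes the earlier Lubuntu/Samba test was about 90 MB/s, and the helper name is made up for illustration.

```python
def percent_gain(before_mb_s: float, after_mb_s: float) -> float:
    """Relative speed-up of after over before, as a percentage."""
    return (after_mb_s - before_mb_s) * 100 / before_mb_s


# Assumed baseline: roughly 90 MB/s sustained under Lubuntu with Samba.
print(round(percent_gain(90, 120), 1))   # 33.3
# Any baseline up to 100 MB/s still gives at least a 20% gain at 120 MB/s.
print(round(percent_gain(100, 120), 1))  # 20.0
```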

Dashboard up and running, nothing special, just learning to use it. FreeNAS loves RAM. My goodness. A simple service and cache already soaks up 5 GB of RAM instantly!

Soon, I will try to simulate some drive failure scenarios to see how a recovery would go and whether or not I could access a single drive out of the FreeNAS box via Linux (Ubuntu with ZFS utils) in case of a total meltdown of the hardware. And I need to learn to do snapshots and how to back up configurations, etc.
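For the Linux recovery test, a rough sketch of what the import step could look like, wrapped in Python so it prints the command by default and only runs it when asked. The pool name `tank` and the mount root `/mnt/recovery` are placeholders, not values from this build. The flags are standard `zpool import` options: `-o readonly=on` keeps the rescue machine from writing to the pool, `-f` is needed when the pool was never exported from the dead host, and `-R` mounts everything under an alternate root.

```python
import shlex
import subprocess


def build_import_command(pool, altroot="/mnt/recovery", readonly=True, force=True):
    """Build a zpool import command line as a list of arguments."""
    cmd = ["zpool", "import"]
    if readonly:
        cmd += ["-o", "readonly=on"]  # do not modify the pool on the rescue box
    if force:
        cmd.append("-f")              # pool was last used by another host
    cmd += ["-R", altroot, pool]      # mount datasets under an alternate root
    return cmd


def import_pool(pool, dry_run=True, **options):
    """Print the command (dry run) or execute it; needs root and ZFS installed."""
    cmd = build_import_command(pool, **options)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)
    return cmd


# "tank" is a hypothetical pool name.
import_pool("tank")  # prints: zpool import -o readonly=on -f -R /mnt/recovery tank
```

Running plain `zpool import` with no pool name lists the pools that the attached disks offer, which is a safe first check. A single drive can only be imported on its own if it holds a complete copy of the data, for example one side of a mirror.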

I have a new SSD in the mail to become the new boot drive afterwards.

Very best,

Evertb1

Guru

- Joined

- May 31, 2016

- Messages

- 700

"Soon, I will try to simulate some drive failure scenarios"

Just some fun. They tried to fail a FreeNAS RAIDZ1 setup as well.

Looks promising.

Plex, however, needs a CPU with a PassMark score of about 2000 to transcode a single 1080p stream at LOW quality, which looks like a 3-hour video squeezed into 600 MB: ugly as hell.

Medium transcoding settings max out an i3-4170, a comparably performing CPU, and produce a very watchable stream.

For 2-3 users watching at once, you will have to transcode beforehand (the "optimise for..." option in Plex) or live with ugly streams.
