I've been running this pool for years with no problems, and have replaced several drives along the way without issue.

I upgraded the pool recently (within the last 6 months) and upgraded to the latest stable FreeNAS, again with no problems. The GUI is very pretty, I love it. :)

So anyway, I went to replace a failing disk and noticed the behavior has changed: it shows "replacing" now, which it's never done before. But hey, looks good!

But then something went wrong: the disk I was replacing to failed. So I replaced that one and resilvered. Now it's stuck, and it's done the same thing to another disk too.

So while the pool is still functional, I have these split-out "replacing" entries, and even though there's a replacement disk there that has completely resilvered, I cannot remove the old ones.

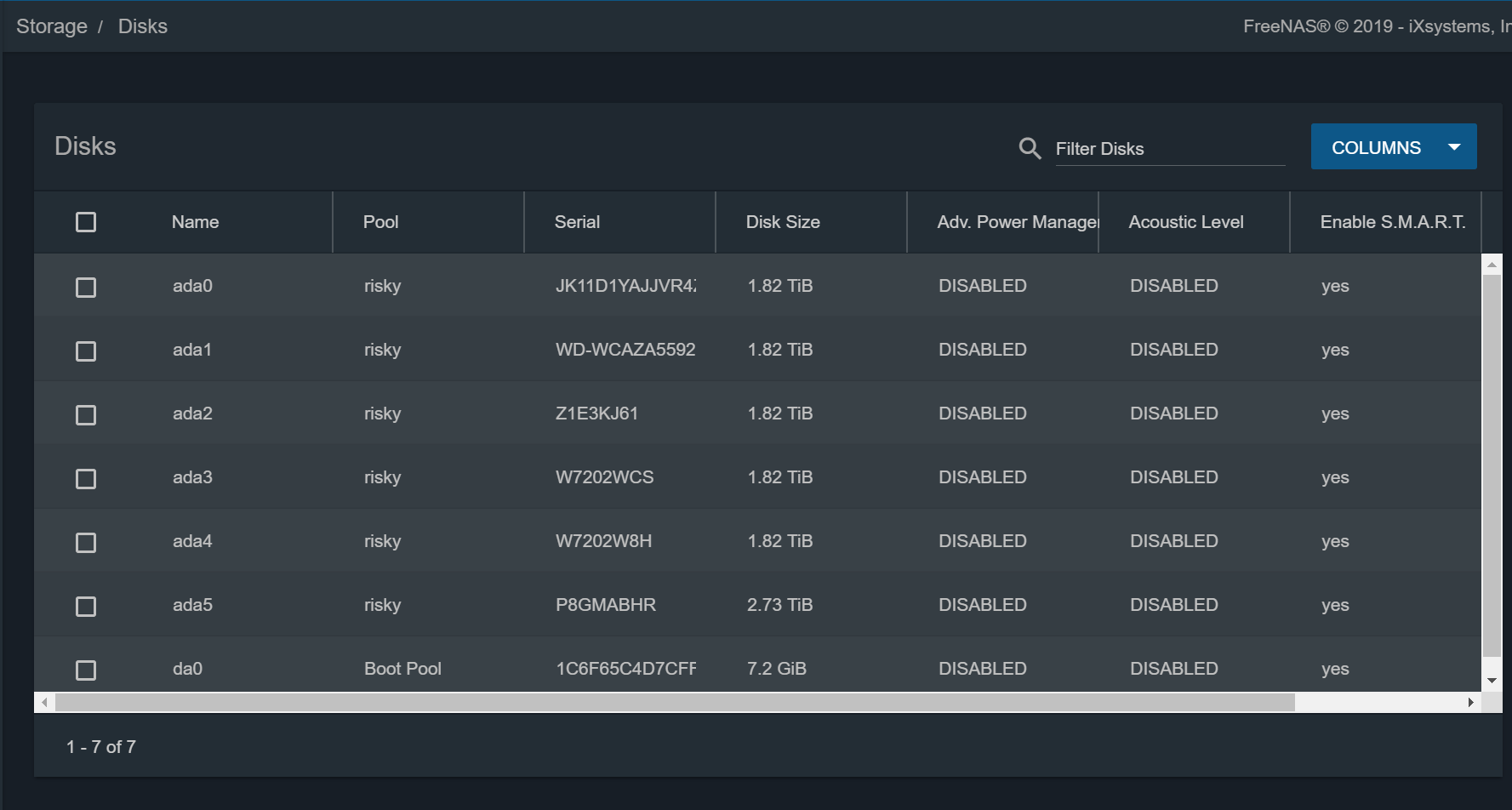

It's a 7-drive RAIDZ2. With my one disk failure, and then attempting to replace the replacement, I'm down to 6 online disks, so I'm pretty hesitant to make it worse at this point.

What I was hoping to do was clear any errors, remove any damaged files, get zpool status looking good but degraded at 6 drives, and then reinsert my 7th drive and be good to go. But I can't offline the "ghost" drives and I can't detach them. It says "insufficient replicas" even though the disks are "unavailable", so they literally cannot be contributing to the pool, correct?

I tried to manually offline the disks from the CLI because the GUI threw the errors above, and I also tried the legacy GUI: same behavior.

I suspect there is some record somewhere in my pool that says those disks are there (they aren't), and that this has caused the behavior. I don't know how to proceed from here, hence asking for help. I can say that the pool is still functional; I've done several resilvers, and still no joy.

There was some data loss: about 3 files and a log. I've removed the lost files, replaced them, and cleared the errors, but they keep coming back and being reported as lost. It's as if there is some sort of journal that it's reading and not updating, so it's stuck here?

I'm sorry if I'm not explaining the issue clearly or if there is some obvious solution I'm not seeing, but I would really appreciate any pointers, be it existing documentation or anything else.

I hear references to "snapshots", but I'm not sure enough about what those are to try messing with them.

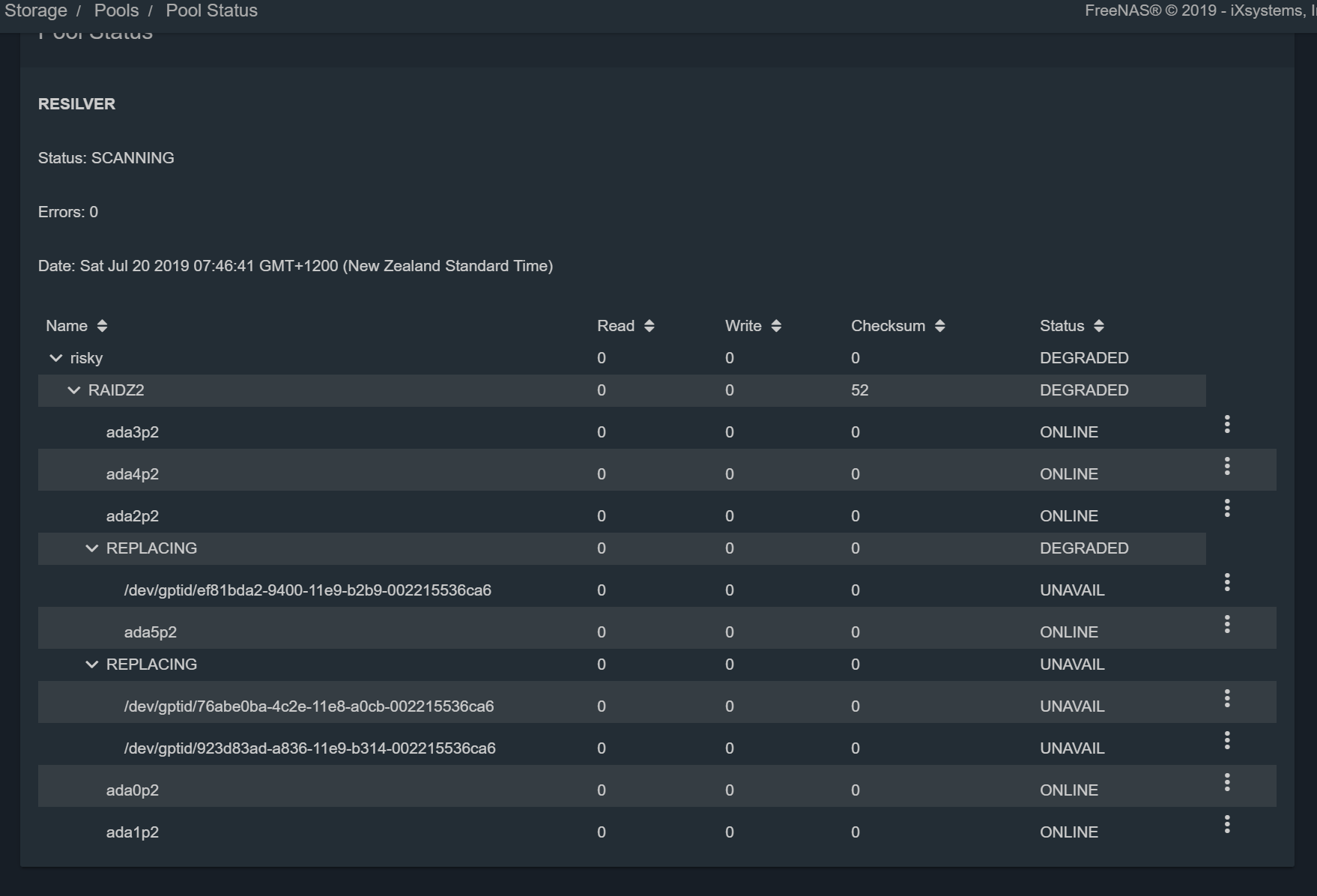

Anyway, here's a screenshot. It's resilvering again after I issued a zpool clear. Before you tell me: yes, I know, I'll wait for the resilver to complete again (this will be the 3rd resilver in a week :( )

    root@freenas:~ # zpool status -v risky
      pool: risky
     state: DEGRADED
    status: One or more devices is currently being resilvered. The pool will
            continue to function, possibly in a degraded state.
    action: Wait for the resilver to complete.
      scan: resilver in progress since Sat Jul 20 07:46:41 2019
            2.05T scanned at 385M/s, 1.49T issued at 280M/s, 9.45T total
            209G resilvered, 15.75% done, 0 days 08:17:21 to go
    config:

            NAME                                                STATE    READ WRITE CKSUM
            risky                                               DEGRADED    0     0    22
              raidz2-0                                          DEGRADED    0     0    44
                gptid/7cfa6df5-1064-11e5-a8c1-002215536ca6      ONLINE      0     0     0
                gptid/7d5dd3c6-1064-11e5-a8c1-002215536ca6      ONLINE      0     0     0
                gptid/c63d6cc7-0097-11e7-947b-002215536ca6      ONLINE      0     0     0
                replacing-3                                     DEGRADED    0     0     0
                  6664045380065261761                           UNAVAIL     0     0     0  was /dev/gptid/ef81bda2-9400-11e9-b2b9-002215536ca6
                  gptid/bab70f66-a6bc-11e9-9ee1-002215536ca6    ONLINE      0     0     0
                replacing-4                                     UNAVAIL     0     0     0
                  12799727272099027470                          UNAVAIL     0     0     0  was /dev/gptid/76abe0ba-4c2e-11e8-a0cb-002215536ca6
                  13679467726233141733                          UNAVAIL     0     0     0  was /dev/gptid/923d83ad-a836-11e9-b314-002215536ca6
                gptid/df6777e3-90bb-11e9-84a2-002215536ca6      ONLINE      0     0     0
                gptid/35058293-343f-11e6-b971-002215536ca6      ONLINE      0     0     0

    errors: Permanent errors have been detected in the following files:

            risky:<0x2b305>
            risky:<0x2b30b>
            risky:<0x2b311>
            risky:<0x2b2f9>
            risky/.system/syslog-903a2a7d45924e86a448cccd86aa67c2:<0x2e>
    root@freenas:~ #
    root@freenas:~ #
    root@freenas:~ # glabel status
                                          Name  Status  Components
    gptid/df6777e3-90bb-11e9-84a2-002215536ca6     N/A  ada0p2
    gptid/35058293-343f-11e6-b971-002215536ca6     N/A  ada1p2
    gptid/c63d6cc7-0097-11e7-947b-002215536ca6     N/A  ada2p2
    gptid/7cfa6df5-1064-11e5-a8c1-002215536ca6     N/A  ada3p2
    gptid/7d5dd3c6-1064-11e5-a8c1-002215536ca6     N/A  ada4p2
    gptid/bab70f66-a6bc-11e9-9ee1-002215536ca6     N/A  ada5p2
    gptid/eb722a12-90b7-11e9-b9db-002215536ca6     N/A  da0p1
    root@freenas:~ # camcontrol devlist
    <HITACHI H7220AA30SUN2.0T 1019MJVR4Z JKAOA28A> at scbus0 target 0 lun 0 (pass0,ada0)
    <WDC WD20EARS-00MVWB0 51.0AB51> at scbus4 target 0 lun 0 (pass1,ada1)
    <ST2000DM001-1CH164 CC26> at scbus5 target 0 lun 0 (pass2,ada2)
    <ST2000VN000-1HJ164 SC60> at scbus6 target 0 lun 0 (pass3,ada3)
    <ST2000VN000-1HJ164 SC60> at scbus7 target 0 lun 0 (pass4,ada4)
    <Hitachi HUS724030ALE641 MJ8OA5F0> at scbus9 target 0 lun 0 (pass5,ada5)
    <Kingston DataTraveler 3.0 > at scbus11 target 0 lun 0 (pass6,da0)
    root@freenas:~ #

Any advice anyone can offer is most appreciated.

What I was hoping to do is remove that "old" /dev/gptid/ef81bda2-9400-11e9-b2b9-002215536ca6, which has been replaced by ada5, aka gptid/bab70f66-a6bc-11e9-9ee1-002215536ca6.

Then remove both /dev/gptid/76abe0ba-4c2e-11e8-a0cb-002215536ca6 and /dev/gptid/923d83ad-a836-11e9-b314-002215536ca6, which would leave a RAIDZ2 running with one drive missing, then add in my other new 3 TB drive and have the array back to a full RAIDZ2.
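
Spelled out as commands, the plan above might look something like the dry-run sketch below. It only prints the commands and executes nothing. The GUIDs are copied from the zpool status output in this post; `NEW_DISK` is a placeholder, since the new 3 TB drive's gptid isn't known yet. Two assumptions to flag: that `zpool detach` and `zpool replace` accept a vdev GUID in place of a device name, and that only one member of replacing-4 can be detached, with the remaining one then being the missing slot the new drive replaces. The detach step is the one that currently fails with "insufficient replicas", so this shows the intent rather than a known-good fix.

```shell
#!/bin/sh
# Dry run: prints the commands for the plan above, executes nothing.
POOL="risky"

# GUIDs copied from the zpool status output in this post.
# was /dev/gptid/ef81bda2-9400-11e9-b2b9-002215536ca6 (already replaced by ada5)
OLD_REPLACED="6664045380065261761"
# was /dev/gptid/923d83ad-a836-11e9-b314-002215536ca6 (the replacement that failed)
FAILED_REPLACEMENT="13679467726233141733"
# was /dev/gptid/76abe0ba-4c2e-11e8-a0cb-002215536ca6 (the original missing disk)
ORIGINAL_MISSING="12799727272099027470"

# Placeholder (assumption): substitute the gptid of the new 3 TB drive.
NEW_DISK="gptid/NEW-DISK-PLACEHOLDER"

print_plan() {
    # Drop the stale half of replacing-3, leaving the resilvered disk in place.
    echo "zpool detach $POOL $OLD_REPLACED"
    # Drop the failed replacement from replacing-4.
    echo "zpool detach $POOL $FAILED_REPLACEMENT"
    # Replace the remaining missing member with the new drive.
    echo "zpool replace $POOL $ORIGINAL_MISSING $NEW_DISK"
}

print_plan
```

On FreeNAS the replace step would normally go through the GUI, which also handles partitioning the new drive, so the last line is only there to show the intended end state.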

Unfortunately, at this point I'm just a bit lost on 1. what I've done wrong and 2. how to get out of it hahaha

I suspect what happened with the data loss is that one of the bad drives was causing random camcontrol errors in dmesg. They stopped once I was able to get rid of the drive, but I had attempted to resilver before I identified that as the issue. To be clear, I'm not concerned about the lost files and have no expectation of recovering them, but I would be very grateful if there's a way I can preserve the rest of the pool.

It's still an operational volume in every way except for the known lost files.

Anyway, thanks for any help :D
