tc9999


Hi,

Earlier this week I got a warning that my pool status was degraded. One drive showed as unavailable. The warning gave its gptid, but I could not match that to the serial number of any of my drives. When I checked again the next day, one drive was unavailable and another was faulted.

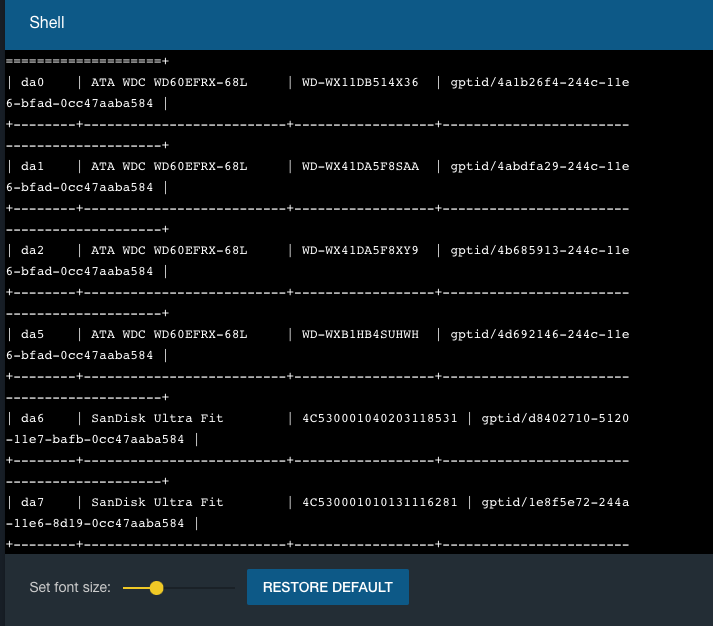

I have 7 HDDs, but both `glabel status` and a script I found in this forum only showed 4 drives with their gptid/serial:

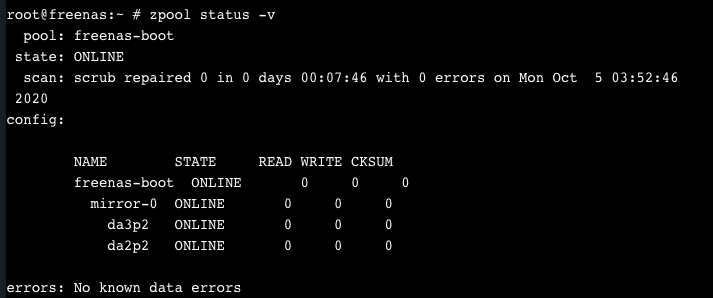
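For reference, here is a minimal sketch of how the gptid-to-disk matching could be scripted. It only parses text in the three-column layout that `glabel status` prints (Name, Status, Components). The function name `parse_glabel_status` is hypothetical, and it assumes partition names end in `p<number>`, as in `ada0p2`.

```python
import re


def parse_glabel_status(text):
    """Map each gptid label to the disk it lives on.

    `text` is expected to be the raw output of `glabel status`
    (columns: Name, Status, Components). Hypothetical helper, not
    part of FreeNAS.
    """
    mapping = {}
    for line in text.splitlines():
        fields = line.split()
        # Data rows have exactly three columns; skip the header and
        # any label that is not a gptid.
        if len(fields) != 3 or not fields[0].startswith("gptid/"):
            continue
        label, _status, partition = fields
        # Assumption: partitions are named <disk>p<N>, e.g. "ada0p2" -> "ada0".
        disk = re.sub(r"p\d+$", "", partition)
        mapping[label] = disk
    return mapping
```

Each disk name returned this way can then be passed to `smartctl -i /dev/<disk>` to read that drive's serial number. Any drive that does not appear in the mapping at all is one the system is not currently labelling.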

Since I couldn't figure out which of the 3 remaining drives were bad, I shut the server down. Today I turned it back on to try again, but now the pool status shows as unknown and only 2 drives are listed:

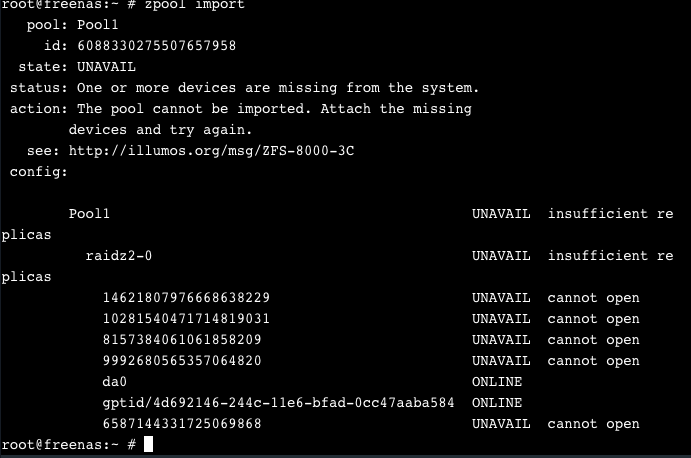

`zpool import` shows this:

Any idea what's wrong? I'm a complete noob when it comes to FreeNAS.

Thanks!
