Hello,

I recently replaced all 8 drives in my server with new ones (4TB HGST drives swapped for shucked 10TB Seagate Barracuda Pros).

I had to rebuild the entire Pool to change the block size to 4k, then replicated the data to the new drives. I did this using two chassis and two LSI cards because my primary chassis didn't have enough space.

I did see some odd device reset errors while the drives were split across the two chassis. I attributed them to the precarious nature of the setup, and the errors were on my backup chassis.

I completed the transfer and moved the new drives into the primary chassis. Everything was fine until last week, when I got a notification that the Pool was degraded.

DA7 was showing some read/write errors and was marked as degraded. I power cycled the server and reseated the drive; it booted up fine and the Pool was back to normal.

Fast forward to yesterday, when I received another notification that the Pool was degraded.

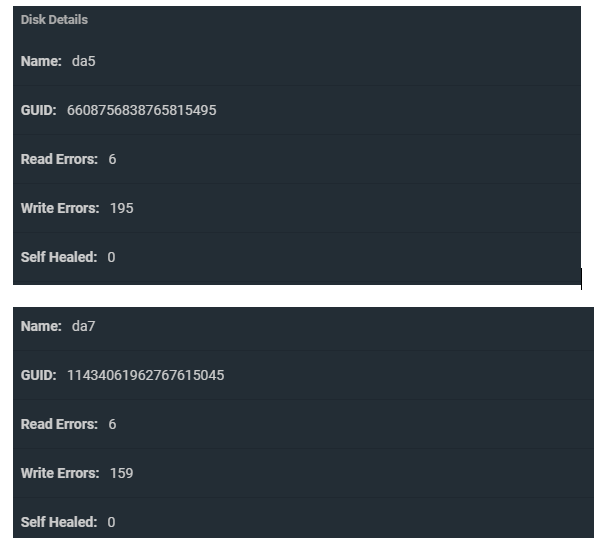

DA7 and DA5 both have read/write errors.

I have SMART tests scheduled and received no SMART warnings, so I checked for errors myself. Here is the output:


DA5

SMART Attributes Data Structure revision number: 10

Vendor Specific SMART Attributes with Thresholds:

| ID# | ATTRIBUTE_NAME | FLAG | VALUE | WORST | THRESH | TYPE | UPDATED | WHEN_FAILED | RAW_VALUE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Raw_Read_Error_Rate | 0x000f | 076 | 064 | 044 | Pre-fail | Always | - | 44632200 |
| 3 | Spin_Up_Time | 0x0003 | 090 | 090 | 000 | Pre-fail | Always | - | 0 |
| 4 | Start_Stop_Count | 0x0032 | 100 | 100 | 020 | Old_age | Always | - | 21 |
| 5 | Reallocated_Sector_Ct | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | - | 0 |
| 7 | Seek_Error_Rate | 0x000f | 084 | 060 | 045 | Pre-fail | Always | - | 234027207 |
| 9 | Power_On_Hours | 0x0032 | 098 | 098 | 000 | Old_age | Always | - | 1800 (122 111 0) |
| 10 | Spin_Retry_Count | 0x0013 | 100 | 100 | 097 | Pre-fail | Always | - | 0 |
| 12 | Power_Cycle_Count | 0x0032 | 100 | 100 | 020 | Old_age | Always | - | 20 |
| 184 | End-to-End_Error | 0x0032 | 100 | 100 | 099 | Old_age | Always | - | 0 |
| 187 | Reported_Uncorrect | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 188 | Command_Timeout | 0x0032 | 100 | 099 | 000 | Old_age | Always | - | 4295032833 |
| 189 | High_Fly_Writes | 0x003a | 069 | 069 | 000 | Old_age | Always | - | 31 |
| 190 | Airflow_Temperature_Cel | 0x0022 | 074 | 047 | 040 | Old_age | Always | - | 26 (Min/Max 24/28) |
| 191 | G-Sense_Error_Rate | 0x0032 | 093 | 093 | 000 | Old_age | Always | - | 15492 |
| 192 | Power-Off_Retract_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 13 |
| 193 | Load_Cycle_Count | 0x0032 | 095 | 095 | 000 | Old_age | Always | - | 11551 |
| 194 | Temperature_Celsius | 0x0022 | 026 | 053 | 000 | Old_age | Always | - | 26 (0 20 0 0 0) |
| 195 | Hardware_ECC_Recovered | 0x001a | 009 | 002 | 000 | Old_age | Always | - | 44632200 |
| 197 | Current_Pending_Sector | 0x0012 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 198 | Offline_Uncorrectable | 0x0010 | 100 | 100 | 000 | Old_age | Offline | - | 0 |
| 199 | UDMA_CRC_Error_Count | 0x003e | 200 | 200 | 000 | Old_age | Always | - | 0 |
| 200 | Multi_Zone_Error_Rate | 0x0023 | 100 | 100 | 001 | Pre-fail | Always | - | 0 |
| 240 | Head_Flying_Hours | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 880 (239 115 0) |
| 241 | Total_LBAs_Written | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 35954624018 |
| 242 | Total_LBAs_Read | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 40571654043 |

DA7

SMART Attributes Data Structure revision number: 10

Vendor Specific SMART Attributes with Thresholds:

| ID# | ATTRIBUTE_NAME | FLAG | VALUE | WORST | THRESH | TYPE | UPDATED | WHEN_FAILED | RAW_VALUE |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Raw_Read_Error_Rate | 0x000f | 076 | 064 | 044 | Pre-fail | Always | - | 38111312 |
| 3 | Spin_Up_Time | 0x0003 | 091 | 091 | 000 | Pre-fail | Always | - | 0 |
| 4 | Start_Stop_Count | 0x0032 | 100 | 100 | 020 | Old_age | Always | - | 14 |
| 5 | Reallocated_Sector_Ct | 0x0033 | 100 | 100 | 010 | Pre-fail | Always | - | 0 |
| 7 | Seek_Error_Rate | 0x000f | 081 | 060 | 045 | Pre-fail | Always | - | 122729332 |
| 9 | Power_On_Hours | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 571 (199 172 0) |
| 10 | Spin_Retry_Count | 0x0013 | 100 | 100 | 097 | Pre-fail | Always | - | 0 |
| 12 | Power_Cycle_Count | 0x0032 | 100 | 100 | 020 | Old_age | Always | - | 11 |
| 184 | End-to-End_Error | 0x0032 | 100 | 100 | 099 | Old_age | Always | - | 0 |
| 187 | Reported_Uncorrect | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 188 | Command_Timeout | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 189 | High_Fly_Writes | 0x003a | 081 | 081 | 000 | Old_age | Always | - | 19 |
| 190 | Airflow_Temperature_Cel | 0x0022 | 074 | 047 | 040 | Old_age | Always | - | 26 (Min/Max 24/28) |
| 191 | G-Sense_Error_Rate | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 1756 |
| 192 | Power-Off_Retract_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 2 |
| 193 | Load_Cycle_Count | 0x0032 | 100 | 100 | 000 | Old_age | Always | - | 1644 |
| 194 | Temperature_Celsius | 0x0022 | 026 | 053 | 000 | Old_age | Always | - | 26 (0 20 0 0 0) |
| 195 | Hardware_ECC_Recovered | 0x001a | 009 | 001 | 000 | Old_age | Always | - | 38111312 |
| 197 | Current_Pending_Sector | 0x0012 | 100 | 100 | 000 | Old_age | Always | - | 0 |
| 198 | Offline_Uncorrectable | 0x0010 | 100 | 100 | 000 | Old_age | Offline | - | 0 |
| 199 | UDMA_CRC_Error_Count | 0x003e | 200 | 200 | 000 | Old_age | Always | - | 0 |
| 200 | Multi_Zone_Error_Rate | 0x0023 | 100 | 100 | 001 | Pre-fail | Always | - | 0 |
| 240 | Head_Flying_Hours | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 483 (175 26 0) |
| 241 | Total_LBAs_Written | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 28520739476 |
| 242 | Total_LBAs_Read | 0x0000 | 100 | 253 | 000 | Old_age | Offline | - | 32704312746 |
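Some of the large raw values above (Raw_Read_Error_Rate, Seek_Error_Rate, and DA5's Command_Timeout) look alarming but are, on Seagate drives, commonly reported to be packed counters rather than plain counts. The sketch below decodes them under that assumption: for attributes 1 and 7, the upper 16 bits of the 48-bit raw value are taken as the error count and the lower 32 bits as the operation count; attribute 188 is treated as three 16-bit counters. These layouts are an assumption, not something the output above confirms, and the function names are hypothetical. smartctl's `-v 188,raw16` option should show the same three words directly.

```python
# Minimal sketch: decode Seagate-style packed SMART raw values.
# ASSUMPTION: bit layouts follow the widely reported Seagate conventions;
# they are not confirmed by the smartctl output in this post.


def split_error_rate(raw: int) -> tuple[int, int]:
    """Split an error-rate raw value (attributes 1 and 7).

    Assumed layout: upper 16 bits = error count, lower 32 bits = operation count.
    """
    errors = (raw >> 32) & 0xFFFF
    operations = raw & 0xFFFFFFFF
    return errors, operations


def split_command_timeout(raw: int) -> tuple[int, int, int]:
    """Split attribute 188 into three 16-bit counters, lowest word first."""
    return tuple((raw >> shift) & 0xFFFF for shift in (0, 16, 32))


# Raw values copied from the DA5 and DA7 tables above.
drives = {
    "DA5": {"read": 44632200, "seek": 234027207, "timeout": 4295032833},
    "DA7": {"read": 38111312, "seek": 122729332, "timeout": 0},
}

for name, attrs in drives.items():
    read_err, read_ops = split_error_rate(attrs["read"])
    seek_err, seek_ops = split_error_rate(attrs["seek"])
    timeouts = split_command_timeout(attrs["timeout"])
    print(f"{name}: read errors={read_err} (of {read_ops} ops), "
          f"seek errors={seek_err} (of {seek_ops} seeks), "
          f"timeout counters={timeouts}")
```

Under those assumptions, both drives decode to zero read and seek errors, and DA5's Command_Timeout value of 4295032833 is 1 in each of its three 16-bit words rather than billions of timeouts. UDMA_CRC_Error_Count (199) is 0 on both drives.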

Disk errors reported in FreeNAS:

I'm no expert with SMART data, but I don't see any read/write errors in it. What would cause ZFS to report read/write errors? Could it be the LSI card, or am I missing something? All the new drives are on the same LSI card (in IT mode) that worked fine with the old drives.

Thanks
