My snapshots are failing, allegedly due to lack of disk space:

I am trying to understand what is out of space (dataset, pool, etc?) and how to fix it. I have read documentation and many forum posts, but still find this aspect confusing.

My pool has 10x 6TB HUS726060AL5210 disks in a RAIDZ-2, so should have (10-2)x6TB=48TB usable capacity - layout below. The sole purpose of my FreeNAS box is iSCSI storage for my virtual machines. (Unfortunately I have named my pool and zvol dataset identically, which makes things confusing.)

How do I interpret this output? What is the pool, what is my zvol?

This shows plenty of free space in pool:

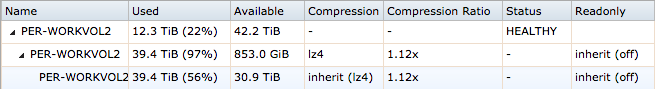

“View Volumes” in web config is even more confusing?

Questions:

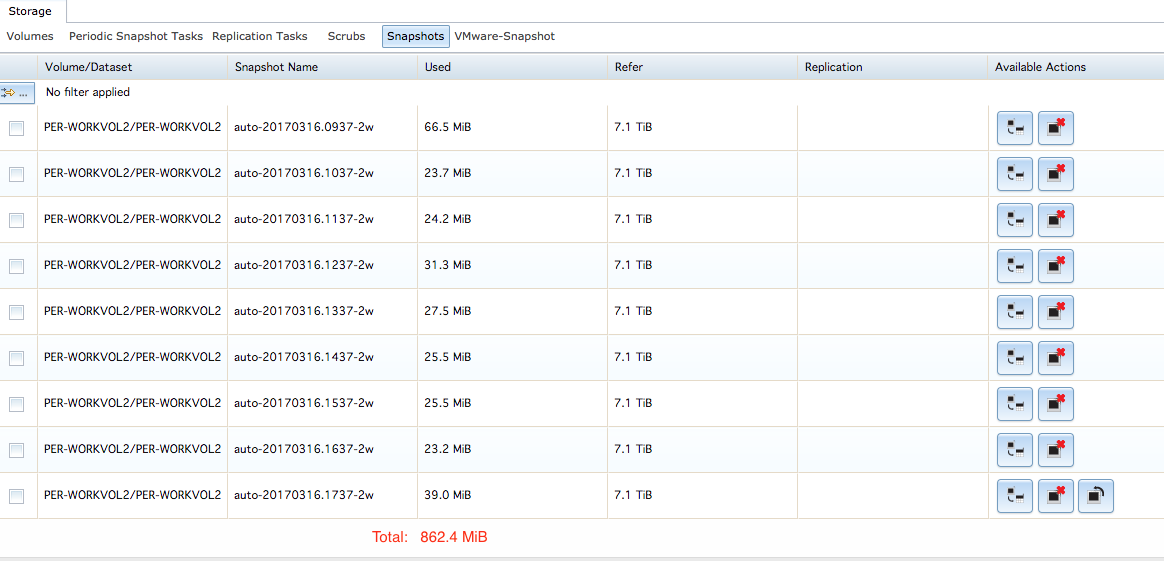

The 9x existing snapshots take up minimal space, only 862.4 MiB when I counted up the "Used" column:

Questions:

My hardware & version info:

Code:

Snapshot PER-WORKVOL2/PER-WORKVOL2@auto-20170329.1637-2w failed with the following error: cannot create snapshot 'PER-WORKVOL2/PER-WORKVOL2@auto-20170329.1637-2w': out of space

I am trying to understand what is out of space (dataset, pool, etc.) and how to fix it. I have read the documentation and many forum posts, but still find this aspect confusing.

My pool has 10x 6TB HUS726060AL5210 disks in a RAIDZ-2, so it should have (10-2) x 6TB = 48TB of usable capacity (layout below). The sole purpose of my FreeNAS box is iSCSI storage for my virtual machines. (Unfortunately, I gave my pool and my zvol dataset the same name, which makes things confusing.)
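A quick sanity check of that arithmetic in Python. This is only a sketch: the function name is illustrative, and it deliberately ignores any ZFS overhead (metadata, padding, reserved space), so it gives an upper bound rather than what ZFS will actually report.

```python
def raidz_capacity_tb(disks: int, parity: int, disk_tb: float) -> tuple:
    """Return (raw_tb, usable_tb) for one RAIDZ vdev, before any ZFS overhead."""
    raw = disks * disk_tb
    usable = (disks - parity) * disk_tb
    return raw, usable


# 10 disks of 6 TB each in RAIDZ-2 (two disks' worth of parity)
raw_tb, usable_tb = raidz_capacity_tb(disks=10, parity=2, disk_tb=6.0)
print(f"raw: {raw_tb:g} TB, usable before overheads: {usable_tb:g} TB")
```

Note that these are decimal terabytes as advertised by the disk vendor, not the binary units that the ZFS tools print.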

Code:

    # zpool status
      pool: PER-WORKVOL2
     state: ONLINE
      scan: scrub repaired 0 in 8h9m with 0 errors on Sun Mar 19 08:09:37 2017
    config:

        NAME                                            STATE   READ WRITE CKSUM
        PER-WORKVOL2                                    ONLINE     0     0     0
          raidz2-0                                      ONLINE     0     0     0
            gptid/e64c2232-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e6cda207-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e34f7114-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e73694ca-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e8437afc-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e8d23cef-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e95263d8-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/e9d66038-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/ea5f6d13-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/eaefd10c-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
        logs
          mirror-1                                      ONLINE     0     0     0
            gptid/ebe42886-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
            gptid/ec124b82-ef4a-11e6-8f69-0cc47ad8b846  ONLINE     0     0     0
        cache
          gptid/ebb23f0d-ef4a-11e6-8f69-0cc47ad8b846    ONLINE     0     0     0
        spares
          gptid/eb7fba38-ef4a-11e6-8f69-0cc47ad8b846    AVAIL

How do I interpret this output? What is the pool, what is my zvol?

Code:

    # zfs list
    NAME                                                            USED  AVAIL  REFER  MOUNTPOINT
    PER-WORKVOL2                                                   39.4T   853G   201K  /mnt/PER-WORKVOL2
    PER-WORKVOL2/.system                                            413M   853G   400M  legacy
    PER-WORKVOL2/.system/configs-eab18b758b91471d95803a91d80bfcda  7.09M   853G  7.09M  legacy
    PER-WORKVOL2/.system/cores                                      201K   853G   201K  legacy
    PER-WORKVOL2/.system/rrd-eab18b758b91471d95803a91d80bfcda       201K   853G   201K  legacy
    PER-WORKVOL2/.system/samba4                                     631K   853G   631K  legacy
    PER-WORKVOL2/.system/syslog-eab18b758b91471d95803a91d80bfcda   5.77M   853G  5.77M  legacy
    PER-WORKVOL2/PER-WORKVOL2                                      39.4T  30.9T  9.34T  -
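To compare these columns numerically, here is a small Python sketch that converts the size strings to bytes (ZFS prints binary units, so T means 2^40 bytes) and does some arithmetic on the zvol line. The helper names are made up for illustration. The interpretation in the comments is only one possible reading: it assumes the zvol carries a reservation, which this output does not show and which would have to be confirmed with something like `zfs get refreservation,usedbyrefreservation PER-WORKVOL2/PER-WORKVOL2`.

```python
# Binary multipliers used by the zfs/zpool human-readable output
UNITS = {"K": 2**10, "M": 2**20, "G": 2**30, "T": 2**40}


def to_bytes(size: str) -> float:
    """Convert a zfs-style size such as '853G' or '9.34T' to bytes."""
    return float(size[:-1]) * UNITS[size[-1]]


def to_tib(n_bytes: float) -> float:
    """Convert bytes to TiB (the 'T' shown by zfs list)."""
    return n_bytes / 2**40


# Values copied from the zfs list output above
zvol_used, zvol_refer, zvol_avail = "39.4T", "9.34T", "30.9T"
pool_avail = "853G"

# Space charged to the zvol beyond what it currently references.
# Assumption: this could be reserved-but-unwritten space.
unreferenced = to_bytes(zvol_used) - to_bytes(zvol_refer)
combined = unreferenced + to_bytes(pool_avail)

print(f"zvol USED - REFER:              {to_tib(unreferenced):.2f} T")
print(f"zvol USED - REFER + pool AVAIL: {to_tib(combined):.2f} T")
print(f"zvol AVAIL as reported:         {to_tib(to_bytes(zvol_avail)):.2f} T")
```

If the last two figures come out close to each other, that would at least be consistent with most of the 39.4T being reserved for the zvol rather than written, leaving only 853G for everything else in the pool, snapshots included. It does not prove it.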

This shows plenty of free space in the pool:

Code:

    # zpool iostat
                     capacity     operations    bandwidth
    pool          alloc   free   read  write   read  write
    ------------  -----  -----  -----  -----  -----  -----
    PER-WORKVOL2  12.3T  42.2T     95    124  2.34M  2.49M
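One check worth doing on these two numbers is to add them up and compare the total with the raw size of the ten disks. A minimal Python sketch, assuming the disks are exactly 6 x 10^12 bytes each (the real drives may differ slightly):

```python
TIB = 2**40  # bytes per TiB

# alloc and free as printed by zpool iostat above (binary units)
alloc_tib, free_tib = 12.3, 42.2
reported_total = alloc_tib + free_tib

# Raw size of all ten disks, parity included, converted to TiB
raw_tib = 10 * 6e12 / TIB

print(f"alloc + free:      {reported_total:.1f} TiB")
print(f"10 x 6 TB (raw):   {raw_tib:.2f} TiB")
```

The two totals land very close together, which suggests the zpool figures count raw disk space with parity included, so the "free" value here is not directly comparable with the AVAIL column of zfs list.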

“View Volumes” in the web config is even more confusing:

Questions:

- I assume the 1st line refers to the pool? If so, what does 42.2 TiB available refer to? Is it the total capacity after subtracting RAIDZ-2 parity? If so, why 42.2 TiB? 48TB (8x6TB) = 43.7 TiB. Is the missing 1.5 TiB simply ZFS overhead? Or is this minus snapshot usage? Or do I simply put it down to actual capacity being slightly less than advertised?

- What on earth do the second and third lines refer to? All I have is a single zvol for iSCSI storage.
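The TB to TiB conversion behind the first question can be reproduced in a few lines of Python. This only checks the unit conversion (the function name is illustrative); it says nothing about what the 42.2 TiB in the GUI actually measures.

```python
def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10^12 bytes) to binary tebibytes (2^40 bytes)."""
    return tb * 10**12 / 2**40


usable_tib = tb_to_tib(8 * 6)   # 8 data disks x 6 TB
gap_tib = usable_tib - 42.2     # versus the 42.2 TiB shown as available

print(f"48 TB = {usable_tib:.2f} TiB")
print(f"difference from 42.2 TiB: {gap_tib:.2f} TiB")
```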

The 9x existing snapshots take up minimal space, only 862.4 MiB when I counted up the "Used" column:

Questions:

- What does the "Refer" column mean?

- If I delete, say, the first snapshot, does it free up only the 66.5 MiB, or 7.1 TiB, or the sum of 66.5 MiB + 7.1 TiB?

- Which line of the "View Volumes" screenshot further above shows the space consumed by snapshots: the 1st, 2nd or 3rd?

- How do I fix my failing snapshots?

My hardware & version info:

- Build: FreeNAS-9.10.2 (a476f16)

- Supermicro MBD-X10DRI-O board

- 8x Samsung 16GB ECC DDR4 2133 RDIMMs = 128GB RAM

- 1x Intel Xeon E5-2620v4 2.1GHz

- 1x SAS9207-8i HBA