Stux (MVP, joined Jun 2, 2016, 4,419 messages)

So, I've got a toy/test system that I've been seeing poor performance on.

Finally got all the data off and decided to do some destructive testing with badblocks.

It's a Core 2 Extreme QX9650 (Intel DX38BT), i.e. a platform with the notorious FSB bottleneck... I think I have a very graphic example of a bottleneck, if not the FSB bottleneck itself.

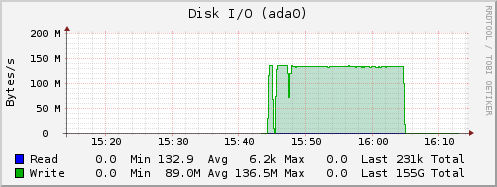

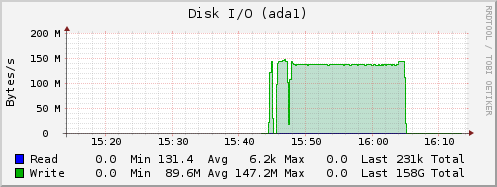

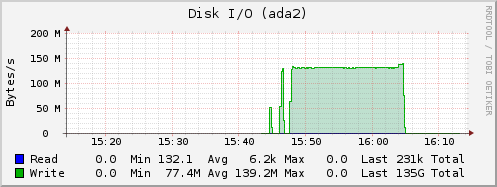

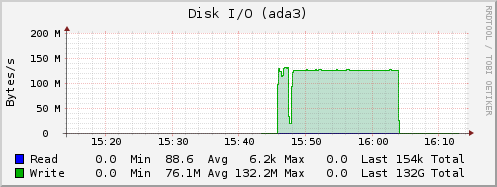

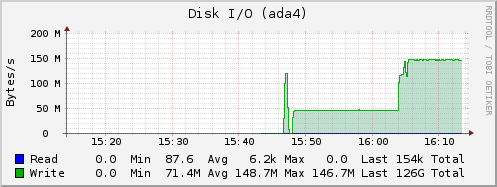

So, I was running badblocks across all five 3TB disks. Excuse the blips at the beginning; that was me starting and stopping things. The first four drives were sustaining circa 140-150MB/s quite nicely, but the fifth was only doing circa 45MB/s.
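The post doesn't say which tool drew the graphs. As a hedged sketch, assuming a Linux host (the OS isn't stated here), per-disk rates like these can be computed by sampling the sector counters in `/proc/diskstats` twice and dividing the difference by the interval. The helper names `parse_diskstats`, `throughput_mb_s` and `sample_disks` are hypothetical.

```python
import time

SECTOR_BYTES = 512  # /proc/diskstats counts 512-byte sectors regardless of the disk's sector size


def parse_diskstats(text):
    """Map device name -> (sectors_read, sectors_written) from /proc/diskstats-format text."""
    stats = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip blank or malformed lines
        # fields: major, minor, name, reads, reads_merged, sectors_read,
        #         ms_reading, writes, writes_merged, sectors_written, ...
        stats[fields[2]] = (int(fields[5]), int(fields[9]))
    return stats


def throughput_mb_s(before, after, interval_s):
    """Per-device (read MB/s, write MB/s) between two samples taken interval_s apart."""
    rates = {}
    for name, (r0, w0) in before.items():
        if name in after:
            r1, w1 = after[name]
            rates[name] = (
                (r1 - r0) * SECTOR_BYTES / interval_s / 1e6,
                (w1 - w0) * SECTOR_BYTES / interval_s / 1e6,
            )
    return rates


def sample_disks(interval_s=5.0, path="/proc/diskstats"):
    """Take two samples interval_s apart (Linux only) and return per-device rates."""
    with open(path) as f:
        before = parse_diskstats(f.read())
    time.sleep(interval_s)
    with open(path) as f:
        after = parse_diskstats(f.read())
    return throughput_mb_s(before, after, interval_s)
```

Calling `sample_disks()` while badblocks runs and printing the `sd*` entries would show each drive's read and write rate over the sampling window; since badblocks alternates write and read passes, one of the two columns will usually dominate.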

After a WTF moment, I killed badblocks on the first four drives to focus on the fifth (perhaps it was a dodgy SATA cable etc.), and while I was checking whether the drive was still under warranty... what do you know, it immediately shot up to 150MB/s.

So, my conclusion is that the system can't sustain more than about 650MB/s to the disks.
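A quick sanity check of the ~650MB/s figure using the approximate per-drive rates quoted above: the sum of the concurrent rates gives the observed ceiling, and five times the solo rate shows what the drives would want if nothing were shared. The numbers are eyeballed from the graphs, so treat the result as a range, not a measurement.

```python
# Approximate per-drive rates read off the graphs in the post (MB/s).
HEALTHY_LOW, HEALTHY_HIGH = 140, 150  # each of the first four drives
STARVED = 45                          # the fifth drive while all five ran
SOLO = 150                            # the fifth drive once it ran alone


def aggregate(per_drive):
    """Total throughput across drives running concurrently."""
    return sum(per_drive)


low = aggregate([HEALTHY_LOW] * 4 + [STARVED])
high = aggregate([HEALTHY_HIGH] * 4 + [STARVED])
unconstrained = aggregate([SOLO] * 5)

print(f"observed aggregate: {low}-{high} MB/s")                  # 605-645 MB/s
print(f"demand if no drive were starved: {unconstrained} MB/s")  # 750 MB/s
```

The gap of roughly 100-150MB/s between the two totals is what the fifth drive was being denied.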

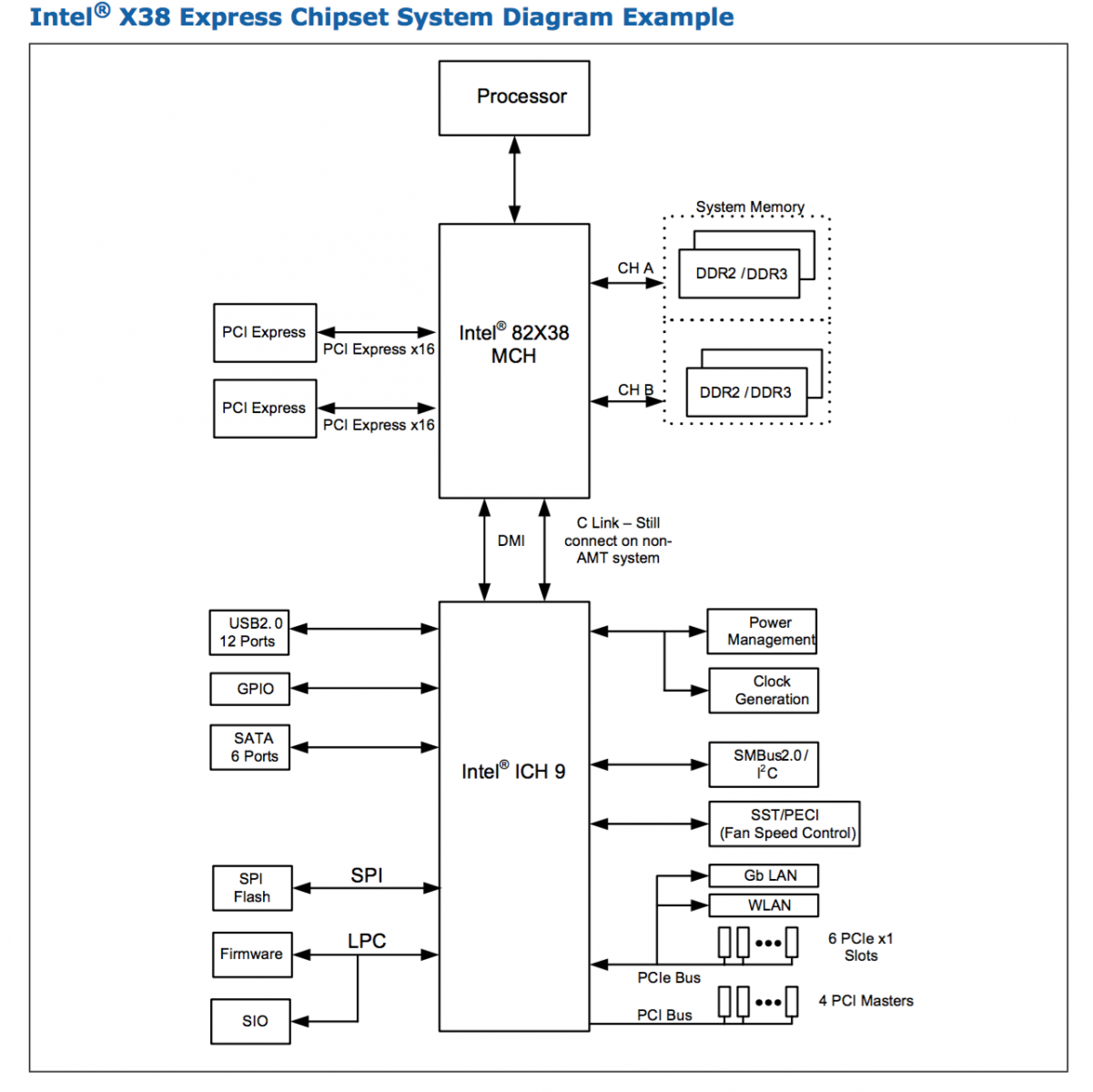

Of course, it's probably not the FSB. It's more likely the DMI bus that is saturating.

The six onboard SATA ports, the gigabit links and, in fact, everything else on the ICH9 southbridge reach the X38 northbridge (and from there the CPU, over the FSB) via DMI.

From the datasheet:

"2GB/s point-to-point DMI to ICH9 (1GB/s each direction)"

So DMI is limited to 1GB/s in each direction.

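This system's x16 slots are PCIe 2.0, which is 500MB/s per lane, and the datasheet says DMI is PCIe-based, so my first guess was 2 lanes of PCIe 2.0. In fact DMI 1.0 is a x4 link running at PCIe 1.x rates (2.5GT/s, 250MB/s per lane per direction), which works out to the same 1GB/s each way between the ICH9 and the northbridge.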
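The 1GB/s figure falls out of lane count times per-lane rate. Two configurations give the same nominal number: two lanes at PCIe 2.0's 5GT/s, and four lanes at PCIe 1.x's 2.5GT/s (the latter is how DMI 1.0 is usually described). A minimal sketch of the arithmetic, assuming 8b/10b line encoding as used by PCIe 1.x and 2.0; `link_bandwidth_mb_s` is a hypothetical helper. These are raw per-direction rates before packet and protocol overhead, so real transfers come in lower, which plausibly accounts for part of the gap between 1GB/s and the ~650MB/s observed.

```python
def link_bandwidth_mb_s(lanes, gt_per_s, encoding=(8, 10)):
    """Nominal payload MB/s per direction for a PCIe-style serial link.

    gt_per_s: transfers per second per lane, in GT/s.
    encoding: (payload bits, line bits); PCIe 1.x and 2.0 use 8b/10b.
    """
    payload, line = encoding
    bits_per_s = lanes * gt_per_s * 1e9 * payload / line
    return bits_per_s / 8 / 1e6  # bits -> bytes -> MB


print(link_bandwidth_mb_s(2, 5.0))  # two PCIe 2.0 lanes: 1000.0
print(link_bandwidth_mb_s(4, 2.5))  # x4 at PCIe 1.x rate (DMI 1.0): 1000.0
```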

Of course, maybe it's the SATA controller that is saturating...

The good news, if I cared, is that the two x16 slots hang off the northbridge rather than the ICH9, so an HBA in one of them would bypass DMI and could solve that issue.

And of course, the other bottleneck is that I believe this is all SATA1, i.e. limited to 150MB/s per port anyway.

These are just 3TB WD Reds... nothing special, but even they saturate SATA1.
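Where the 150MB/s SATA1 ceiling comes from: SATA's 1.5Gb/s line rate uses 8b/10b encoding, so each data byte costs 10 bits on the wire. A minimal sketch of that arithmetic (`sata_payload_mb_s` is a hypothetical helper); it ignores framing and command overhead, so the practical ceiling is a little lower still.

```python
def sata_payload_mb_s(line_rate_gbps):
    """Nominal payload MB/s for a SATA link; SATA 1.5, 3 and 6 Gb/s all use 8b/10b."""
    bits_per_s = line_rate_gbps * 1e9
    return bits_per_s / 10 / 1e6  # 10 line bits carry one 8-bit data byte


for gen, rate in (("SATA1", 1.5), ("SATA2", 3.0), ("SATA3", 6.0)):
    print(f"{gen}: {sata_payload_mb_s(rate):.0f} MB/s")
```

A drive sustaining 140-150MB/s sequentially therefore sits right at the SATA1 limit.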

A good reason why anything older than Nehalem is a dead end.
