Hello all,

I have set up FreeNAS on an HP Blade BL 460 G1 with 2 x quad-core Xeon CPUs and 64 GB RAM. The blade has an HP EVA enclosure with 14 x 500 GB 15k disks directly attached through an optical cable.

We created one big datastore with lz4 compression and share type "windows", then created an iSCSI target and gave all of this storage to a Hyper-V cluster. The iSCSI connectivity from the cluster to FreeNAS is 1 Gbit per node.

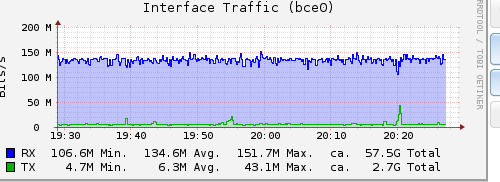

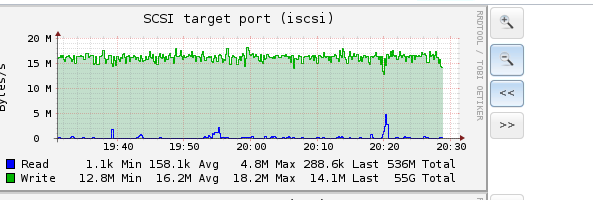

The problem right now is very low performance. We run at most about 20 VMs simultaneously, and they are very slow to respond, to boot, and so on. Is there some way to see the storage latency in FreeNAS, or the total IOPS of the system? In Reporting I can see CPU at about 15-20% max on average, and wired memory at 58.9 G. The strange part is the network traffic graph, which is maxed at 150 Mbit all the time, so I think something is going on there. It is as if it cannot go any higher and tops out at that value.
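To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes decimal units (1 Gbit = 1000 Mbit), that the 150 Mbit ceiling is shared evenly by all 20 VMs, and it ignores iSCSI/TCP overhead, so treat the result as an estimate only. The variable names are just for illustration.

```python
# Rough estimate only: decimal units, even split across VMs,
# no allowance for iSCSI/TCP protocol overhead.

def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert megabits per second to megabytes per second."""
    return mbit_per_s / 8.0

observed_mbit = 150.0   # ceiling seen in the network traffic graph
link_mbit = 1000.0      # 1 Gbit iSCSI link per node
vm_count = 20           # maximum number of VMs running at once

observed_mbyte = mbit_to_mbyte(observed_mbit)   # total throughput in MB/s
per_vm_mbyte = observed_mbyte / vm_count        # even share per VM in MB/s
link_utilisation = observed_mbit / link_mbit    # fraction of one link in use

print(f"Total throughput : {observed_mbyte:.2f} MB/s")
print(f"Per VM (even)    : {per_vm_mbyte:.2f} MB/s")
print(f"Link utilisation : {link_utilisation:.0%}")
```

So at that ceiling the whole cluster would be sharing roughly 19 MB/s, which is under 1 MB/s per VM if all 20 are busy, while a single 1 Gbit link is only about 15% used.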

Any ideas?

Thanks a lot
