titanve (Explorer, joined Sep 12, 2018, 52 messages)

Hello everyone,

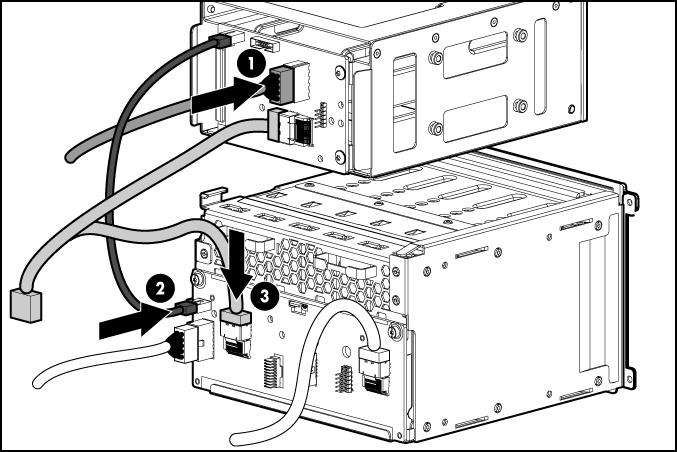

My FreeNAS box reboots randomly when I back up my VMs over iSCSI with vSphere Data Protection. I added the device to the ESXi host without trouble, and I have two RJ45 crossover cables connecting the FreeNAS box directly to the ESXi host. I wonder if I'm missing some network configuration. I set the MTU to 9000 as suggested in a tutorial; is that OK?

I'm attaching some images so you can see my setup and how it behaves during a backup. FreeNAS runs on an HP ML350 G6 server with 24 GB of RAM. Both NICs are gigabit LAN (GLan).

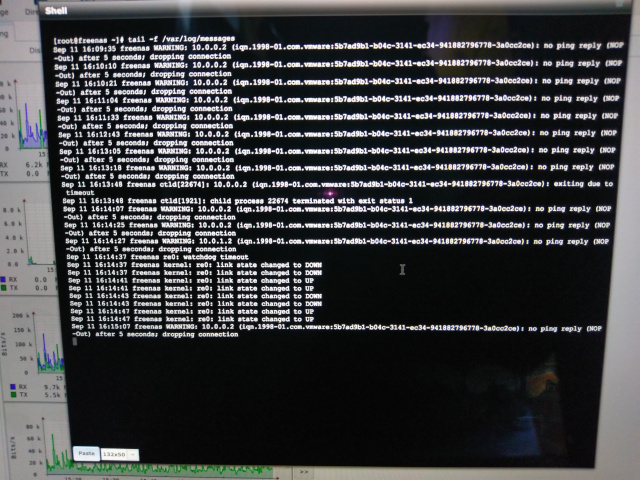

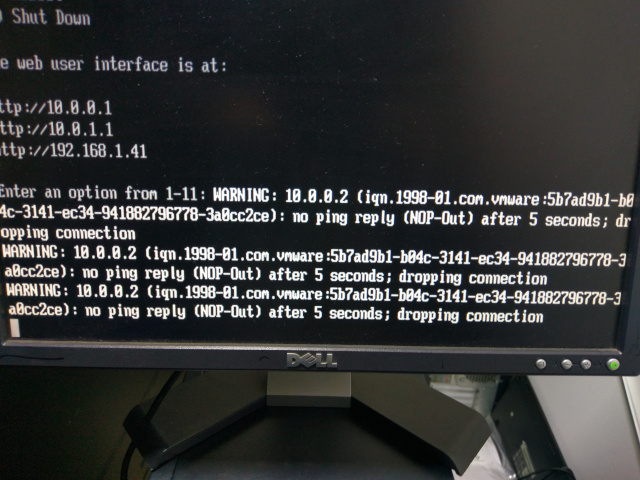

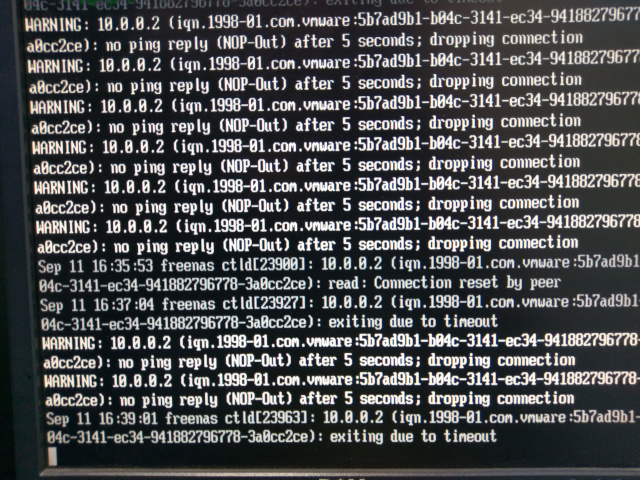

As you can see in the pictures, the link on both NICs sometimes goes down.

I tested RAM, CPU, and boot, and they are OK. My RAID controller is the HP Smart Array P410i, configured as RAID 10.

Network description:

FREENAS ESXi

10.0.0.1/24 <-----> 10.0.0.2/24 both MTU 9000

10.0.1.1/24 <-----> 10.0.1.2/24 both MTU 9000
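
One way to check whether MTU 9000 really works end to end on each link is a ping with the don't-fragment bit set and a payload sized to fill the frame. Below is a minimal sketch that works out that payload size and prints the commands for the two links above. It assumes plain IPv4 with no IP options (20-byte header) and an 8-byte ICMP echo header; the helper names are made up for illustration. The `ping -D -s` form is the FreeBSD one (FreeNAS side) and `vmkping -d -s` is the ESXi one.

```python
import ipaddress

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes, ICMP echo request header

# The two point-to-point links from the description: (FreeNAS, ESXi)
LINKS = [
    ("10.0.0.1/24", "10.0.0.2/24"),
    ("10.0.1.1/24", "10.0.1.2/24"),
]


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def same_subnet(a: str, b: str) -> bool:
    """True if both interface addresses fall in the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


def ping_commands(mtu: int = 9000) -> list[str]:
    """Build don't-fragment ping commands for both ends of each link."""
    size = max_icmp_payload(mtu)
    commands = []
    for freenas, esxi in LINKS:
        if not same_subnet(freenas, esxi):
            raise ValueError(f"{freenas} and {esxi} are not in the same subnet")
        freenas_ip = ipaddress.ip_interface(freenas).ip
        esxi_ip = ipaddress.ip_interface(esxi).ip
        # Run on FreeNAS (FreeBSD ping: -D sets don't-fragment, -s sets payload size)
        commands.append(f"ping -D -s {size} {esxi_ip}")
        # Run on ESXi (vmkping: -d sets don't-fragment, -s sets payload size)
        commands.append(f"vmkping -d -s {size} {freenas_ip}")
    return commands


if __name__ == "__main__":
    for command in ping_commands():
        print(command)
```

If these pings fail while a smaller payload (for example 1472, which fits a 1500-byte MTU) gets through, then some part of the path is not passing jumbo frames. That alone would not explain the reboots, but it would show the MTU 9000 setting is not consistent on both ends.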

Thanks for your help
