Killer-Bimmer

Cadet

- Joined: Oct 26, 2019

- Messages: 8

Cheers All! First Post, but long-time lurker; have posted on FB page a few times…

Appreciate the community and all the knowledge, especially from the long-time members! Sometimes your patience is awe-inspiring lol… Hopefully I don’t try it here, but I probably will.

I’ve been running a system and learning along the way for some time, but still feel ignorant compared to some here.

My current system is a Family/Work/Pleasure ESXi bare metal setup:

- MB: X11SPM-TPF

- CPU: 4114

- Ram: 192 GB (6x32GB)

- Drive(s):

- X4 4TB Red Pro’s (Pool-1) Z1

- X4 6TB Red Pro’s (Pool-2) Z1

- X1 4TB Purple

- X1 512GB Samsung Pro NVMe

- X2 128GB Supermicro SATADom’s

- X1 10Gb Fiber with x1 1Gb fail-over

- Going to add a larger 10Gb/s switch: US-16-XG-US

- All in a Fractal Node 804
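For anyone who wants the numbers, here is a rough back-of-the-envelope sketch of what the two current pools provide. It assumes "Z1" means a single RAIDZ1 vdev per pool and uses the simple (drives - parity) x size estimate; the function name is only for illustration, and real usable space will be lower once ZFS overhead and free-space headroom are counted.

```python
def raidz_usable_tb(drives: int, drive_tb: float, parity: int) -> float:
    """Approximate usable space of one RAIDZ vdev in TB.

    Simple estimate: (drives - parity) * drive size. It ignores ZFS
    metadata, padding, and free-space headroom, so treat it as an
    upper bound rather than what the pool will actually report.
    """
    if drives <= parity:
        raise ValueError("a RAIDZ vdev needs more drives than parity")
    return (drives - parity) * drive_tb


# Current pools from the list above (RAIDZ1 = one drive of parity).
pool1_tb = raidz_usable_tb(drives=4, drive_tb=4, parity=1)
pool2_tb = raidz_usable_tb(drives=4, drive_tb=6, parity=1)

print(f"Pool-1: ~{pool1_tb} TB usable, tolerates 1 drive failure")
print(f"Pool-2: ~{pool2_tb} TB usable, tolerates 1 drive failure")
print(f"Combined: ~{pool1_tb + pool2_tb} TB usable")
```

Either pool is lost if a second drive in it fails before a resilver completes, which is the main reason for the planned move to Z2.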

This is my first fully operational system that is on 24/7 and utilized for very mixed use:

- VM’s:

- NextCloud (Internal and about 20 external users)

- 7.5TB of current Data and growing

- NFS & SMB

- Also serves as a customer product information and instructional video access (intermittent use)

- Family Lineage vault for external family sharing and external family data uploads (very intermittent use, but when accessed data uploads and downloads are large i.e. 1,000’s of photos and multiple video up/down)

- Obviously FreeNAS with Intel x8 AHCI controller I/O pass through

- Two pools because I had x4 4TB from a WD PR4100 setup then purchased the x4 6TB because I wanted more storage.

- Windows 10

- Plex (about 5 intermittent external users)

- 15TB of current data

- Central Windows Machine Back-up and file history

- Five machines

- Central File server

- 2TB

- ESXi sSATA controller serving the 4TB Purple to Windows

- Used for HDHomerun DVR with original goal for security system as well

- HDHomerun

- Heavy use and a mother-in-law that keeps her TV on 24/7

- She’s definitely tested my 24/7 wireless data reliability

- Syncthing

- Primarily very large file sharing between my brother and me (don’t ask what)

- NFS disk and multiple SMB’s

- Ubuntu, but more as a test VM for learning by breaking things

- Plan for more VMs for other work-related usage; more on that another time

Here is where I’m at: My NextCloud Family Lineage site has been more popular than I anticipated, and I now have documents and photos that go back to the early 1800s and early 1900s. I’m adding more members pretty regularly and data is growing pretty fast, with about 3TB added since the initial load this year. This is the critical data that I want to keep secure, with long-term degradation minimized.

Work data is important, and speed of access is critical, but this is replicated data that can be recovered easily.

Next is the pleasure part: I travel all the time, so data access and ease of access are important. This has been great, but honestly the heavy hitter on resources is really just Plex transcoding when I’m away. The other hit is when I’m moving large amounts of data; I’m highly impatient, so speed is very important. My workstation is already connected to my 10Gb switch and speed is great, but performance across FreeNAS NFS/ESXi/Windows was poor, i.e. no SLOG.
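To put the "impatient" part in numbers, here is a minimal sketch of best-case transfer times on the 10Gb link versus the 1Gb fail-over link. The 1 TB example size is hypothetical, and the math assumes pure line rate with no protocol overhead and disks that can keep up, so real transfers will be slower.

```python
def transfer_seconds(data_tb: float, link_gbps: float) -> float:
    """Best-case time to move data_tb (decimal TB) over a link_gbps link."""
    bits = data_tb * 1e12 * 8          # TB -> bytes -> bits
    return bits / (link_gbps * 1e9)    # Gb/s -> bits per second


EXAMPLE_TB = 1.0  # hypothetical transfer size, not a figure from my setup

for name, gbps in (("10Gb fiber", 10), ("1Gb fail-over", 1)):
    minutes = transfer_seconds(EXAMPLE_TB, gbps) / 60
    print(f"{name}: at least {minutes:.1f} minutes for {EXAMPLE_TB} TB")
```

Anything much slower than this on the 10Gb path points at the storage side (for example sync writes over NFS without a SLOG) rather than the network.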

I've learned a lot and know that the current system is compromised on pool setup and other areas, but here is my plan to resolve it:

- Move current system to a 1U for vSphere Management (Have Essentials License) console and possibly noncritical operations

- Adding a 2U Storage Server ESXi bare metal

- Improve pool set-up and increase failure tolerance from Z1 to Z2

- Fix NFS/ESXi issues with proper SLOG

- Add ability for hardware fail-over

1U Chassis: Supermicro BPN-SAS3-815TQ

- Move/add the 6TB drives and other hardware from above

- Reduce to 64GB Ram (x2 32GB)

- Move to a Supermicro Passive Cooler: SNK-P0067PS

- 4TB and WD PR4100 goes to brother

- Noctua Cooler, PS and Case to be sold

2U Chassis: Supermicro SC826BE2C_R920LPB:

Chose the SC826BE2C_R920LPB due to the dual 12Gb/s backplane for future data expansion ability

- Motherboard: X11SPH_nCTPF

- CPU: Silver 4214

- Been happy with the 4114; the 4214 just adds some capability and is close to the cost of the original 4114

- Supermicro 2U Active Cooler: SNK-P0068APS4

- RAM: 192GB (x6 32GB) DDR4 ECC Registered, rated at 2,666 but will operate at 2,400

- Only had to purchase x2 sticks

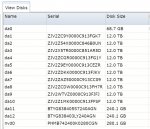

- Drives: x10 12TB Seagate Exos X12 nearline SAS 12Gb/s: ST12000NM0027

- SAS3008 passed directly to FreeNAS

- These will be used for a single Z2 Pool

- $38.90/TB

- NVMe 1TB Samsung Pro; primarily for Windows VM

- SATADom’s: Boot devices for ESXi and FreeNAS and other OS that are not data intensive VM’s

- SLOG: Haven’t purchased yet. This is where I need help: I looked at the P900, but I want PLP, so I’m looking at the DC P4800X (the P900 costs much less, but seems pointless with no end-to-end protection or PLP). Other suggestions?

- L2ARC: Don’t believe I need it for my use, but the P900 seems OK here; from my understanding, losing it is not detrimental because it only serves reads
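As a quick sanity check on the planned pool, here is a rough sketch of the capacity and drive cost. It assumes all ten drives go into one RAIDZ2 vdev, that the $38.90/TB figure is per raw TB, and it uses the simple (drives - parity) x size estimate, so actual usable space after ZFS overhead will be lower.

```python
DRIVES = 10            # x10 Exos X12
DRIVE_TB = 12          # 12TB each
PARITY = 2             # RAIDZ2 = two drives' worth of parity
PRICE_PER_TB = 38.90   # assumed to be per raw TB

raw_tb = DRIVES * DRIVE_TB
usable_tb = (DRIVES - PARITY) * DRIVE_TB   # upper bound, ignores overhead

cost_per_drive = PRICE_PER_TB * DRIVE_TB
total_cost = PRICE_PER_TB * raw_tb
cost_per_usable_tb = total_cost / usable_tb

print(f"Raw capacity:      {raw_tb} TB")
print(f"Usable (approx.):  {usable_tb} TB, tolerates {PARITY} drive failures")
print(f"Cost per drive:    ${cost_per_drive:.2f}")
print(f"Total drive cost:  ${total_cost:.2f}")
print(f"Cost per usable TB: ${cost_per_usable_tb:.2f}")
```

A single wide Z2 vdev gives the most space for the money; the trade-off is that the whole pool has roughly the random IOPS of one vdev, which matters more for the VM/NFS side than for Plex or NextCloud bulk storage.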

Obviously open to suggestions and comments and I thank all those that have spent so much time helping others!

Cheers