I think there's a slight chance I'm going crazy here, given how much I've searched for an answer to this.

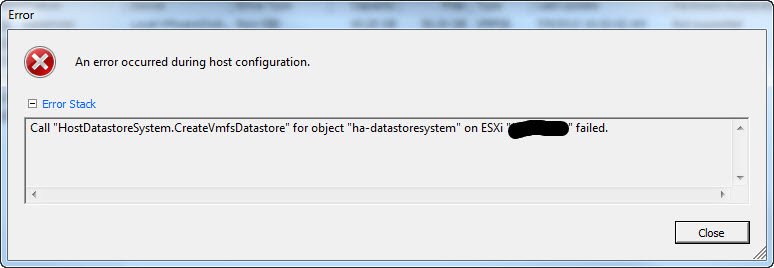

I've made a ZFS raid volume on FreeNAS (tried both file and device extents) based on various guides on the web, tutorials, etc. I've made the iSCSI target, mapped extent to the target, all that fun stuff. ESXi host can see the target and even report the size of the LUN, however when I try to create a VMFS datastore on the LUN I get an error. Shown here:

Now, the best I can gather from researching this error online is that VMware cannot make heads or tails of the file system and therefore will not create a VMFS datastore on it. I'm stumped as to how to format the file system on the FreeNAS box into something VMware can actually use, so that I can move on with this project. None of the YouTube videos I've watched on using VMware with FreeNAS and iSCSI show anyone having this problem, and I'm pretty certain I'm following the directions correctly.

Before anyone asks:

- This is not a main production environment; we have Dell EqualLogic SANs for that.
- I've gone over the guide, scouring it for any VMware or iSCSI pointers.

Many thanks for any assistance.