The $1,000,000 question I can't find the answer to is: "Does ESXi support TRIM, and does it actually function?" For a product this expensive, with this much documentation (notice I didn't say well documented), you'd expect that if TRIM really were supported there would be links to documentation clearly confirming that TRIM is enabled and works. So I'm expecting to find that TRIM is not supported in ESXi.

*NOTE*: The remainder of this post is rather technical. Because I have an engineering background, I'll be providing far more detail than may be needed. This is to allow others to reproduce my work if they desire, to question whether my hardware is suitable for the intended testing, and/or to dispute my findings. If you don't want to read about the testing itself, feel free to skip to the CONCLUSION section of this post. If you do read through this whole page, I highly recommend you read the links I provide.

*NOTE*: Much of this information applies to all SSDs regardless of brand or model. Some of it may apply only to Intel SSDs, or maybe even only to specific Intel SSDs with specific firmware. You are encouraged to verify any information I provide to ensure it applies to your SSD.

*DISCLAIMER*: If you've read my stuff on the forum, you probably know I'm an advocate of SSDs, and in particular Intel SSDs. I only use Intel SSDs in my HTPC, desktop, laptop, etc. Only my FreeNAS server has spinning rust drives; the rest are all solid state. I've never had one fail, and they've always performed to my expectations. Obviously drives do fail, but I consider Intel to be the 'cream of the crop' with regard to reliability. This is a personal choice based on my reading. You are encouraged to form your own opinion based on all available information.

Well, the question seems easy, but finding the answer is hard. Some quick Googling turned up a few webpages from people who attempted to solve this problem. The first link I found that looked promising was http://www.v-front.de/2013/10/faq-using-ssds-with-esxi.html. Mr. Andreas Peetz gave a fairly comprehensive explanation of TRIM, but unfortunately he found no answers. He discussed some details and came to the conclusion of "Who knows?" If you read through it (and you should if you haven't yet) you'll see him mention background Garbage Collection (GC). Garbage Collection is when a drive's firmware examines the file system for empty blocks and basically does its own internal TRIMming independent of the system itself. The firmware must understand the file system, though. Since ESXi isn't a common platform for SSDs, I'm not going to bet on GC functioning. VMFS is unique to VMware's one product, and from past experience with GC years ago, the only file systems I worked with that supported GC were FAT32 and NTFS. Sorry Linux/FreeBSD/anyone else; you're very likely out in the cold.

Now obviously there's a very distinct possibility that the drive is using GC. If it is, the distinction won't matter in practice, because we'll get the expected result regardless of whether GC or TRIM is responsible. Even if neither GC nor TRIM works in this scenario, there are some things we can do to help prolong a drive's life anyway; I'll get to those in the conclusion section. I also did some Googling for information on which file systems are supported by SandForce controllers that claim to do garbage collection, and that information is impossible to find. Companies seem hellbent on telling you about the feature, but then provide no tangible information so you can determine if their product is right for you.

This isn't the first time an industry has held back information that an informed user/owner might need, and it certainly won't be the last. There's plenty of product propaganda for potential buyers, but almost no information for those who are detail oriented. It's just another reason why I have never been particularly fond of SandForce. Any time I've wanted details on how their drives work internally, I've been disappointed by the lack of detail. Intel is very secretive too, but they are more open than SandForce has ever been.

So how does TRIM work?

At the lowest level, TRIM simply informs an SSD that it can erase particular memory pages that are no longer storing your data. Normally, when a file is deleted from your partition, the file system simply marks those sectors as available again. For platter-based drives this is normal and has been the case for decades. This is why you can often run tools like R-Studio, Ontrack data recovery software, or other "undelete" tools to recover those old files.

However, SSDs have a problem that is unique to NAND flash memory, including the MLC flash used in most consumer SSDs: an erase cycle takes significant time to perform, and before any write can be made to a particular location on your SSD, that location must be erased. (Flash is written in pages but erased in larger blocks, which makes erasing even more expensive.) The first generation of SSDs had horrible random write performance because writes had to be preceded by an erase, and the erase carried a significant performance penalty. The solution is to erase pages that aren't storing data ahead of time, so you always have freshly erased memory cells ready to store your data. The erase itself is internal to the SSD. Without TRIM, then, an SSD could be a poor choice if performance mattered at all. But people gladly spent huge amounts of money on them anyway (I even bought a deeply discounted 32GB drive for $200 years ago, the OCZ Solid, which is now in my pfSense box since it's useless for anything else). Not all of the empty space will be in an erased state, but as long as you never run out of erased memory, everything is fine and your SSD will perform acceptably.

Another trick that SSDs use to ensure there is always erased space is spare capacity. Every SSD has extra spare capacity, just like a hard drive: when bad sectors need to be remapped, they are remapped to spare sectors on the drive. An SSD, though, may report 120GB of disk space while having 128GB (or more) of total flash memory. That extra 8GB ensures that even if your drive is completely full, you still have around 8GB of erased memory at all times. If a bad memory block is found, it is simply disabled, and your 128GB of memory might become 127.995GB. Since you are still over the 120GB of available disk space, you won't see this internal remapping or the resulting reduction in available memory pages. If you monitor SMART parameters, some brands, models, and firmware versions do report this information, and you can use it as a gauge of how healthy a drive is.

This is handled by what is often called the Flash Translation Layer (FTL) or Flash Abstraction Layer (FAL). Virtually all SSDs today have this in some form, though the name varies slightly between manufacturers. It works behind the back of the rest of the machine and is handled completely inside the SSD itself. In fact, some firmware bugs that had to be fixed in the field through end-user upgrades involved improper handling of the abstraction layer, resulting in data corruption. Whoops! The FAL does nothing more than translate an LBA address to the actual memory pages/cells that contain that data. SSDs are not linear devices like hard drives, and their data is scattered all over the memory chips. The SSD controller handles all of this internally, and you, your OS, and your hard drive diagnostic tools are none the wiser. By the time information is being exchanged at the SATA/SAS level, there's no way to see this abstraction.
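The 128GB-versus-120GB example can be checked with a little arithmetic. Here's a minimal sketch that assumes raw NAND is counted in binary units (GiB) while the advertised capacity is in decimal units (GB); that's common, but verify it for your drive. The function name `spare_area` is just an illustration.

```python
# Spare-area arithmetic for the 128GB-raw / 120GB-user example.
# Assumption: raw NAND is counted in binary units (GiB) and the advertised
# capacity in decimal units (GB), which is common but not universal.
GIB = 2**30
GB = 10**9


def spare_area(raw_gib: int, user_gb: int) -> tuple[int, float]:
    """Return (spare bytes, spare as a percentage of user capacity)."""
    raw_bytes = raw_gib * GIB
    user_bytes = user_gb * GB
    spare = raw_bytes - user_bytes
    return spare, 100.0 * spare / user_bytes


if __name__ == "__main__":
    spare, pct = spare_area(128, 120)
    print(f"{spare / GB:.1f} GB spare ({pct:.1f}% of the advertised capacity)")
```

Under that assumption this prints `17.4 GB spare (14.5% of the advertised capacity)`. If both figures were decimal, the spare would be the 8GB used in the text, so the real reserve may well be larger than the simple subtraction suggests.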

Your operating system, if it supports TRIM, will tell the drive when files are deleted that the locations previously used by those files are actually free. This allows the disk to keep its own internal list of memory blocks that are actually erasable without causing data loss. The SSD keeps this list in a table, and every so often (usually every 5-30 minutes) it will erase and consolidate pages that are no longer storing data but haven't been erased yet. Depending on your model, brand, and firmware version, erasing can range from very conservative to extremely aggressive. If the SSD is too conservative, performance may suffer. If it is too aggressive, it may cause what is called write amplification: the firmware moves data around the memory cells excessively, so the flash ends up absorbing more writes than the host actually requested.
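Write amplification is usually expressed as a ratio: bytes written to the flash divided by bytes the host asked to write. A minimal sketch follows; the function names are hypothetical. Some drives expose host-write and NAND-write counters through SMART, but the attribute IDs and units vary by vendor, so check your drive's documentation before plugging numbers in.

```python
def write_amplification(nand_bytes: int, host_bytes: int) -> float:
    """Write amplification factor: bytes written to flash per byte the host wrote."""
    if host_bytes <= 0:
        raise ValueError("host_bytes must be positive")
    return nand_bytes / host_bytes


def waf_with_relocation(host_pages: int, relocated_pages: int) -> float:
    """WAF when the firmware also copies still-valid pages while consolidating blocks.

    Every relocated page is an extra flash write the host never asked for.
    """
    return write_amplification(host_pages + relocated_pages, host_pages)


if __name__ == "__main__":
    # Host wrote 1000 pages; consolidation copied 250 still-valid pages.
    print(waf_with_relocation(1000, 250))
```

A factor of 1.0 means every flash write was requested by the host; anything above that is overhead from the drive shuffling data internally.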

Once an SSD has erased those pages, they are no longer mapped to any actual location. Attempting to read from those sectors returns either all zeros or all ones (depending on the manufacturer), since those sectors aren't actually allocated and don't belong to any physical memory locations anymore. Remember that the old data no longer exists; the FAL simply returns filler data. This is why "undelete" tools are typically ineffective on SSDs that use TRIM: the data literally no longer exists after the drive has erased the pages. Unfortunately, if GC functions on this drive and properly erases locations on VMFS partitions, it will mask this whole test, which will be both good and bad. It will be good because it will effectively prove that GC works with VMFS (meaning TRIM doesn't need to function to keep an SSD healthy and operating at peak performance). It will be bad because the whole point of my test was to prove whether or not TRIM functions. I could deliberately change my SATA controller from AHCI to IDE mode, which would disable TRIM since TRIM requires AHCI. But as you will see in a bit, I won't have to do this test.
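To make the mapping idea concrete, here's a toy model of a translation layer. `ToyFTL` is hypothetical and heavily simplified: it erases a trimmed page immediately, whereas a real drive defers that work, and it assumes the drive returns zeros (not ones) for unmapped LBAs. It shows why a trimmed sector reads back as filler: once the LBA has no mapping, there's nothing left to read.

```python
SECTOR = 512
# Assumption: this hypothetical drive returns zeros for unmapped LBAs.
ERASED = b"\x00" * SECTOR


class ToyFTL:
    """A toy flash translation layer mapping logical block addresses to physical pages."""

    def __init__(self, physical_pages: int):
        self.flash = [None] * physical_pages      # page contents; None = erased
        self.free = list(range(physical_pages))   # pre-erased pages ready for writing
        self.map = {}                             # LBA -> physical page

    def write(self, lba: int, data: bytes) -> None:
        # Flash can't be overwritten in place, so take a fresh erased page.
        # Any page previously mapped to this LBA becomes stale; a real drive's
        # garbage collection would reclaim it (and pop() fails when none are left).
        page = self.free.pop(0)
        self.flash[page] = data
        self.map[lba] = page

    def trim(self, lba: int) -> None:
        # TRIM: the host says this LBA no longer holds useful data,
        # so drop the mapping and (here, immediately) erase the page.
        page = self.map.pop(lba, None)
        if page is not None:
            self.flash[page] = None
            self.free.append(page)

    def read(self, lba: int) -> bytes:
        page = self.map.get(lba)
        return ERASED if page is None else self.flash[page]


if __name__ == "__main__":
    ftl = ToyFTL(4)
    ftl.write(7, b"\xab" * SECTOR)
    ftl.trim(7)
    print(ftl.read(7) == ERASED)
```

After `trim(7)`, reading LBA 7 returns the zero-filled sector, which is exactly the pattern the test below looks for on the real drive.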

Requirements for TRIM

So why is TRIM so hard to prove? Well, for starters you need three things for TRIM to work properly:

1. A SATA/SAS controller that supports AHCI and TRIM (with AHCI enabled), and a controller driver that properly supports TRIM. Some controllers don't have any options, so you will have to determine for yourself whether AHCI is actually supported/implemented in your controller. This also often means that TRIM is not supported if your SSD is part of a RAID array other than a mirror.

2. The disk itself must support TRIM in its firmware. Virtually all SSDs available today provide this feature. Older drives may not support TRIM, so you will have to check with your manufacturer to determine whether your drive and firmware version support TRIM.

3. The operating system must support TRIM and it must be enabled.

All three must be present, enabled, and functioning properly.
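The three requirements above form a simple checklist where any single failure is enough to break TRIM. Here's a minimal sketch of that logic; `TrimStack` and `missing_requirements` are hypothetical names, and each boolean is something you have to determine for your own hardware and OS.

```python
from dataclasses import dataclass


@dataclass
class TrimStack:
    controller_passes_trim: bool  # AHCI enabled and the driver passes TRIM through
    drive_supports_trim: bool     # drive firmware supports TRIM
    os_trim_enabled: bool         # OS supports TRIM and has it turned on


def missing_requirements(stack: TrimStack) -> list[str]:
    """List the layers that would stop TRIM from working (empty list = all three present)."""
    missing = []
    if not stack.controller_passes_trim:
        missing.append("controller/driver")
    if not stack.drive_supports_trim:
        missing.append("drive firmware")
    if not stack.os_trim_enabled:
        missing.append("operating system")
    return missing
```

An empty list only means TRIM *can* work; it says nothing about whether it actually does.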

Categories of TRIM support

So now seems like an appropriate time to talk about the difference between TRIM being supported, enabled, and functional.

Supported: I can have all of the things above, but if I've chosen to disable TRIM (for example, by deliberately disabling it in the OS), then TRIM can only be said to be "supported". Clearly, though, the drive will not be TRIMmed appropriately. Since this is the lowest level, you could also say that TRIM is merely supported if you haven't verified that TRIM is actually functioning.

Enabled: If everything required for TRIM is present and enabled, so that I have reason to believe TRIM should be functioning, it is merely "enabled". For most people (such as Windows 7, 8, and 8.1 users) this *should* be the same as the next category. This is roughly equivalent to running "fsutil behavior query DisableDeleteNotify" from the command line. Just because it returns 0 (which means the OS has the TRIM feature enabled) does NOT mean that your drives are actually being TRIMmed. In fact, you can get a response of "0" even on computers that have no SSD at all! Isn't Windows awesome?! This is why the distinction between supported, enabled, and functional matters for those who pay attention to detail. Small details are the difference between a feature working and not working.

Functional: If I'm able to prove with certainty that TRIM is operating properly, then we can say it's functional. For most Windows users who bought a pre-made machine with an SSD, or who added one and verified that the requirements were met, TRIM should be functional. If you didn't verify this, you should go and check. I've already met several people who had no TRIM functioning on their SSD despite swearing up and down that their hardware supported TRIM, etc. etc. I do enjoy laughing at people who simply think they can buy stuff and it'll work. It's not that simple, which is precisely why this article is being written.
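The three categories form a ladder, and you only climb a rung when you have evidence for it. Here's a minimal sketch; `TrimStatus` and `classify` are hypothetical, and the `NOT_SUPPORTED` level is my addition for the case where some part of the stack lacks TRIM entirely.

```python
from enum import Enum


class TrimStatus(Enum):
    NOT_SUPPORTED = "not supported"  # some layer can't do TRIM at all
    SUPPORTED = "supported"          # every layer is capable, but TRIM is off
    ENABLED = "enabled"              # switched on, but not yet proven to work
    FUNCTIONAL = "functional"        # proven by observing erased sectors


def classify(all_parts_capable: bool, enabled: bool, verified_by_result: bool) -> TrimStatus:
    """Return the highest rung of the supported/enabled/functional ladder you can claim."""
    if not all_parts_capable:
        return TrimStatus.NOT_SUPPORTED
    if not enabled:
        return TrimStatus.SUPPORTED
    if not verified_by_result:
        return TrimStatus.ENABLED
    return TrimStatus.FUNCTIONAL
```

A `DisableDeleteNotify = 0` result on its own corresponds to `classify(True, True, False)`, which returns `TrimStatus.ENABLED`. Reaching `FUNCTIONAL` takes observed evidence, which is what the test below is designed to provide.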

So we're trying to get from saying that TRIM is supported in ESXi, to TRIM being enabled, and hopefully, for many ESXi users out there, to proving that TRIM is actually functional. Here's the technical explanation for the "proposed" TRIM feature as it was being drafted for the specifications in 2007...
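TRIM was eventually standardized as the TRIM bit of the ATA DATA SET MANAGEMENT command. As I understand that command, its payload is a series of 512-byte blocks, each holding 64 little-endian 8-byte entries: a 48-bit starting LBA in the low bits and a 16-bit sector count in the high bits, with a count of zero meaning "unused". The sketch below only builds that payload for illustration; it doesn't send anything to a drive, and `trim_payload` is a hypothetical name.

```python
import struct

MAX_LBA = 1 << 48        # starting-LBA field is 48 bits wide
MAX_RANGE = 0xFFFF       # range-length field is 16 bits wide
ENTRIES_PER_BLOCK = 64   # 512-byte block / 8-byte entries


def trim_payload(lba: int, count: int) -> bytes:
    """Encode one contiguous LBA range as DSM/TRIM range entries, padded to 512-byte blocks."""
    if lba < 0 or count < 0 or lba + count > MAX_LBA:
        raise ValueError("range does not fit in 48-bit LBA space")
    entries = []
    while count > 0:
        n = min(count, MAX_RANGE)
        entries.append(lba | (n << 48))  # bits 47:0 = LBA, bits 63:48 = length
        lba += n
        count -= n
    # Unused entries have a length of zero, so zero-padding to a full block is harmless.
    entries.extend([0] * ((-len(entries)) % ENTRIES_PER_BLOCK))
    return struct.pack(f"<{len(entries)}Q", *entries)
```

A range longer than 65,535 sectors is split across several entries, so TRIMming a whole partition can take many payload blocks.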

So how are we going to test this? Simple: we're going to look for the end result. Everything I found via Google showed people looking for proof that TRIM was enabled. Well, why not just look for the expected end result? So I'm going to install ESXi on a test bed. Then I will write random data to the datastore drive until it is completely full, and then delete the random files. If TRIM is functioning properly, then within 15-30 minutes at most after the deletion the drive's firmware should be busy erasing memory pages. It may not erase them all, but I'd bet more than 80% of the drive will be erased within an hour or two. There is no way for the user to know when the erasures actually take place, since this is handled strictly inside the SSD, so I plan to give each drive several hours between deleting the files and actually looking at the sectors to determine whether the pages were erased. Then I'll put the drive under a proverbial microscope. Looking at the sector level of the datastore partition, I should see that the vast majority of it is either all zeros (or all ones for some drives), because erased pages don't actually contain data. On the other hand, if I find tons of random data throughout the partition, then it's a safe bet that neither garbage collection nor TRIM erased the free space.
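I used a hex editor for the inspection below, but the same check can be scripted. Here's a minimal sketch, assuming you have a raw image of the datastore partition (or, with enough privileges, the device node itself) and 512-byte sectors. It reports what fraction of sectors read back as all 0x00 or all 0xFF. `erased_fraction` is a hypothetical name.

```python
def erased_fraction(path: str, sector_size: int = 512, chunk_sectors: int = 2048) -> float:
    """Fraction of sectors in `path` that are entirely 0x00 or entirely 0xFF.

    Reads in chunks of `chunk_sectors` sectors to avoid one tiny read per sector.
    A trailing partial sector is counted as not erased.
    """
    zeros = bytes(sector_size)
    ones = b"\xff" * sector_size
    total = erased = 0
    with open(path, "rb") as f:
        while chunk := f.read(sector_size * chunk_sectors):
            for off in range(0, len(chunk), sector_size):
                sector = chunk[off:off + sector_size]
                total += 1
                if sector == zeros or sector == ones:
                    erased += 1
    return erased / total if total else 0.0
```

If TRIM or GC worked, the result for a filled-then-emptied datastore should be close to 1.0; a value near 0 means the random data is still sitting there.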

With that said, let's get to work.

Testing Parameters

Here's the hardware I'll be using for the test. It's my HTPC and is being taken out of service for a day or two for this test:

-Gigabyte H55M-USB3 with latest BIOS (F11)

-8GB of RAM

-i7-870K at stock speeds, 2.93GHz

-Connected to Intel SATA controller in AHCI mode

-Intel 330 120GB drive with firmware version 300i

-Running various versions of ESXi to determine if older or newer builds will affect the outcome.

First, I'm going to install ESXi 5.1 build 799733 (released Sept 2012), then update to and test 5.1 build 1483097 (the current build of 5.1 as of January 2014), and lastly upgrade to 5.5 build 1474528 (the current build as of January 2014) to see where things are and where they've come from. By examining the end result that TRIM/GC should produce, we can determine conclusively whether TRIM/GC is working.

Test with 5.1 Build 799733

For each test I did the same thing. I ran dd if=/dev/random of=random and let that run for a few minutes. Then I pressed CTRL+C and used cat to multiply that file, since /dev/random only gives me a few hundred MB per 5 minutes.

cat random random random random random random random > random1

-then-

cat random1 random1 random1 random1 random1 random1 > random2

It's not the most elegant, but it fills the drive in much less time than waiting for /dev/random or /dev/urandom.
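If a Python 3 interpreter is available wherever the datastore is mounted (I haven't checked whether that's the case on the ESXi shell itself), the fill step can also be done directly with non-repeating random data. Here's a minimal sketch; `fill_with_random` is a hypothetical name, and the optional `max_bytes` cap exists so you can try it safely before pointing it at a real datastore.

```python
import errno
import os
from typing import Optional

CHUNK = 1 << 20  # write 1 MiB of random data per call


def fill_with_random(path: str, max_bytes: Optional[int] = None) -> int:
    """Write random bytes to `path` until the filesystem is full or `max_bytes` is reached.

    Returns the number of bytes written.
    """
    written = 0
    # buffering=0 gives an unbuffered raw file, so a full disk surfaces as an
    # exception from write() rather than later, when a buffer is flushed on close.
    with open(path, "wb", buffering=0) as f:
        while max_bytes is None or written < max_bytes:
            size = CHUNK if max_bytes is None else min(CHUNK, max_bytes - written)
            try:
                written += f.write(os.urandom(size))
            except OSError as e:
                if e.errno == errno.ENOSPC:
                    break  # disk full: that's the goal
                raise
    return written
```

`os.urandom` is fast enough that there's no need for the cat trick, and every block is unique rather than a repeat of the same few hundred MB.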

After the files were created, I deleted them. Now, if TRIM is doing its job, the SSD should be informed of the deletion I just made, so within a short time the drive should begin erasing those memory pages to make them ready for their next write.

Lucky for you, reading this article is like a time machine. Because I took a nap, the system sat powered on for almost a full 24 hours, completely idle the whole time. I do get busy, and you can never wait "too long" for TRIM or GC. Surely TRIM or garbage collection, if functioning properly as hoped, will have produced some spectacular results. Time to do some forensic analysis on the drive!

For analysis I'll be using a program called WinHex, which I found via a quick Google search. So what was the verdict with 5.1 build 799733?

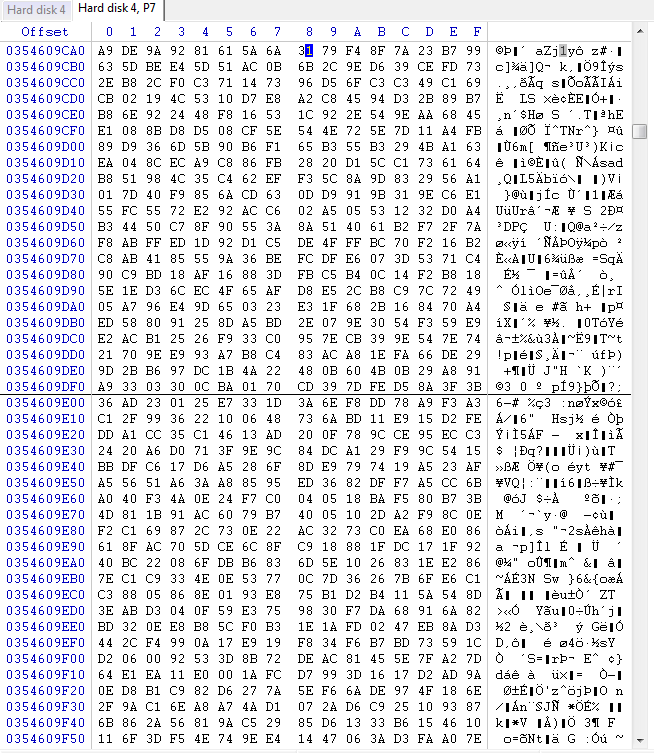

Random data throughout the partition. The 106GB partition does contain a small amount of zeros at the very beginning and very end, but they account for less than 1% of the drive's total space. I presume this is reserved slack space for the file system that isn't available to the end user. So this pretty much confirms that, with my hardware and this ESXi version, the drive is neither garbage-collecting nor being TRIMmed!
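To confirm that the erased sectors really sit only at the start and end of the partition, you can list contiguous runs of erased sectors instead of just counting them. Here's a minimal sketch under the same assumptions as any sector scan (a raw partition image, 512-byte sectors, erased meaning all 0x00 or all 0xFF); `erased_runs` is a hypothetical name.

```python
def erased_runs(path: str, sector_size: int = 512):
    """Yield (first_sector, length) for each contiguous run of all-0x00 or all-0xFF sectors."""
    zeros = bytes(sector_size)
    ones = b"\xff" * sector_size
    start = None
    index = 0
    with open(path, "rb") as f:
        while sector := f.read(sector_size):
            is_erased = sector == zeros or sector == ones
            if is_erased and start is None:
                start = index                 # a new erased run begins
            elif not is_erased and start is not None:
                yield start, index - start    # the run just ended
                start = None
            index += 1
    if start is not None:
        yield start, index - start            # run extends to the end of the image
```

On a partition that was never TRIMmed, you'd expect just a run near sector 0 and one near the end, with random data everywhere in between.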

So let's upgrade to 5.1's latest build and see what happens...

Test with 5.1 Build 1483097

The latest image available is ESXi-5.1.0-2014-0102001-standard. This will upgrade the test bed to 5.1.0 build 1483097, the latest as of February 2nd, 2014. After installation I'll reboot to complete the upgrade, exit maintenance mode, then fill the drive again and delete the files!

Considering that 5.1 build 799733 gave disappointing results I think it's a pretty safe bet that ESXi just doesn't support TRIM at all. But, we shall see how the remainder of the tests go.

Here's the results with 5.1 build 1483097

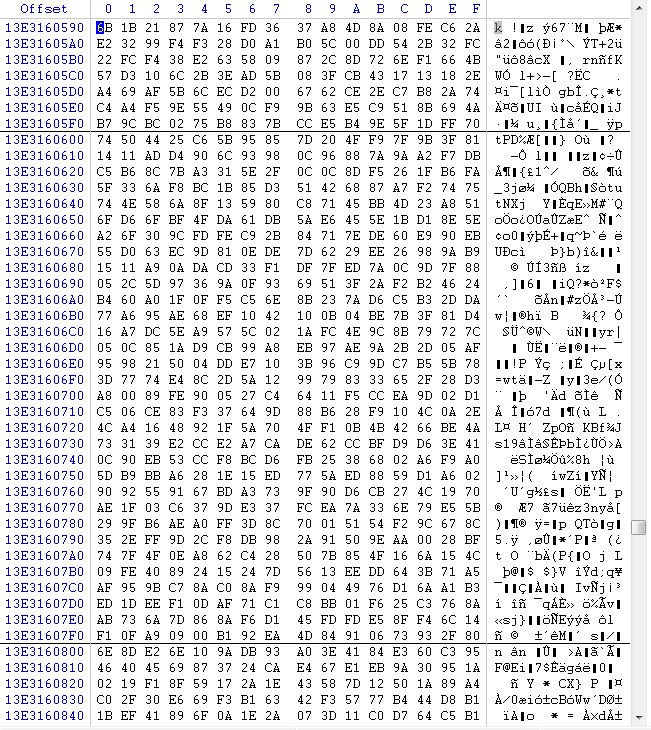

So I've checked out the drive. Guess what the results are? Nope, the drive was not TRIMmed/GCed. Again, the latest version of ESXi 5.1 does NOT support TRIM.

Let's try 5.5's latest available build.

Test with 5.5 Build 1474528

For this, I'll be installing the ESXi-5.5.0-20131204001-standard image.

So now it's time to go to the latest version, 5.5 build 1474528, which means another fill of the drive followed by a delete.

So the results are in. Any guesses anyone?

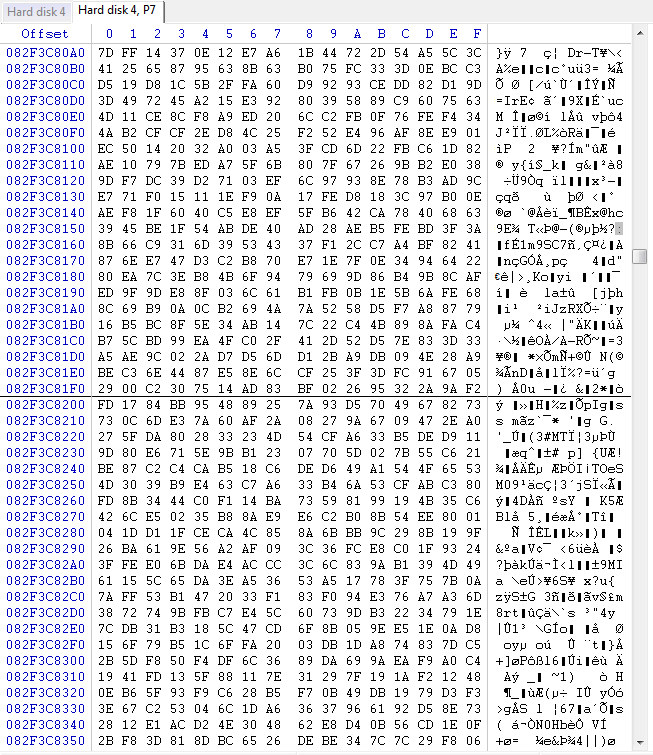

Sadly, I have to report that the drive was not TRIMmed/GCed with 5.5 either.

CONCLUSION

Unfortunately, unless there is something specific to my configuration that makes TRIM non-functional that I'm not aware of, it appears that ESXi does NOT support TRIM. There is no documentation from VMware to go on, so it is impossible to even confirm whether my hardware is what's preventing TRIM. Clearly, if you are using ESXi you cannot rely on the TRIM feature. If you are using an Intel 330 SSD with firmware version 300i, it is also a safe bet that garbage collection doesn't work. Of course, it is not possible for me to test every single SSD out there, but in my opinion the expectation that GC would work with VMFS-formatted partitions was a stretch from the beginning. To be completely honest, I don't expect that any model of any brand currently on the market supports garbage collection on VMFS partitions. VMFS is very much a niche file system. Of course, if Intel (or any other manufacturer) wants to send me an SSD to test, I'd be more than happy to update this page. Personally, I consider Intel to be one of the market leaders in SSDs, so the fact that their drive doesn't garbage-collect VMFS suggests to me that garbage collection for VMFS just isn't out there.

There appears to be no TRIM support in any publicly available ESXi build as of Feb 2, 2014. GC may be supported, but it would have to be tested case by case for the applicable drive model and firmware version. If you are interested in extending the life of your SSD and it is an Intel drive (other drives may support this too; you are on your own to make that determination), then read the next section.

Extra information for Intel SSDs only(but may apply to other brands):

So now, let's talk about some other peculiarities with Intel SSDs.

Check out this presentation from 2012... http://forums.freenas.org/attachmen...1/?temp_hash=51616631b1f27814a51c8e76c83146ab

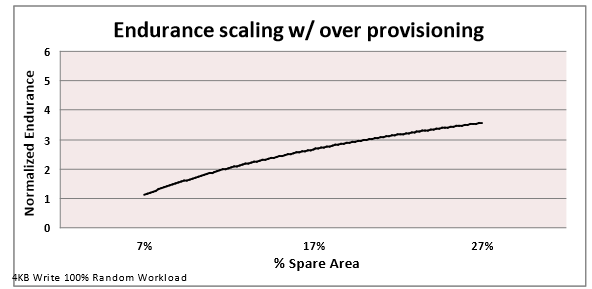

In particular, on page 10 is this little graph:

What is this? Over-provisioning? Endurance increases more than threefold at 27%? Yes!

Over-provisioning is the act of taking available disk space and turning it into an enlarged spare area on the drive. This shrinks the drive below its designed capacity, but lifespan should increase as a bonus.

If you read through that whole link, there are two ways to over-provision a drive. You can use the ATA8-ACS feature called SET MAX ADDRESS to undersize your disk, or you can choose not to partition the entire disk. Intel SSDs will use unpartitioned disk space as reserve/spare space and use it to provide a longer life. How much you gain depends on many factors, but if you can afford to give up 27% of your disk space, your expected lifespan can increase by about 3.5x. It's your choice how much you want to over-provision a drive. You're the administrator of your server, so feel free to set it to whatever makes you happy! If you can afford to give up 27%, I say go for it!

For more information on over-provisioning via the ATA8-ACS feature, read these two PDFs: One Two
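For the "don't partition everything" approach, the only arithmetic is how big to make the partition. Here's a minimal sketch that assumes the percentage refers to the drive's user-addressable capacity (I'm not certain that's how Intel's graph defines it) and that the unpartitioned area is clean, for example on a new or secure-erased drive; previously written space that was never TRIMmed won't count as spare. `partition_sectors_for_op` is a hypothetical name.

```python
def partition_sectors_for_op(total_sectors: int, op_percent: int, align: int = 2048) -> int:
    """Sectors to partition so roughly op_percent of the drive stays unpartitioned.

    The result is rounded down to a multiple of `align` sectors
    (2048 x 512 bytes = 1 MiB, a common partition alignment).
    """
    if not 0 <= op_percent < 100:
        raise ValueError("op_percent must be in [0, 100)")
    usable = total_sectors * (100 - op_percent) // 100
    return usable // align * align


if __name__ == "__main__":
    # 234,441,648 is the usual sector count for a 120GB drive;
    # use the count your own drive actually reports.
    print(partition_sectors_for_op(234_441_648, 27))
```

The same sector count could in principle be used as the new maximum address with the SET MAX ADDRESS approach (on Linux, `hdparm` exposes this through its `-N` option), but read the documentation carefully first, since that setting can be made permanent.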

For those of you with Intel SSDs who use Windows, I highly recommend installing the Intel SSD Toolbox. It automatically tells you when updates are available for the Toolbox, and it gives you an easy way to install firmware updates: if a firmware update is available, you just click the update button and reboot the computer. Done! Of particular interest is a very useful feature called the "Intel SSD Optimizer". While information on everything it does is very incomplete (hooray for company secrets), I have noticed that it does some cool TRIMming stuff. When you run the tool, it seems to create a series of 1GB files in the root of your partition (for example, C:\) and allocates them so the disk space is "used" (without actually writing data to the files), and then deletes those files. This triggers your operating system's TRIM feature to effectively TRIM your entire disk's free space. Naturally, it doesn't completely fill the drive; it seems to leave about 1GB free. According to Intel's website, if you want to use an Intel SSD that supports TRIM on an OS like XP or Vista (neither of which supports TRIM), the Intel SSD Optimizer (one feature of the Intel SSD Toolbox) will handle the TRIMming for you. Of course, if you use any other brand, you won't be able to use the Intel SSD Toolbox. If you are in this boat and want to use XP or Vista, I recommend you use only an Intel SSD that is compatible with the Intel SSD Toolbox, or do your own research to determine which SSDs do garbage collection or some other form of TRIM to help keep your drive at peak performance.
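Intel doesn't document the Optimizer's internals, so here's only a hypothetical sketch of the behavior described above, written for Linux: `os.posix_fallocate` reserves blocks without writing file contents, standing in for whatever Intel does on NTFS. Whether deleting the files actually produces TRIM commands depends on the filesystem and its mount options (on Linux, for example, ext4 only discards on delete when mounted with `discard`). `allocate_then_delete` and the `trimfill_*.tmp` file names are my own inventions, and this is an illustration of the idea, not a tool for ESXi.

```python
import os
import shutil
from typing import Optional

GIB = 1 << 30


def allocate_then_delete(directory: str, chunk: int = GIB, reserve: int = GIB,
                         max_files: Optional[int] = None) -> int:
    """Hypothetical Optimizer-style pass: allocate files without writing data, then delete them.

    Stops when creating another `chunk`-sized file would leave less than `reserve`
    bytes free (or after `max_files` files). Returns how many files were allocated.
    """
    paths = []
    try:
        while shutil.disk_usage(directory).free - chunk >= reserve:
            if max_files is not None and len(paths) >= max_files:
                break
            path = os.path.join(directory, f"trimfill_{len(paths):05d}.tmp")
            paths.append(path)  # track before allocating so cleanup always finds it
            with open(path, "wb") as f:
                # Unix only: reserves blocks without writing the file's contents.
                os.posix_fallocate(f.fileno(), 0, chunk)
        return len(paths)
    finally:
        # Deleting frees the blocks; whether the OS then TRIMs them
        # depends on the filesystem and how it is mounted.
        for path in paths:
            if os.path.exists(path):
                os.remove(path)
```

Leaving `reserve` bytes free mirrors the roughly 1GB the Optimizer appears to leave, so the system never actually runs out of space during the pass.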

If you've read this far, congrats for not falling asleep. I spent almost 15 hours on this document, including research, actual testing, and writing! Hopefully it was informative and useful for you.

*** - If you are an Intel employee with access to people who have real SSD knowledge, or you work on SSD technology yourself (even if not at Intel), I'd love to have a Q&A session with you. Feel free to PM me; I have burning questions I've wanted answered for years and have never been able to get answered.

*NOTE*: The remainder of this reading is rather technical. Being that I have an engineering background I'll be providing far more detail that may be needed. This is to allow others to reproduce my work if they desire, dispute my hardware being acceptable for the intended testing, and/or dispute my findings. If you don't want to read about the testing I performed itself, feel free to skip to the CONCLUSION section of this post. If you do read through this whole page, I highly recommend you read the links I provide.

*NOTE*: Much of this data applies to all SSDs regardless of brand or model. Others may apply for just Intel SSDs, or maybe even only specific Intel SSDs with specific firmware. The reader is encouraged to verify any information I provide to ensure it applies for your SSD.

*DISCLAIMER*: If you've read my stuff on the forum, you probably know I'm an advocate of SSDs, and in particular Intel SSDs. I only use Intel SSDs in my HTPC, desktop, laptop, etc. Only my FreeNAS server has spinning rust drives. The rest are all solid state. I've never had one fail and they've always performed to my expectations. Obviously drives do fail, but I consider Intel to be the 'cream of the crop' with regards to reliability. This is a personal choice based on my reading. You are encouraged to form your own opinion based on all available information.

Well, the question seems easy, but finding the answer is hard. So some quick Googling turned up a few webpages of people that attempted to solve this problem. The first link I found that looked promising was http://www.v-front.de/2013/10/faq-using-ssds-with-esxi.html. Mr. Andreas Peetz did a fairly comprehensive explanation for TRIM. But, unfortunately he found no answers. There he discussed some details and came to the conclusion of "Who knows?" If you read through it(and you should if you haven't yet) you'll see him mention background Garbage Collection. Garbage Collection is when a drive's firmware examines the file system for empty blocks and basically does it's own internal TRIMming independent of the system itself. The firmware must support the file system though. Being that ESXi is a product that isn't very common for SSD use, I'm not going to hedge any bets on GC functioning. Their VMFS file system is unique to their one product, and based on past experience with GC from years gone by, the only file systems that supported GC that I worked with were FAT32 and NTFS. Sorry Linux/FreeBSD/anyone else. You're very likely to be out in the cold. Now obviously there's the very distinct possibility that the drive is using GC. Which, if it is doing GC, then all of this will be for naught as we'll get the intended expectations regardless of if GC or TRIM is being used. Even if GC or TRIM doesn't work for this scenario there's some things we can do to help prolong a drive's life anyway. I'll get to that later in the conclusion section. I did some googling to try to find available information on supported file systems for Sandforce controllers that claim to do garbage collection is impossible. Companies seem to be hellbent on telling you about the feature, but then provide no actual tangible information so you can determine if their product is right for you. 
Not the first time an industry will hold back on the information that an informed user/owner might need to know, and certainly won't be the last. There's plenty of product propaganda for potential buyers, but almost no information for those that are detail oriented. Just another reason why I have never been particularly fond of Sandforce. Anytime I've wanted details on how their drives work internally its fraught with a disappointing level of detail. Intel is very secretive too, but they are more open than Sandforce has ever been.

So how does TRIM work?

At the deepest levels TRIM simply informs an SSD that it can erase particular memory pages that are no longer storing your data. Normally when a file is deleted from your partition the file system simply marks those sectors as available again. For platter based drives this is normal and has been the case for decades. This is why you can often run tools like R-studio, Ontrack Data Recovery software, or other "undelete" tools to help find those old files.

However SSDs have a problem that is unique to MLC memory. An erase cycle takes significant time to perform. Before any write can be made to your SSD the particular location must be erased. The first generation of SSDs had horrible random write performance because any write must be preceded with an erase command, and the erase command carried with it a significant performance penalty. So the solution is to erase pages that aren't storing data so you always have fresh erased memory cells ready to store your data. The erase command itself is internal to the SSD and is performed to make the cells ready to write the new data. The penalty for "erasing" a page of memory on your SSD is quite high. Obviously this means that the SSD would be a horrible choice if performance mattered at all without TRIM. But people gladly spent huge amounts of money to buy them(I even bought a deeply discounted 32GB drive for $200 years ago.. the OCZ Solid which is in my pfsense box since its useless for anything else). Not all of the empty space will be in an 'erased' state, but as long as you never run out of erased memory everything is fine and your SSD will perform acceptably. Another trick that SSDs use to ensure there is always erased space is the spare capacity. Every SSD has extra spare capacity just like a hard drive. When bad sectors need to be remapped, they are remapped to the spare sectors on the drive. For SSDs though, they may report 120GB of disk space, but they may have 128GB of total flash memory(or more). That extra 8GB ensure that even if your drive is completely full you still have around 8GB of "erased" memory at all times. In the event that a bad memory block is found it is simply disabled, and your 128GB of memory might be 127.995GB. Since you are still over the 120GB of available disk space you won't see this internal remapping or the subsequent reduction in available memory pages. 
If you monitor SMART parameters some brands, models, and firmware version do report this information and you can use it as a gauge to determine how healthy a drive is. This is handled by what is often called the Flash Memory Abstraction Layer(FAL). Virtually all SSDs today have this in some form, but the name is sometimes slightly different between manufacturers. This happens behind the back of the rest of the machine and is handled completely internal to the SSD itself. In fact, some firmware problems that have had to be fixed in the field by end-user upgrades have been with improper handling of the abstraction layer resulting in data corruption. Whoops! The FAL does nothing more but translate the LBA address to the actual memory pages/cells that contain that data. SSDs are not linear devices like hard drives, and their data is scattered all over the memory chips. The SSD controller handles all of this internally and you, your OS, and your hard drive diagnostic tools are non the wiser. By the time information is being exchanged at the SATA/SAS level there's no way to know about this abstraction.

Your operating system, if it supports TRIM, will tell the drive when files are deleted that the particular locations that the deleted file previously used are actually free. This allows the disk to keep its own internal list of memory blocks that are actually "erasable" without causing data loss. The SSD will keep this list in a table and every so often(usually 5-30 minutes) it will erase and consolidate pages that no longer storing data but haven't been erased. Depending on your model, brand, and firmware version the frequency at which pages are erased can range from very conservative to extremely aggressive. If the SSD is too conservative it may impact performance. If it is too aggressive it may cause what is called write amplification. This results when the firmware is too aggressive with erasing memory blocks and causes excessive moving of data around the memory cells.

Once a SSD has erased those pages they are no longer mapped to any actual location. The process of attempting to read from those sectors to show as either all zeros or all ones(depends on the manufacturer) since those sectors aren't actually allocated and don't belong to any actual memory locations anymore. Remember that the old data no longer exists, and the FAL will report false data. This is why using tools that "undelete" files on SSDs is typically ineffective for drives using TRIM. The data literally no longer exists after the drive has erased the pages. Unfortunately, if GC functions on this drive and properly erases locations on VMFS partitions it will mask this whole test, which will be both good and bad. It will be good because it will effectively prove that GC does work with VMFS(effectively meaning TRIM doesn't need to function to keep an SSD healthy and operating at peak performance). It will also be bad because the whole point of my test was to prove that TRIM functions(or doesn't function). I could deliberately change from AHCI to IDE mode for my SATA controller, which will disable TRIM since TRIM requires AHCI. But as you will see in a bit I won't have to do this test.

Requirements for TRIM

So why is TRIM such an enigma to prove? Well, for starters you need 3 things for TRIM to work properly:

1. A SATA/SAS controller that supports AHCI and TRIM (with AHCI enabled), and a controller driver that properly supports TRIM. Some controllers don't expose any options, so you will have to determine for yourself whether AHCI is actually supported/implemented in your controller. This also often means that if you are running your SSD in any RAID configuration other than a mirror, TRIM is not supported.

2. The disk itself must support TRIM in its firmware. Virtually all SSDs available today provide this feature in their firmware. Older drives may not support TRIM, so check with your manufacturer to determine whether your drive and firmware version support TRIM.

3. The operating system must support TRIM and it must be enabled.

All three must be present, enabled, and functioning properly.
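Requirement 2 is the easiest one to check directly, at least if you can boot the machine from a Linux live environment (an assumption here; ESXi itself does not ship hdparm). `hdparm -I` prints the drive's IDENTIFY data, which includes a "TRIM supported" line when the firmware advertises the feature, and a "Deterministic read ZEROs after TRIM" line on drives that promise to return zeros for trimmed sectors. The function below is a hypothetical helper that only parses that text; a "yes" covers the drive, not the controller driver or the OS.

```shell
#!/bin/sh
# Hypothetical helper: summarize what a drive advertises in its IDENTIFY data.
# Reads the text printed by `hdparm -I /dev/sdX` (Linux) on standard input.
# "trim=yes" covers requirement 2 only; it says nothing about the controller,
# its driver, or the operating system.
drive_trim_report() {
    text=$(cat)
    if printf '%s\n' "$text" | grep -q 'TRIM supported'; then
        echo "trim=yes"
    else
        echo "trim=no"
    fi
    # Drives that guarantee zeros after TRIM are the easiest to check with a
    # sector-level scan; others may return ones or unspecified data.
    if printf '%s\n' "$text" | grep -q 'read ZEROs after TRIM'; then
        echo "zeros_after_trim=yes"
    else
        echo "zeros_after_trim=no"
    fi
}

# Usage, as root on a Linux live system (/dev/sda is only an example):
#   hdparm -I /dev/sda | drive_trim_report
```

The zeros-after-TRIM flag matters for the kind of test used in this post: on a drive without it, trimmed sectors are not guaranteed to read back as a uniform fill pattern.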

Categories of TRIM support

So now seems like an appropriate time to talk about the difference between TRIM being supported, enabled, and functional.

Supported: I can have all of the things above, but if I've chosen to disable TRIM (for example, by deliberately disabling it in the OS), then TRIM can only be said to be "supported". Clearly, the drive will not be trimming itself. You could also say that TRIM is merely supported if you haven't verified that it actually functions, since this is the lowest level.

Enabled: If everything TRIM requires is in place and enabled, so that I have reason to believe TRIM should be functioning, it is "enabled". For most people (such as Windows 7, 8, and 8.1 users) this *should* be the same as the next category. This is roughly equivalent to running "fsutil behavior query DisableDeleteNotify" from the command line. Just because it returns 0 (which means the OS has the TRIM feature enabled) does NOT mean that your drives are actually being TRIMmed. In fact, you can get a response of "0" even on computers that do not have an SSD at all! Isn't Windows awesome?! This is why the distinction between supported, enabled, and functional matters to those with an eye for detail. Small details are the difference between a feature working and not working.

Functional: If I'm able to prove with certainty that TRIM is operating properly, then we can say it's functional. For most Windows users who bought a pre-built machine with an SSD, or who added one and verified that the requirements were met, TRIM should be functional. If you didn't verify, you should go and check these things. I've already met several people who had no functioning TRIM on their SSD despite swearing up and down that their hardware supported TRIM, etc etc etc. I do enjoy laughing at people who think they can simply buy stuff and it'll work. It's not that simple, which is precisely why this article is being written.

So we're trying to get from saying that TRIM is supported in ESXi, to TRIM is enabled, and hopefully, for many ESXi users out there, to proving that TRIM is actually functional. Here's the technical explanation of the "proposed" TRIM feature as it was being drafted for the specification in 2007...

File deleting happens in a file system all time, but the information related to the deleted file only kept in OS, not to device. As a result, a device treats both valid data and invalid data(deleted file) in its storage media the same way, all necessary operations keeping data alive are applied. For example, background defect management and error recovery process are used for HDD. Merge, wear leveling and erase are applied to SSD. OS’s process, such as format, Defragger, Recycle bin, temp file deleting and Paging can produce a considerable amount of deleted file. By telling device the deleted file information as invalid data, the device can reduce its internal operation on all invalid data as self-optimization.

So how are we going to test this? Simple. We're going to look for the end result. Everything I found via Google showed people looking for proof that TRIM was enabled. Well, why not just look for the expected end result? So I'm going to install ESXi on a testbed, write random data to the datastore drive until it is completely full, and then delete the random files. If TRIM is functioning properly, then within 15-30 minutes at most after the deletion the drive's firmware should be busy erasing memory pages. It may not erase them all, but I'd bet more than 80% of the drive will be erased within an hour or two. There is no way for the user to know when the erasures actually take place, since this is handled strictly inside the SSD. So I plan to give each drive several hours between deleting the files and actually examining the sectors. Then I'll put the drive under a proverbial microscope. If I look at the sector level of the datastore partition, the vast majority of it should be either all zeros (or all ones for some drives), because erased pages do not actually contain data. On the other hand, if I find tons of random data throughout the partition, then it's a safe bet that neither garbage collection nor TRIM erased the free space.
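The "look at the sectors" step is done by eye in a hex editor in this post, but the same check can be roughed out with standard tools. The function below is a minimal sketch (its name and output format are hypothetical, not from any existing tool) that reads a file, disk image, or raw partition in fixed-size blocks and counts how many come back entirely as 0x00 or entirely as 0xFF. It assumes the reader picks a block count that stays within the device.

```shell
#!/bin/sh
# Hypothetical helper: count fixed-size blocks that read back entirely as
# 0x00 or entirely as 0xFF (what erased/unmapped pages typically return).
#   $1 = file, disk image, or raw partition to read
#   $2 = block size in bytes
#   $3 = number of blocks to scan (keep this within the device size; blocks
#        past the end read as empty and would be counted as zeros)
scan_blank_blocks() {
    path=$1 bs=$2 count=$3
    [ "$count" -gt 0 ] || return 1
    zeros=0 ones=0 i=0
    while [ "$i" -lt "$count" ]; do
        # Delete every 0x00 byte; if nothing is left, the block was all zeros.
        left=$(dd if="$path" bs="$bs" skip="$i" count=1 2>/dev/null | tr -d '\000' | wc -c | tr -d ' ')
        if [ "$left" -eq 0 ]; then
            zeros=$((zeros + 1))
        else
            # Same test for 0xFF filler.
            left=$(dd if="$path" bs="$bs" skip="$i" count=1 2>/dev/null | tr -d '\377' | wc -c | tr -d ' ')
            if [ "$left" -eq 0 ]; then
                ones=$((ones + 1))
            fi
        fi
        i=$((i + 1))
    done
    echo "blocks=$count zeros=$zeros ones=$ones blank_pct=$(( (zeros + ones) * 100 / count ))"
}
```

Something like `scan_blank_blocks /path/to/partition 1048576 1024` would sample the first 1 GiB in 1 MiB blocks (the path is a placeholder). A high `blank_pct` after the delete-and-wait period is the result TRIM or GC should produce; a low one matches "random data throughout the partition".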

With that said, let's get to work.

Testing Parameters

Here's the hardware I'll be using for the test. It's my HTPC and is being taken out of service for a day or two for this test:

-Gigabyte H55M-USB3 with latest BIOS (F11)

-8GB of RAM

-i7-870K at stock speeds, 2.93GHz

-Connected to Intel SATA controller in AHCI mode

-Intel 330 120GB drive with firmware version 300i

-Running various versions of ESXi to determine if older or newer builds will affect the outcome.

First, I'm going to install ESXi 5.1 build 799733 (released Sept 2012), then update to and test 5.1 build 1483097 (the current build of 5.1 as of January 2014), and lastly upgrade to 5.5 build 1474528 (the current build as of January 2014) to see where things are and where they've come from. By examining the end result that TRIM/GC should produce, we can determine conclusively whether TRIM/GC is working.

Test with 5.1 Build 799733

For each test I did the same thing. I ran dd if=/dev/random of=random and let it run for a few minutes, then hit CTRL+C and used cat to multiply that file, since /dev/random only gave me a few hundred MB per 5 minutes:

cat random random random random random random random > random1

-then-

cat random1 random1 random1 random1 random1 random1 > random2

It's not the most elegant, but it fills the drive in much less time than waiting for /dev/random or /dev/urandom.
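The same fill-then-delete idea can be wrapped in one function for anyone repeating the test. This is a sketch under assumptions: the function name and the `MAX_COPIES` safety cap are hypothetical, it uses /dev/urandom (assumed to be available and non-blocking) for a small seed file, and, like the cat trick above, it appends copies of that seed until a write fails because the datastore is full. A controller that deduplicates data could treat repeated blocks differently from unique ones; that possibility is untested here.

```shell
#!/bin/sh
# Hypothetical helper for the fill-then-delete procedure.
#   $1 = directory on the datastore under test
#   $2 = size of the random seed file in KiB
#   $3 = safety cap on how many times the seed is appended
# Appends the seed until a write fails (disk full) or the cap is reached,
# then deletes everything it created so TRIM/GC (if any) has work to do.
fill_and_delete() {
    dir=$1 seed_kb=$2 max_copies=$3
    seed="$dir/seed.bin" fill="$dir/fill.bin"
    dd if=/dev/urandom of="$seed" bs=1024 count="$seed_kb" 2>/dev/null || return 1
    : > "$fill"
    n=0
    while [ "$n" -lt "$max_copies" ] && cat "$seed" >> "$fill" 2>/dev/null; do
        n=$((n + 1))
    done
    size=$(wc -c < "$fill" | tr -d ' ')
    rm -f "$seed" "$fill"
    sync
    echo "appended=$n bytes=$size"
}
```

For a real run the cap would be set high enough that the disk fills first; `bytes` then shows roughly how much was written before the delete.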

After the files were created I deleted them. Now, if TRIM is doing its job, the SSD should be informed of the deletions I just made, so within a short time the drive should begin erasing the memory pages to make them ready for their next write.

Lucky for you, reading this article is like a time machine. Because I took a nap, the system sat powered on for almost a full 24 hours, completely idle the whole time. I do get busy, and you can never wait "too long" for TRIM or GC. Surely TRIM or garbage collection, if functioning properly and as hoped, will have produced some spectacular results. Time to do some forensic analysis on the drive!

For analysis I'll be using a program called WinHex, which I found via a quick Google search. So what was the verdict with 5.1 build 799733?

Random data throughout the partition. The 106GB partition does contain a small amount of zeros at the very beginning and very end, but they account for less than 1% of the drive's total space. I presume this is reserved slack space for the file system that isn't available to the end user. So this pretty much confirms that, with my hardware and this ESXi version, the drive does NOT perform garbage collection, nor is it TRIMmed!

So let's upgrade to 5.1's latest build and see what happens...

Test with 5.1 Build 1483097

The latest image available is ESXi-5.1.0-20140102001-standard. This will upgrade the test bed to 5.1.0 build 1483097, the latest as of February 2nd, 2014. After installation I'll reboot to complete the upgrade, exit maintenance mode, then fill the drive again and delete the files!
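This post doesn't say how each upgrade was applied, so the following is only one possible way, assuming the offline bundle has been copied to a datastore and you are in the ESXi shell. `vim-cmd hostsvc/maintenance_mode_enter` and `esxcli software profile update -d <bundle> -p <profile>` are standard ESXi shell commands; the `run` wrapper, the `DRY_RUN` switch, and the function name are hypothetical, and by default the sketch only prints the commands.

```shell
#!/bin/sh
# Hypothetical dry-run wrapper: print commands unless DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# $1 = path to the offline bundle .zip on a datastore (placeholder)
# $2 = image profile name contained in that bundle
upgrade_host() {
    depot=$1 profile=$2
    run vim-cmd hostsvc/maintenance_mode_enter
    run esxcli software profile update -d "$depot" -p "$profile"
    # The new build only takes effect after a reboot. Afterwards, leave
    # maintenance mode (vim-cmd hostsvc/maintenance_mode_exit) and confirm
    # the build with: esxcli system version get
    run reboot
}
```

For example, `DRY_RUN=0 upgrade_host /vmfs/volumes/datastore1/<bundle>.zip ESXi-5.5.0-20131204001-standard` would apply the 5.5 image used later in this test; the bundle path is a placeholder.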

Considering that 5.1 build 799733 gave disappointing results I think it's a pretty safe bet that ESXi just doesn't support TRIM at all. But, we shall see how the remainder of the tests go.

Here are the results with 5.1 build 1483097:

So I've checked out the drive. Guess what the results are? Nope, the drive was not TRIMmed/GCed. Again, the latest version of ESXi 5.1 does NOT support TRIM.

Let's try 5.5's latest available build.

Test with 5.5 Build 1474528

For this, I'll be installing the ESXi-5.5.0-20131204001-standard image.

So now it's time to go to the latest version, 5.5 build 1474528. So another fill of the drive is necessary followed by a delete.

So the results are in. Any guesses anyone?

Sadly, I have to report that the drive was not TRIMmed/GCed with 5.5 either.

CONCLUSION

Unfortunately, unless there is something specific to my configuration that makes TRIM non-functional that I'm not aware of, it appears that ESXi does NOT support TRIM. There is no documentation from VMware to go on, so it isn't even possible to confirm whether my hardware is the reason TRIM doesn't work. Clearly, if you are using ESXi, you cannot rely on the TRIM feature. If you are using an Intel 330 SSD with firmware version 300i, it is also a safe bet that garbage collection doesn't work. Of course, I can't test every SSD out there, but in my opinion the expectation that GC would work with VMFS-formatted partitions was a stretch from the beginning. To be completely honest, I don't expect garbage collection to work on VMFS partitions with any model of any brand currently on the market. VMFS is very much a niche file system. Of course, if Intel (or any other manufacturer) wants to send me an SSD to test, I'd be more than happy to update this page. Personally, I consider Intel to be one of the market leaders in SSDs, so the fact that their drive doesn't appear to garbage-collect VMFS suggests to me that garbage collection just isn't there.

There appears to be no TRIM support in ESXi on any build publicly available as of Feb 2, 2014. GC may be supported, but would have to be tested case by case for the applicable drive model and firmware version. But if you are interested in extending the life of your SSD and it is an Intel (other drives may support this; you are on your own to make that determination), read the next section.

Extra information for Intel SSDs only(but may apply to other brands):

So now, let's talk about some other peculiarities with Intel SSDs.

Check out this presentation from 2012... http://forums.freenas.org/attachmen...1/?temp_hash=51616631b1f27814a51c8e76c83146ab

In particular, on page 10 is this little graph:

What is this? Over provisioning? Endurance will increase by over 3 fold at 27%? Yes!

Over provisioning is the act of taking actual available disk space and converting it to act as an enlarged spare area on the drive. This will shrink the drive down from its designed capacity, but lifespan should increase as a bonus.

If you read through that whole link, you'll see there are two ways to "over provision" a drive. You can use the ATA8-ACS feature called SET MAX ADDRESS to undersize your disk, or you can choose not to partition the entire disk. Intel SSDs will use unpartitioned disk space as reserve/spare space and will use that space to provide a longer life. How much you gain depends on many factors, but if you can afford to give up 27% of your disk space, your expected lifespan can increase by about 3.5x. It's your choice how much you want to over provision a drive. You're the administrator of your server. Feel free to set it to whatever makes you happy! If you can afford to give up 27%, I say go for it!
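For the "don't partition the whole disk" method, the only math is how big to make the partition. The sketch below assumes the percentage is taken of the drive's usable capacity in bytes (Intel's presentation may define the ratio differently, so check it) and uses 512-byte sectors; the function name is hypothetical. One caveat that is not from the source: the unpartitioned area should be space the drive considers empty (new, or secure-erased), since previously written space that is never TRIMmed still looks like data to the drive. That matters on ESXi, where nothing will TRIM it.

```shell
#!/bin/sh
# Hypothetical helper: size a partition so OP_PCT percent of the drive
# stays unpartitioned as extra spare area.
#   $1 = usable capacity in bytes as reported by the drive
#   $2 = percentage to leave unpartitioned (e.g. 27)
# Prints the partition size in bytes and whole 512-byte sectors.
op_partition_size() {
    total=$1 op_pct=$2
    part=$((total * (100 - op_pct) / 100))
    echo "partition_bytes=$part sectors=$((part / 512)) unpartitioned_bytes=$((total - part))"
}
```

With a nominal 120GB (120,000,000,000-byte) drive and 27%, this gives a partition of 87,600,000,000 bytes (171,093,750 sectors), leaving 32,400,000,000 bytes unpartitioned. Use the exact byte count your drive reports rather than the marketing figure.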

For more information on over provisioning via the ATA8-ACS feature, read these two PDFs: One, Two

For those of you with Intel SSDs who use Windows, I highly recommend installing the Intel SSD Toolbox. It automatically tells you when updates are available for the Toolbox, and it gives you an easy way to install firmware updates: if a firmware update is available, you just click the update button and then reboot the computer. Done! But of particular interest is a very useful feature called the "Intel SSD Optimizer". While information on everything it does is very incomplete (hooray for company secrets), I have noticed that it does some cool TRIMming stuff. When you run the tool, it seems to create a series of 1GB files in the root of your partition (for example, C:\), allocating those files so the disk space is "used" (without actually writing data to them), and then deletes those files. This triggers your operating system's TRIM feature to effectively TRIM all of the free space on your disk. Naturally, it doesn't completely fill the drive, but seems to leave just 1GB free. According to Intel's website, if you want to use an Intel SSD that supports TRIM on an OS like XP or Vista (neither of which supports TRIM), the Intel SSD Optimizer (one feature of the Intel SSD Toolbox) will handle the TRIMming for you. Of course, if you use any other brand you won't be able to use the Intel SSD Toolbox. If you are in this boat and want to use XP or Vista, I recommend you use only an Intel SSD that is compatible with the Intel SSD Toolbox, or do your own research to determine which SSDs do garbage collection or some other form of TRIM to help keep your drive at peak performance.

If you've read this far, congrats on not falling asleep. I spent almost 15 hours on this document between the writing, research, actual testing, etc! Hopefully it was informative and useful for you.

*** - If you are an Intel employee and have access to guys with actual SSD knowledge or are one of the people that work on SSD technology(even if non-Intel), I'd love to have a Q&A session with you. Feel free to PM me as I have burning questions I've wanted answered for years and have never been able to get the answers I wanted.