Setting up tt-rss with the docker-compose TrueCharts app

In the spring of 2023, TrueCharts underwent a large rewrite that required reinstalling apps and appears to have broken some of them. For me, the tt-rss app no longer works. I didn't want to fully escape-hatch to a VM just for an RSS reader, so I turned to the docker-compose TrueCharts app as a stopgap.

Docker-Compose is also broken

While the docker-compose app can be coerced into running, it doesn't work out of the box for me: the app starts, but it never launches the app defined in the compose YAML inside it. Many users seem to follow this tutorial video and get confused, but the video is now out of date and no longer applies.

(Video on www.youtube.com: "For a long time Docker-Compose on TrueNAS SCALE has been pretty troublesome. However, with our new Docker-Compose App, TrueNAS SCALE starts to fully support ...")

Workaround Setup with tt-rss

There are two key changes to get docker-compose working: don't use the UI field for the compose.yaml file, and don't use the ingress fields during setup.

I also found that I had to delete the app entirely and create docker-compose a second time before these steps worked, so don't give up if things don't start working right away!

Set up the compose.yaml file manually

You must already have the 'stable' TrueCharts catalog set up and synced on your TrueNAS instance.

In the TrueNAS UI, navigate to the 'Apps' page and then the 'Available Applications' tab. Click the 'Refresh All' button at the top if you haven't synced the catalog recently. Then search for 'docker' in the search bar at the top and click 'Install' on docker-compose.

The app-setup pane that opens asks for a docker compose file. Leave this field blank.

Under "Networking and Services", enable expert config and create a custom service.

Then add your app's service port.

Under "Storage and Persistence", add additional app storage for the directory that holds your docker compose yaml file.

I also added the following storage for /var/lib/docker, but I don't remember why. You could try leaving it out and see whether things still work.

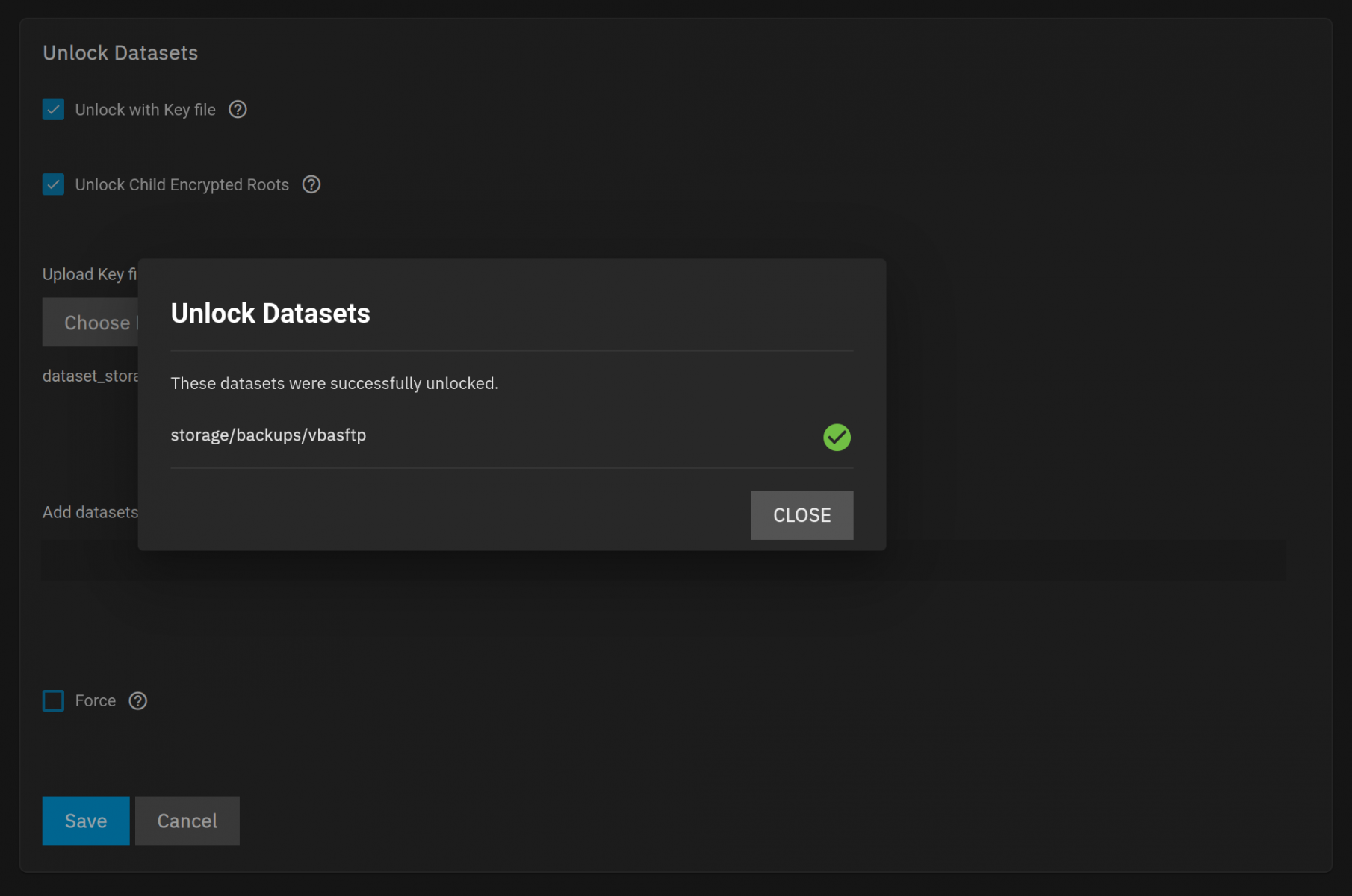
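One plausible reason for the /var/lib/docker storage (my assumption, not something I confirmed): the Docker daemon inside the pod keeps its images, containers, and named volumes under /var/lib/docker by default, so without persistent storage there, anything in a named volume could disappear when the pod is recreated. Below is a minimal sketch for checking from the pod shell whether that path really is a separate mount; `is_mounted` is a hypothetical helper name, and it only works on Linux because it reads /proc/mounts.

```shell
#!/bin/sh
# Hypothetical helper: report whether a path is a mount point by looking it up
# in /proc/mounts (Linux only). Field 2 of each line is the mount point.
is_mounted() {
  path="$1"
  # Without /proc/mounts we cannot tell; use a distinct status code.
  [ -r /proc/mounts ] || return 2
  # awk exits 0 if some line's mount point equals the path, 1 otherwise.
  awk -v p="$path" '$2 == p { found = 1 } END { exit !found }' /proc/mounts
}
```

Usage: `is_mounted /var/lib/docker && echo "persisted"`. Separately, `docker info --format '{{.DockerRootDir}}'` prints where the daemon actually stores its data.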

Finish the dialog and click 'Save'. Wait for the app to finish installing and become 'active', then open the triple-dot context menu and click "Shell".

In the "Choose pod" dialog, leave the default options and click the "Choose" button. From the shell, navigate to your compose.yaml file and run 'docker compose -f /path/to/compose.yaml up -d'. It doesn't seem to work without the -f flag.
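The same step wrapped in a small function, as a sketch assuming a POSIX shell in the pod. `compose_up` is a hypothetical helper name, and /path/to/compose.yaml stands for wherever your storage mount put the file. It always passes -f and fails early with a clear message if the path is wrong, which is the usual symptom of a storage mount landing somewhere unexpected.

```shell
#!/bin/sh
# Hypothetical helper: start the services defined in a compose file, detached.
# The file is always passed explicitly with -f, since it didn't seem to work without it.
compose_up() {
  compose_file="$1"
  # Catch a wrong path (e.g. the storage mount isn't where you expected) up front.
  if [ ! -f "$compose_file" ]; then
    echo "compose file not found: $compose_file" >&2
    return 1
  fi
  docker compose -f "$compose_file" up -d
}
```

Usage: `compose_up /path/to/compose.yaml`.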

Your compose service should now be running, and it will survive restarts of the app!
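To confirm the services came up, and that they are still up after restarting the app, you can query them from the same pod shell. This is a sketch: `compose_status` and `compose_host_port` are hypothetical helper names, while `docker compose ps` and `docker compose port` are standard Compose subcommands. The second prints the host address and port that a service's container port is published on, which should match the service port you entered earlier.

```shell
#!/bin/sh
# Hypothetical helpers for checking a running compose project.

# List the project's containers and their state.
compose_status() {
  compose_file="$1"
  if [ ! -f "$compose_file" ]; then
    echo "compose file not found: $compose_file" >&2
    return 1
  fi
  docker compose -f "$compose_file" ps
}

# Print the host address:port that SERVICE's CONTAINER_PORT is published on.
compose_host_port() {
  compose_file="$1"
  service="$2"
  container_port="$3"
  if [ ! -f "$compose_file" ]; then
    echo "compose file not found: $compose_file" >&2
    return 1
  fi
  docker compose -f "$compose_file" port "$service" "$container_port"
}
```

Usage: `compose_host_port /path/to/compose.yaml web 80`, where `web` and `80` are placeholders for your tt-rss service name and its container port.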

Use external-service to access app

This next step requires that you've already set up the ingress/Traefik nonsense.

I couldn't configure the docker-compose settings to let me access the app directly. What did work was setting up an external-service app and pointing it at the port we exposed above. Create an external-service app, enable ingress, add a Host, and set the HostName to your app's Cloudflare DNS subdomain.

Under "Networking and Services", set the service IP to your TrueNAS (TN) IP and set the port to the one you exposed above.

Save the dialog and the app should be accessible!
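If the app isn't reachable, it can help to check each hop separately: first the TrueNAS IP and port that the external-service points at, then the Cloudflare hostname that goes through ingress. Below is a minimal sketch using curl. `http_status` is a hypothetical helper, and the IP, port, and hostname in the usage note are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: print the HTTP status code a URL returns.
# curl prints 000 when it gets no HTTP response at all (e.g. connection refused).
http_status() {
  url="$1"
  case "$url" in
    http://*|https://*) ;;
    *) echo "expected an http:// or https:// URL: $url" >&2; return 2 ;;
  esac
  # -s: no progress output; -o /dev/null: discard the body;
  # -w: print just the status code; --max-time: don't hang forever.
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 10 "$url"
}
```

Usage: `http_status http://192.168.1.10:8280/` (placeholder TN IP and port), then `http_status https://rss.example.com/` (placeholder subdomain). Suppose the first returns a real status code but the second does not. In that case, the problem is likely in the external-service or ingress settings rather than in the compose app.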