jobsoftinc

Cadet · Joined Oct 27, 2015 · Messages: 7

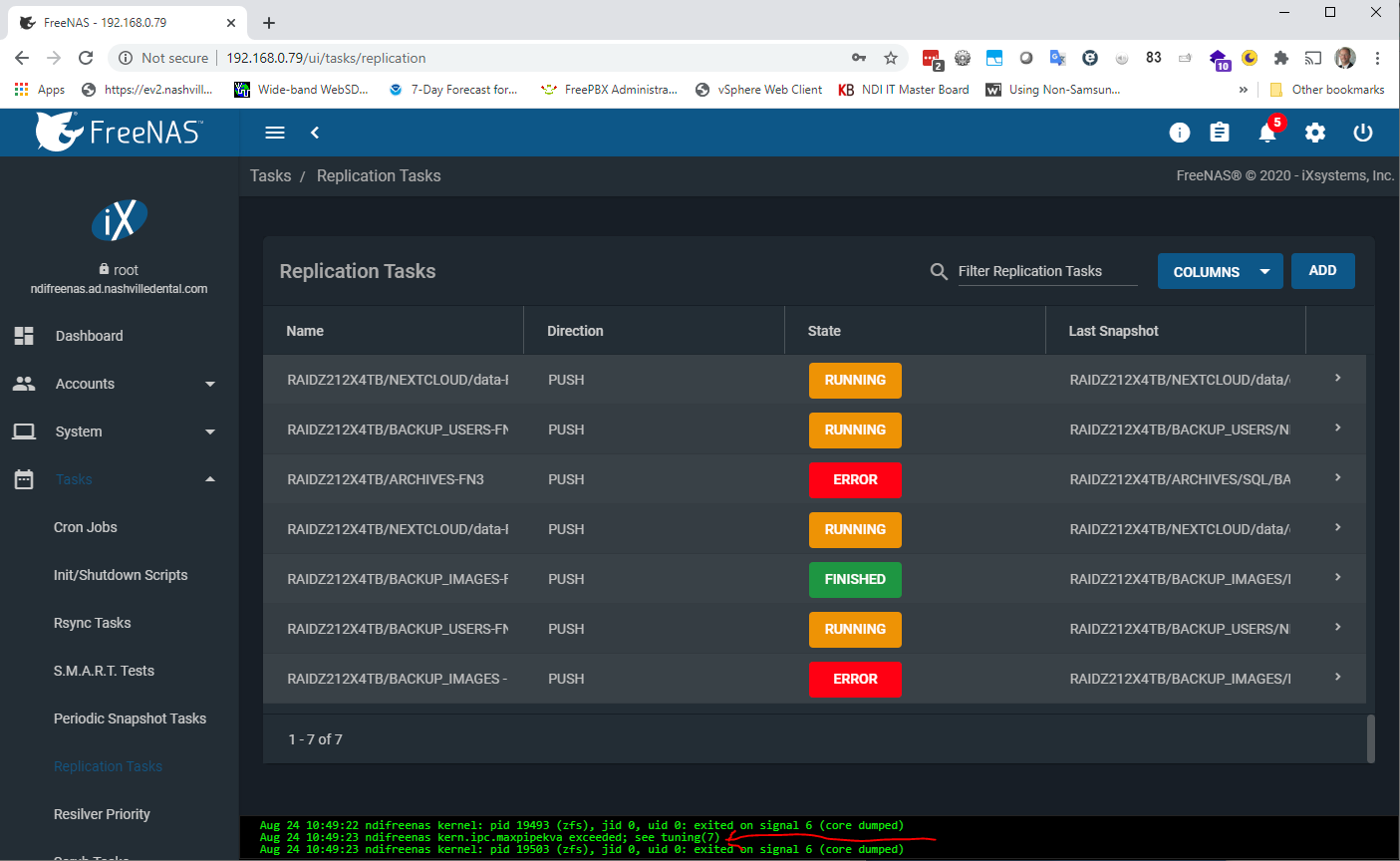

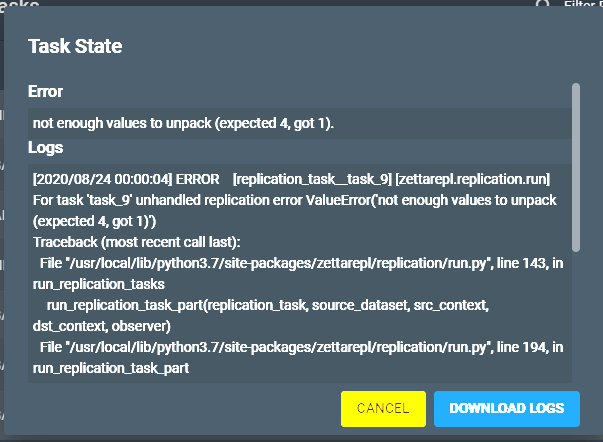

Not sure what additional info you guys would need, but I've got several periodic snapshot tasks replicating to two different FreeNAS boxes, both on the same release, on the same gigabit LAN. This HAD been working very smoothly for several months, too! But I think after one of the updates back in July, I suddenly started getting the error `kern.ipc.maxpipekva exceeded`, followed by a Signal 6 core dump of `zfs`. If I reboot the main server, it goes back to replicating, for a while. I tried increasing this parameter in the FreeNAS GUI, but while the error seems to take longer to resurface, it always comes back.
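One way to see whether pipe KVA usage creeps toward the limit between reboots is to poll the current usage next to the limit named in the error. This is a minimal sketch, assuming a FreeBSD-based system where `sysctl -n kern.ipc.pipekva` (pipe KVA currently in use) and `sysctl -n kern.ipc.maxpipekva` (the limit) are readable, and that Python 3.7+ is available on the box. The function names, the 60-second interval, and the output format are illustrative choices, not anything FreeNAS ships.

```python
import subprocess
import time


def read_sysctl(name: str) -> int:
    """Return the integer value of a sysctl by shelling out to `sysctl -n`."""
    result = subprocess.run(
        ["sysctl", "-n", name], capture_output=True, text=True, check=True
    )
    return int(result.stdout.strip())


def usage_percent(used: int, limit: int) -> float:
    """Express `used` as a percentage of `limit`."""
    if limit <= 0:
        raise ValueError("limit must be positive")
    return 100.0 * used / limit


def watch(interval_s: int = 60) -> None:
    """Print pipe KVA usage against the limit every `interval_s` seconds."""
    while True:
        used = read_sysctl("kern.ipc.pipekva")
        limit = read_sysctl("kern.ipc.maxpipekva")
        stamp = time.strftime("%Y-%m-%d %H:%M:%S")
        print(f"{stamp} pipekva={used} maxpipekva={limit} "
              f"({usage_percent(used, limit):.1f}%)")
        time.sleep(interval_s)


if __name__ == "__main__":
    watch()
```

If the percentage keeps climbing across replication runs instead of returning to a baseline, that trend (with timestamps) would be useful to include alongside the core dump when reporting the problem.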

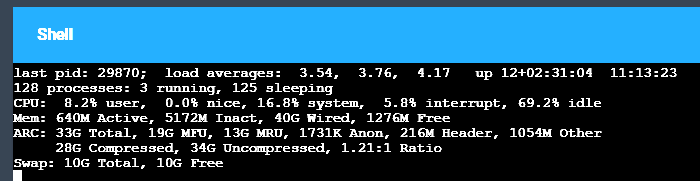

The server is a Dell C2100 with dual Xeon L5630s and 48 GB RAM.

Snapshots are taken every 15 minutes and kept for 2 weeks. Most incrementals are small to nothing in size. And again, this had been running with no issues for at least 2 months before this anomaly first appeared.
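For scale, a quick count of how many snapshots that schedule keeps per task. This sketch assumes the task fires around the clock, every day, with no begin/end window restricting it; `retained_snapshots` is a hypothetical helper for the arithmetic, not a FreeNAS function.

```python
def retained_snapshots(interval_minutes: int, retention_days: int) -> int:
    """Snapshots alive at once for a task that fires every `interval_minutes`
    around the clock and keeps each snapshot for `retention_days` days."""
    per_day = (24 * 60) // interval_minutes  # 96 per day at 15-minute intervals
    return per_day * retention_days


if __name__ == "__main__":
    # Schedule from the post: every 15 minutes, kept for 2 weeks.
    print(retained_snapshots(15, 14))  # 1344
```

So each periodic snapshot task holds roughly 1,344 snapshots at steady state, multiplied by the number of tasks replicating.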

Any thoughts anyone?

Thanks!

Mark
