Hi,

I'm running a FreeNAS 9.2.18 based server (Supermicro 1027R-WRF, 16 GB ECC RAM, 4 x 128 GB Samsung 850 Pro SSDs, 4 Gigabit LAN ports) with all-SSD RAID 10 storage, connected to an ESXi 5.5 host.

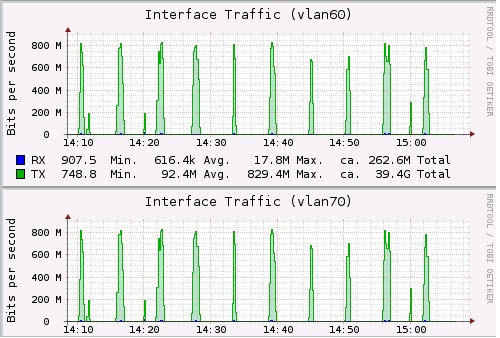

dd speed tests run directly on the storage are pretty impressive; the disappointing part comes when I benchmark the ZFS volume over iSCSI. I configured an MPIO iSCSI portal in FreeNAS using all 4 LAN ports (I segmented my ProCurve switch into 4 VLANs, each with its own subnet), and I managed to saturate all the paths (VMware Round Robin) only after lowering the Round Robin IOPS limit on the iSCSI paths from the default of 1000 to 1.

Now the speed is pretty decent, but I can't seem to max out the Gigabit links: each path tops out at 95.3 MB/s, where I'd expect about 125 MB/s.
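As a rough sanity check on that expectation, here is a minimal sketch of how much TCP payload a single Gigabit link can carry once per-frame overhead is subtracted. The header sizes are the standard Ethernet, IPv4 and TCP values; the function name and the assumption of a 1500-byte MTU are mine, not measured on this setup, and the small per-PDU iSCSI header is ignored.

```python
# Gigabit Ethernet line rate in bits per second.
LINE_RATE_BITS = 1_000_000_000

def max_payload_mb_per_s(mtu=1500, ip_header=20, tcp_header=20):
    """Upper bound on TCP payload throughput for one Gigabit link, in MB/s.

    Assumes every frame is full-sized and ignores iSCSI PDU headers,
    retransmissions and ACK traffic, so real results will be lower.
    """
    # Bytes on the wire that are not part of the IP packet:
    # preamble (7) + start-of-frame delimiter (1) + Ethernet header (14)
    # + frame check sequence (4) + inter-frame gap (12).
    wire_overhead = 7 + 1 + 14 + 4 + 12
    payload = mtu - ip_header - tcp_header
    frame_on_wire = mtu + wire_overhead
    raw_mb_per_s = LINE_RATE_BITS / 8 / 1e6  # 125 MB/s before overhead
    return raw_mb_per_s * payload / frame_on_wire

if __name__ == "__main__":
    print(f"MTU 1500, plain TCP:      {max_payload_mb_per_s():.2f} MB/s")
    print(f"MTU 1500, TCP timestamps: {max_payload_mb_per_s(tcp_header=32):.2f} MB/s")
    print(f"MTU 9000, plain TCP:      {max_payload_mb_per_s(mtu=9000):.2f} MB/s")
```

So under these assumptions the realistic ceiling per path is a bit below 125 MB/s even in the best case, but still well above the 95.3 I'm seeing.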

Is there a bottleneck on the FreeNAS config side? I tried every setting, including the experimental iSCSI target, but I couldn't sort it out. Any advice from the experts would be much appreciated!
