rmccullough


Will this patch be ported to 11.2?

iXsystems has backported the bhyve patch to FreeNAS 11.3.

I have checked with

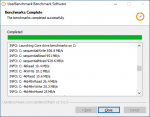

It works very well, and it is faster than before.

- FreeNAS-11.3-RC2

- virtio-win-0.1.164.iso

- Windows Server 2019 Evaluation

- virtio disk and virtio network

Therefore, I think running Windows in a bhyve VM on FreeNAS is now a viable solution.