AFAIK ZFS allows creation of user metadata on datasets, so I would assume that the devs have created a retention parameter. Storing a delete date would certainly simplify the purge process, as the deletion time can be calculated easily when the snapshot is created. This would only need to be done once, and then checked repeatedly at intervals. The creation time already matches the snapshot name. Is there a different property that lists the expiration date or retention time?

11.3: Periodic snapshots without lifetime in name!

- Thread starter vicmarto

- Start date

fracai

Guru

- Joined

- Aug 22, 2012

- Messages

- 1,212

I do know that if you create a snapshot task with a year retention that runs every hour, then delete that task and make a new one with an hour retention, it will delete snapshots older than an hour. It behaves as if the snapshot task finds anything that matches its name and deletes it if it is older than the retention period.

AFAIK ZFS allows creation of user metadata on datasets, so I would assume that the devs have created a retention parameter. Storing a delete date would certainly simplify the purge process, as the deletion time can be calculated easily when the snapshot is created. This would only need to be done once, and then checked repeatedly at intervals.

It sounds like the snapshot script is not using any additional properties and just looking at the creation date and the current retention time. This might be the right move for the current implementation. Otherwise you run the risk of creating snapshots set to expire in 10 years, realizing that is too long, changing the retention, and forgetting that you have several really old snapshots hanging around. Currently it's possible you'd expect those old snapshots to stick around as that's what you originally asked for, but FreeNAS is trying to make a smart decision here and clean up the old stuff. It's also entirely possible that you accidentally change the retention time and lose all your snapshots. Given that everything should be listed in the interface I may be coming around to the idea that there really should be a "delete date property" as it'd be better to save extra snapshots than it would to delete them early.
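To make the trade-off concrete, here is a minimal sketch of the two policies being compared. It is not FreeNAS code; the function names and dates are made up for illustration. One function checks a snapshot's age against whatever the task's retention is right now, the other honors a delete date fixed when the snapshot was taken.

```python
from datetime import datetime, timedelta

def expired_by_current_retention(created, retention, now):
    """Behavior described above: compare snapshot age to the task's *current* retention."""
    return now - created > retention

def expired_by_stored_date(delete_after, now):
    """Hypothetical alternative: honor a delete date fixed at snapshot creation."""
    return now > delete_after

created = datetime(2020, 1, 1, 0, 0)
now = datetime(2020, 3, 1, 0, 0)

# The snapshot was taken while the task kept snapshots for a year.
original_retention = timedelta(days=365)
stored_delete_after = created + original_retention  # computed once, at creation

# Later the task's retention is changed (maybe by accident) to one week.
current_retention = timedelta(weeks=1)

purged_now = expired_by_current_retention(created, current_retention, now)
purged_with_stored_date = expired_by_stored_date(stored_delete_after, now)

print(purged_now)               # the two-month-old snapshot is past one week
print(purged_with_stored_date)  # the stored date is still in the future
```

With the first policy a shortened retention immediately makes old snapshots eligible for deletion; with the second they survive until the date they were originally given.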

I wonder though, does this mean you can only have a single retention period?

I'll take the opportunity to plug my own ZFS Rollup scripts that can avoid this situation. Set up a single, frequent, recursive snapshot task with a long retention time for the root of your pool and then use the scripts to prune excessive snapshots as they age. Read about them on the forum here or the source at GitHub.

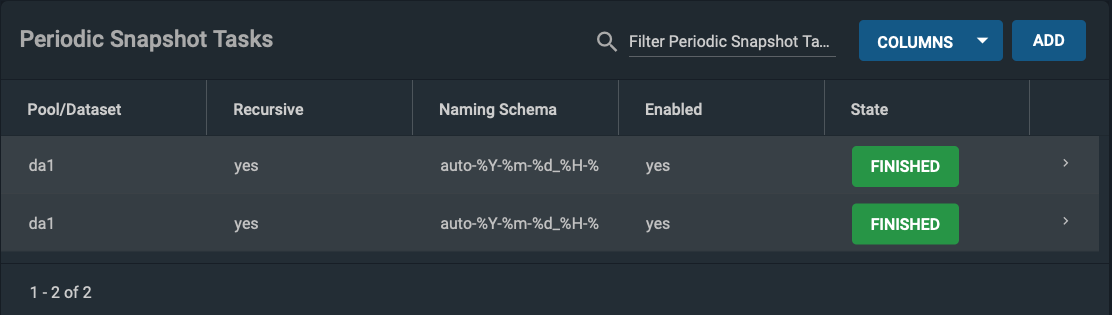

Those are valid points. I do know that when I was testing 11.3 on a VM, if I created two snapshot tasks for the same dataset with the same snapshot naming format, only one would run. But if I named the snapshots differently by adding the missing retention period back, then they would both work, and the short-retention snapshots would be cleaned up while the longer ones would stick around. Basically it worked just like it did prior to 11.3.

Somewhat related, but unfortunately today marks the second time I've upgraded to 11.3 and lost snapshots. Somehow I keep losing my old snapshots that are supposed to be retained for a year. I didn't see this in my testing inside the VM, so it seems to be related to the upgrade process and the normal processing after that. Hopefully it's just something that happens once and functions correctly after that.

The problem with the current Naming Schema is that tasks fail when snapshot names overlap. For example:

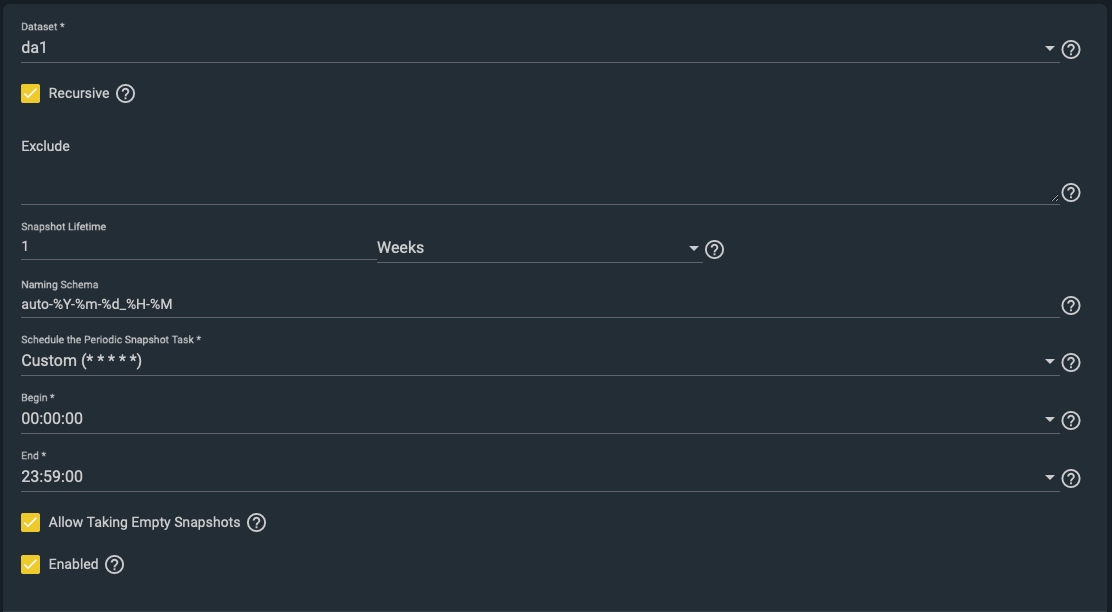

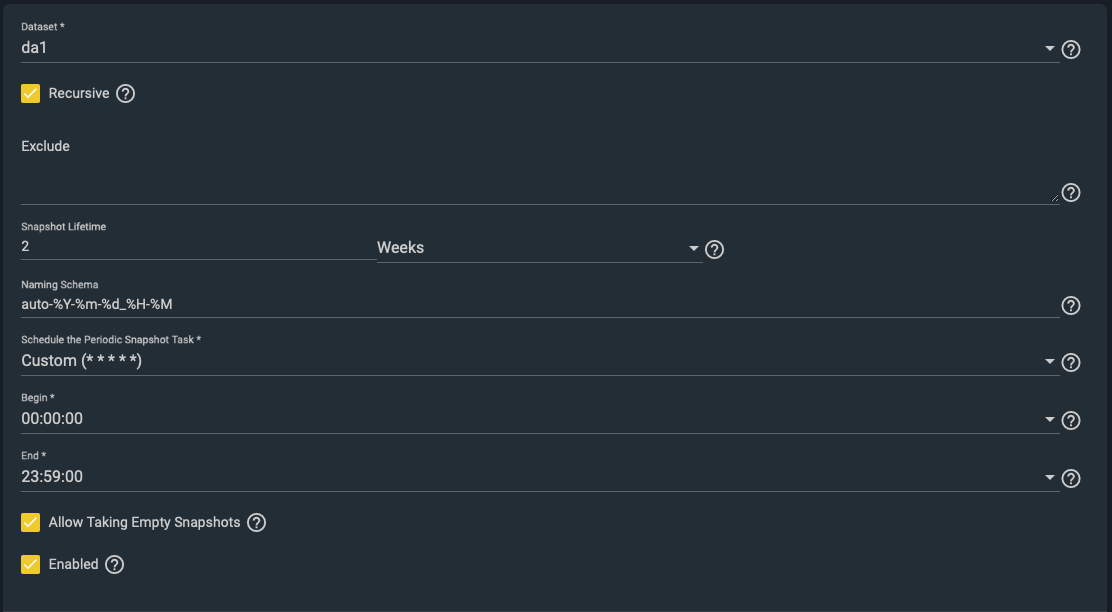

These two Periodic Snapshot Tasks:

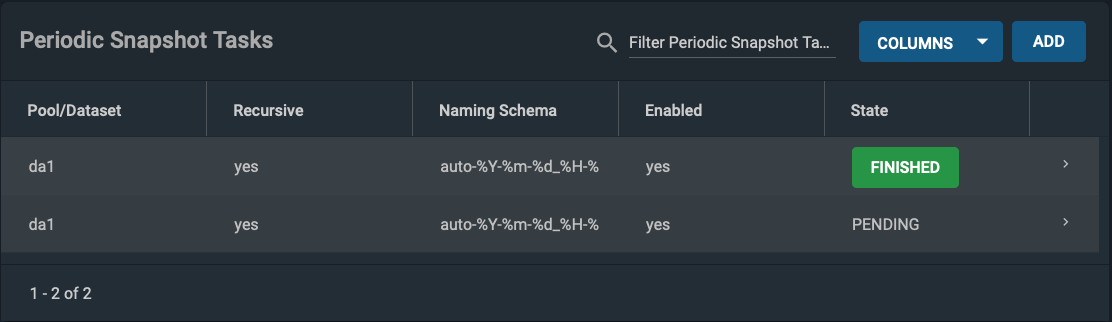

The first executes, the second never executes. Because its snapshot name would be the same, I presume:

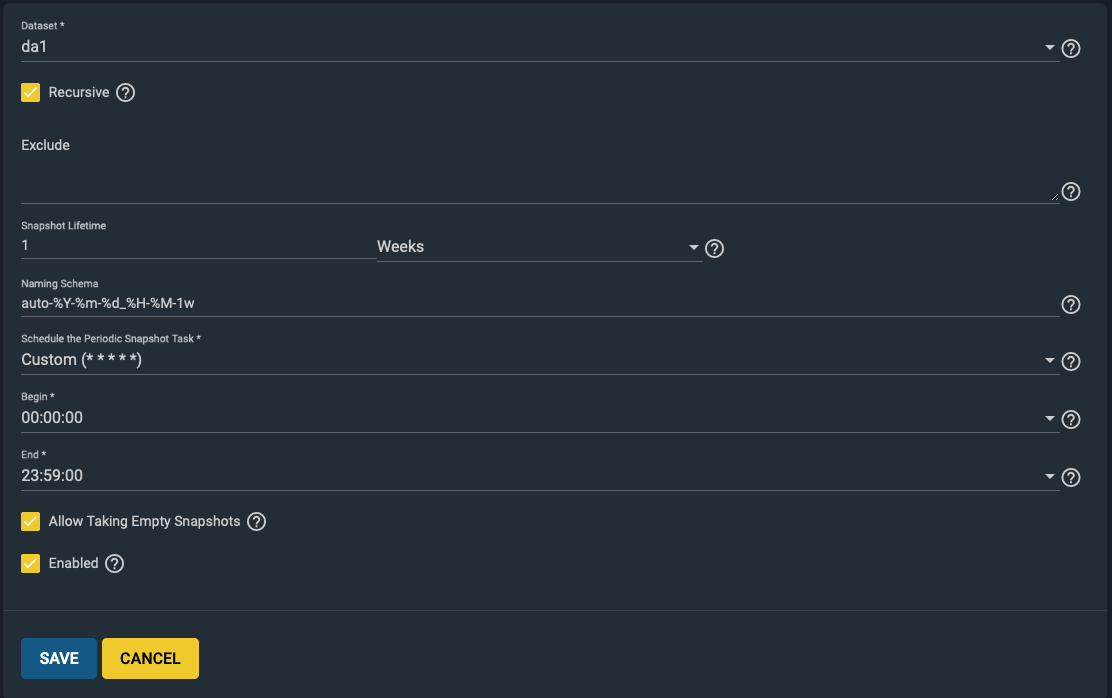

Let's now change the Naming Schema to also include the lifetime, and see what happens:

Result: both tasks execute!
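The name collision can be reproduced outside FreeNAS with plain strftime formatting. This is only a sketch: the schema string below is an assumption inferred from snapshot names like auto-2020-03-28-20-00, and snapshot_name is a made-up helper, not part of the FreeNAS middleware.

```python
from datetime import datetime

def snapshot_name(schema, when):
    """Expand a strftime-style naming schema for a given run time."""
    return when.strftime(schema)

# Both tasks fire at the same moment.
run_time = datetime(2020, 3, 28, 20, 0)

# Assumed schema without the lifetime: both tasks produce the same name.
same_a = snapshot_name("auto-%Y-%m-%d-%H-%M", run_time)
same_b = snapshot_name("auto-%Y-%m-%d-%H-%M", run_time)

# Lifetime appended by hand: the names no longer overlap.
with_2w = snapshot_name("auto-%Y-%m-%d-%H-%M-2w", run_time)
with_1y = snapshot_name("auto-%Y-%m-%d-%H-%M-1y", run_time)

print(same_a, same_b)    # identical names, so only one snapshot can exist
print(with_2w, with_1y)  # distinct names, so both tasks can create theirs
```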

- Joined

- Dec 8, 2017

- Messages

- 442

I ran into the same issue where overlapping tasks now fail. Has there been any ticket submitted for this? Even if the naming change is on purpose (which seems less than ideal), we should be able to create multiple snapshot tasks for the same dataset with different retention periods.

The ticket was created 5 days ago, but has not yet received any attention: https://jira.ixsystems.com/browse/NAS-105099

Vladimir Vinogradenko

Dabbler

- Joined

- Mar 3, 2019

- Messages

- 17

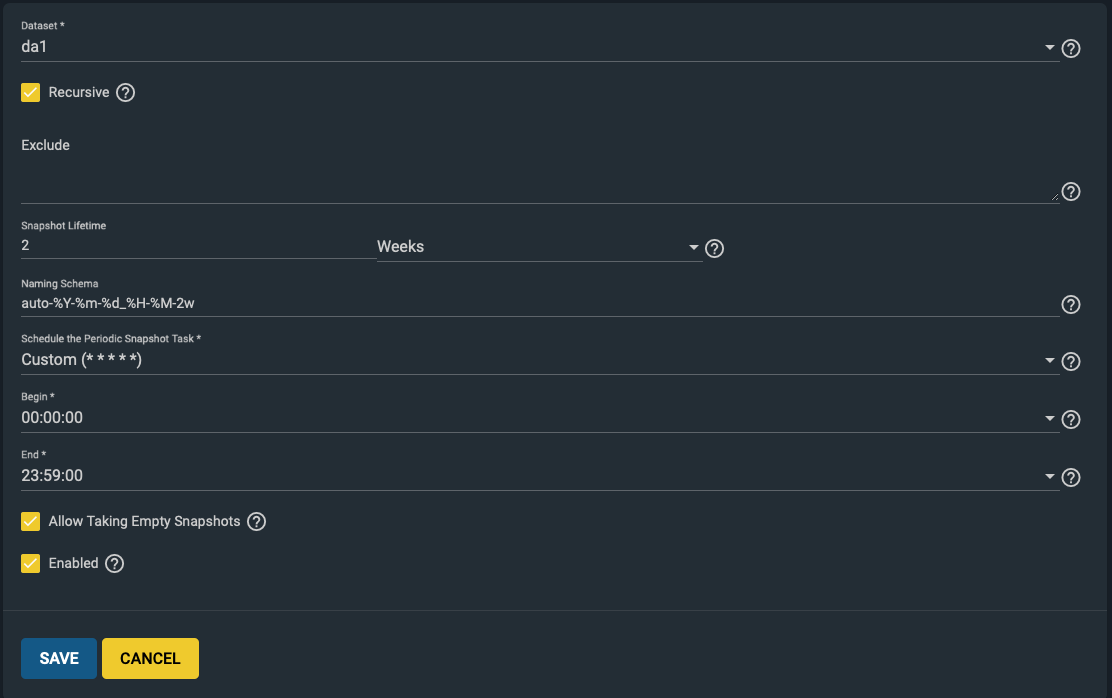

In 11.3 we have decided to use a different retention mechanism, so suffixes like -2w or -1y are no longer necessary in snapshot names. You can put them back using the Naming Schema option, but we don't do this by default as it's not obligatory anymore.

Retention does not use ZFS metadata (it's slow to access with a high number of snapshots); instead, snapshots that are subject to deletion are calculated from existing periodic snapshot tasks and replication tasks. So a snapshot's lifetime is no longer decided at its creation.

The bug where two periodic snapshot tasks create the same snapshot but only one is displayed as "FINISHED" (in reality, they both succeed, and both will initiate replication if it is set up) will be fixed in 11.3-U2.

- Joined

- Dec 8, 2017

- Messages

- 442

@Vladimir Vinogradenko does that mean that deleting a snapshot task and recreating it (or just deleting it) stops any snapshots from being automatically deleted at their expiration period? Did snapshots used to get deleted automatically regardless of a snapshot task to delete them?

Vladimir Vinogradenko

Dabbler

- Joined

- Mar 3, 2019

- Messages

- 17

@Vladimir Vinogradenko does that mean that deleting a snapshot task and recreating it (or just deleting it) stops any snapshots from being automatically deleted at their expiration period?

Yes, deleting all periodic snapshot tasks for a specific snapshot naming schema will stop snapshots with this naming schema from being deleted. But you can recreate periodic snapshot tasks at any moment, and retention will be resumed; they don't have any hidden "list of snapshots to be deleted".

Did snapshots used to get deleted automatically regardless of a snapshot task to delete them?

No, it worked pretty much the same way in 11.2.

nirvdrum

Cadet

- Joined

- Mar 1, 2016

- Messages

- 2

@Vladimir Vinogradenko Can you please clarify how different snapshot schedules for the dataset will work? I'm struggling to see how different retention schedules can be employed if each task ends up using the same snapshot name pattern. How do you map a snapshot back to a particular schedule in that case?

Vladimir Vinogradenko

Dabbler

- Joined

- Mar 3, 2019

- Messages

- 17

How do you map a snapshot back to a particular schedule in that case?

In the new replication engine, snapshot names and creation times are aligned with the schedule, so we can do this using the periodic snapshot task's schedule. E.g., for a periodic snapshot task that is scheduled to run every day at 20:00, snapshot auto-2020-03-28-20-00 will be mapped to this task, but auto-2020-03-28-21-00 will not.

If a snapshot is mapped to multiple periodic snapshot tasks, the longest retention time is used (e.g. if one task keeps snapshots for a month, but there is another one that keeps them for a year, it will only be deleted in a year).
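As a rough illustration of the mapping described above, the sketch below parses the time out of a snapshot name, checks which tasks' schedules it lines up with, and applies the longest retention among them. This is not the actual FreeNAS implementation: the Task class, the daily-only schedule model, and the schema string are all simplifying assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

SCHEMA = "auto-%Y-%m-%d-%H-%M"  # assumed naming schema, inferred from the example names

@dataclass
class Task:
    """Hypothetical daily periodic snapshot task."""
    hour: int
    minute: int
    retention: timedelta

def matches(task, created):
    """A snapshot maps to a task if its time lines up with the task's schedule."""
    return created.hour == task.hour and created.minute == task.minute

def retention_for(name, tasks):
    """Longest retention among the tasks the snapshot maps to, or None if unmapped."""
    created = datetime.strptime(name, SCHEMA)
    retentions = [t.retention for t in tasks if matches(t, created)]
    return max(retentions) if retentions else None

def should_delete(name, tasks, now):
    """Delete only snapshots that some task claims and that outlived their retention."""
    retention = retention_for(name, tasks)
    if retention is None:
        return False  # no task maps to it, so retention leaves it alone
    return now - datetime.strptime(name, SCHEMA) > retention

keep_month = Task(hour=20, minute=0, retention=timedelta(days=30))
keep_year = Task(hour=20, minute=0, retention=timedelta(days=365))
now = datetime(2020, 6, 1, 0, 0)

print(retention_for("auto-2020-03-28-20-00", [keep_month, keep_year]))  # the year wins
print(retention_for("auto-2020-03-28-21-00", [keep_month, keep_year]))  # not mapped
print(should_delete("auto-2020-03-28-20-00", [keep_month], now))
print(should_delete("auto-2020-03-28-20-00", [keep_month, keep_year], now))
```

Because the decision is recomputed from whatever tasks exist at the time, removing the one-year task in this sketch makes the same snapshot eligible for deletion on the next pass.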

JayG30

Contributor

- Joined

- Jun 26, 2013

- Messages

- 158

Is there any side effect of adding the snapshot lifetime to the end of the naming schema manually? Will it cause problems with retention, particularly in a system where the user is setting up multiple snapshot tasks of varying retention on the same datasets? It seems like if you want that setup (hourly for a day, daily for a week, weekly for a month, monthly for a year, or similar) you still need multiple snapshot tasks. The appended retention helps the end user more easily verify proper behavior and demonstrate proof in a work environment (screenshots as part of a test plan document).

Also, at the very least the tooltip verbiage should be changed. As of now it makes it sound like the retention WILL be appended with no extra work by the end user, which is now inaccurate.
