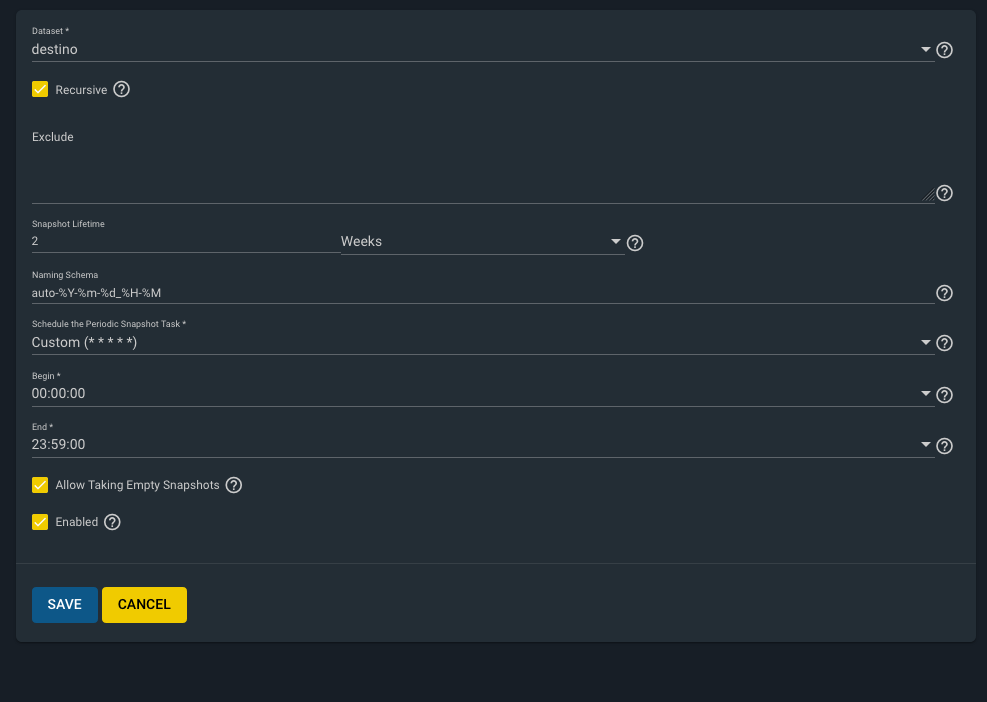

With a periodic snapshot configured as this:

I'm getting snapshot names without the -2w lifetime suffix:

    # zfs list -r -H -o name -t snapshot destino
    destino@auto-2020-02-16_15-28
    destino@auto-2020-02-16_15-29
    destino@auto-2020-02-16_15-30
    destino@auto-2020-02-16_15-31
    destino@auto-2020-02-16_15-32
    destino@auto-2020-02-16_15-33
    destino@auto-2020-02-16_15-34
    destino@auto-2020-02-16_15-35
    destino@auto-2020-02-16_15-36
    destino@auto-2020-02-16_15-37
    destino@auto-2020-02-16_15-38
    destino@auto-2020-02-16_15-39
    destino@auto-2020-02-16_15-40
    destino@auto-2020-02-16_15-41
    destino@auto-2020-02-16_15-42
    destino@auto-2020-02-16_15-43
    destino@auto-2020-02-16_15-44

The expected behavior is something like: destino@auto-2020-02-16_15-44-2w.

Why? Can you replicate this behavior?
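
To make the difference concrete, here is a minimal Python sketch of the names I'm seeing versus the names I expected. The schema string `auto-%Y-%m-%d_%H-%M` and the helper `snapshot_name` are my own assumptions, inferred from the snapshot names listed in this post; this is only an illustration, not how the snapshot task is actually implemented.

```python
from datetime import datetime

# Assumed naming schema, inferred from the snapshot names in this post;
# the schema actually configured in the task may differ.
NAMING_SCHEMA = "auto-%Y-%m-%d_%H-%M"


def snapshot_name(dataset: str, when: datetime, lifetime: str = "") -> str:
    """Hypothetical helper: build 'dataset@name' from the schema,
    optionally appending a lifetime suffix such as '2w'."""
    name = when.strftime(NAMING_SCHEMA)
    if lifetime:
        name = f"{name}-{lifetime}"
    return f"{dataset}@{name}"


when = datetime(2020, 2, 16, 15, 44)
observed = snapshot_name("destino", when)                 # what I get
expected = snapshot_name("destino", when, lifetime="2w")  # what I expected
print(observed)
print(expected)
```

If the name is built purely from a strftime-style schema like the one assumed here, a lifetime suffix would only show up when something appends it explicitly.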